In this guide I will go through the steps of setting up your EC2 instance for your Django project and deploying it with CI/CD using GitHub Actions.

Any commands with the "$" at the beginning should be run on your local machine and any with "%" should be run on your server.

- Project Layout

- Create an AWS Account

- Create an AWS EC2 Instance

- EC2 Environment Setup

- Setting up Continuous Deployment

- Setting up your Domain

- HTTPS

If you already have a working project go ahead and move on to either Creating your AWS Account or Creating your EC2 Instance.

Otherwise feel free to use the generic Django project I created on Github here.

Here is the project layout:

django-app

|___ backend/ (Django Backend settings)

| |___ settings.py

|___ static_files/

|___ templates/ (Django Templates)

| |___ index.html

|___ manage.py

|___ requirements.txt

I will be using a generic Django project which will run its own server. The app simply displays the default Django app view.

- On your terminal, clone the repository with Git:

$ git clone https://github.com/rmiyazaki6499/django-app.git

For those who are not interested in setting up the project manually, or would simply rather not worry about downloading Python and its dependencies, I have created a Dockerfile and docker-compose.yml file to help create a container with everything you need to run the django-app.

If you would like to build the project manually without running a Docker container, skip ahead to the manual build directions further down.

To make this as easy as possible, we will be using Docker Compose to create our container.

- If you do not have Docker yet, start by downloading it if you are on a Mac or Windows: https://www.docker.com/products/docker-desktop

- Or if you are on a Linux Distribution follow the directions here: https://docs.docker.com/compose/install/

- To confirm you have Docker Compose, open up your terminal and run the command below:

$ docker-compose --version

docker-compose version 1.26.2, build eefe0d31

- Clone the repo to your local machine:

$ git clone https://github.com/rmiyazaki6499/django-app.git

- Go into the project directory to build and run the container with:

$ cd django-app/

$ docker-compose up --build

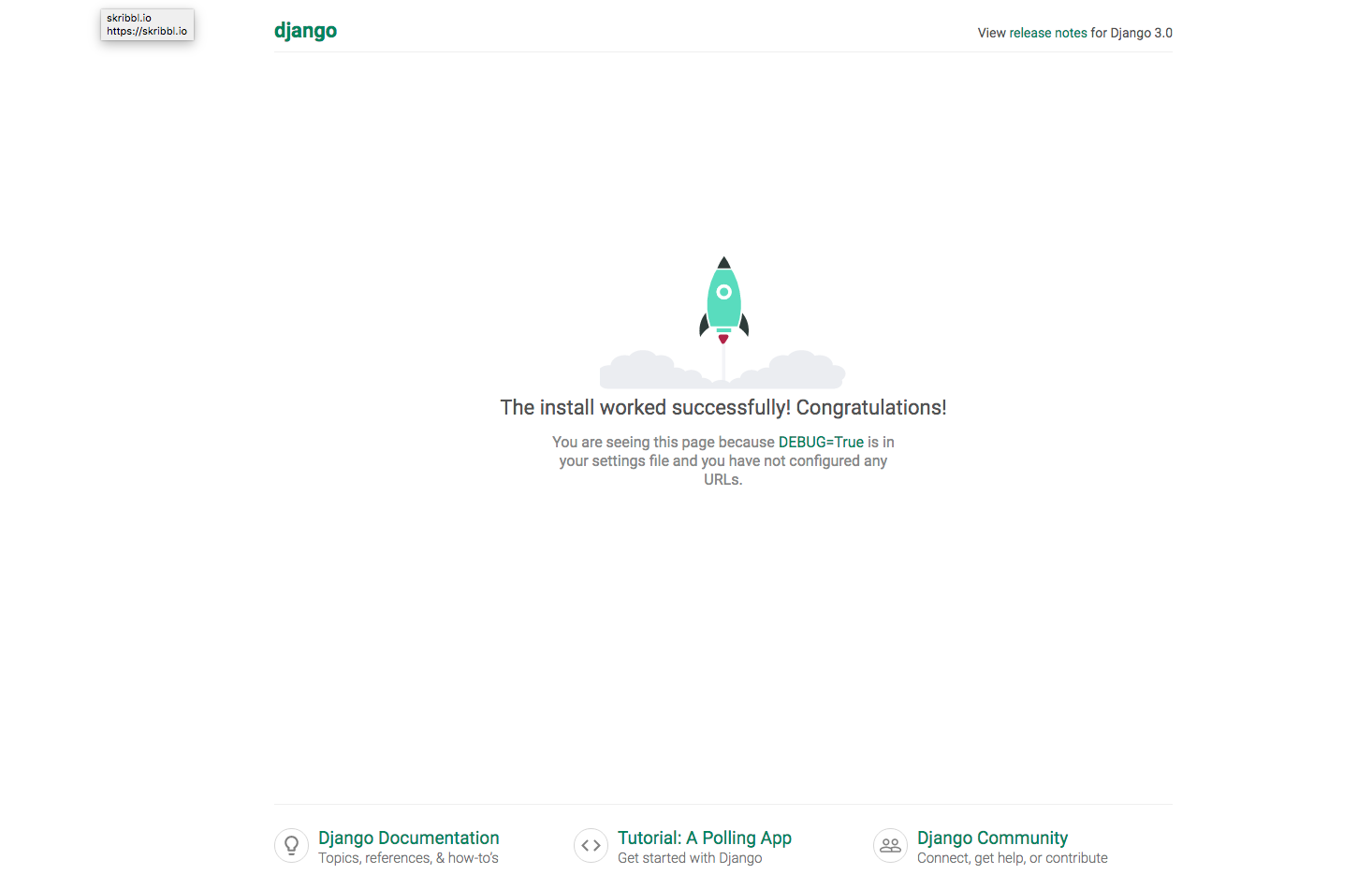

Navigate to http://localhost:8000 to view the site on the local server. It should look something like this:

If you would rather not use Docker, or are curious to build the django-app manually, follow the directions below.

- Go into the project directory and make sure you create a virtual environment for your project by either using venv or pipenv:

$ cd django-app/

$ python3 -m venv env

$ source env/bin/activate

- In order to install Python dependencies, make sure you have pip (https://pip.pypa.io/en/stable/installing/) and run this command from the root of the project:

$ pip3 install -r requirements.txt

- We will now migrate the database and collect the static files:

$ python3 manage.py makemigrations

$ python3 manage.py migrate

$ python3 manage.py collectstatic

- To run the development server, use the following command:

$ python3 manage.py runserver

- To run the production server, use the following command:

$ ENV_PATH=.env-prod python3 manage.py runserver

Navigate to http://localhost:8000 to view the site on the local server. It should look something like this:

Before creating an EC2 Instance, you will need an AWS account.

If you don't have one already, check out this step-by-step guide by Amazon to create your AWS account here.

Before we move on to create an EC2 instance, make sure that you are making your account as secure as possible by following the prompt on your Security Status checklist.

Once you have set up your user account we will jump in to creating our first EC2 Instance.

Note: This tutorial assumes you have at least access to the AWS Free Tier products

Amazon's EC2 or Elastic Compute Cloud is one of the products/services AWS provides and is one of the main building blocks for many of AWS's services. It allows users to rent virtual computers on which to run their own computer applications.

You can learn more about EC2 here.

Start out by going into the AWS Console and going to the EC2 console. An easy way to get there is through the Services link at the top and searching EC2 in the search prompt.

We recommend setting your AWS Region to the one closest to you or your intended audience. However, please note that not all AWS Services will be available depending on the Region. For our example, we will be working out of the us-east-1 as this Region supports all AWS Services.

You should end up at a screen which looks like this (As of July 2020).

Go to the Running Instances link on the EC2 Console Dashboard to find yourself at this screen.

We will then go to Launch Instance which will give you several prompts.

AWS will first ask you to choose an AMI. If you do not already have an AMI set up, choose an OS that you would like to work in. For our example, we will use Ubuntu 18.04 (64-bit).

Next we will choose an instance type. We will choose the t2.micro type as it is eligible for the free tier.

Once selected, click forward to Next: Configure Security Group.

This is important! Without configuring Security groups the ports on the instance will not be open and therefore your app will not be able to communicate through your instance.

Set your Security Group Setting like so:

I will explain each of the ports we will allow as the firewall rules for traffic.

| Type | Port Range | Description. |

|---|---|---|

| SSH | 22 | Port for SSH'ing into your server |

| HTTP | 80 | Port for HTTP requests to your web server |

| HTTPS | 443 | Port for HTTPS requests to your web server |

| Custom TCP | 8000 | Port which Django will run |

| Custom TCP | 5432 | Database port (Postgres for this example) |

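As the warning near the bottom says, you do not want to set your Source to Anywhere for ports such as SSH. Set it to your own IP address or to any IP which will need access to the instance. HTTP and HTTPS are the exception if your site is meant to be public, since visitors need to reach those ports. I left the setting as Anywhere in the screenshot only so that I do not show my IP address.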
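If you prefer the command line over the console, the same rules can be sketched with the AWS CLI. This is only a sketch: it assumes the AWS CLI is installed and configured, that the site should be publicly reachable on HTTP and HTTPS, and the security group ID and IP address in the usage line are made-up placeholders. It prints the commands instead of running them so you can review them first.

```shell
#!/bin/sh
# Sketch only: prints AWS CLI commands for the ports in the table above.
# The group ID and CIDR passed in are placeholders you supply yourself.

# ingress_cmd GROUP_ID PORT CIDR: print one ingress rule command
ingress_cmd() {
    printf 'aws ec2 authorize-security-group-ingress --group-id %s --protocol tcp --port %s --cidr %s\n' \
        "$1" "$2" "$3"
}

# print_rules GROUP_ID MY_CIDR: SSH, Django and Postgres only from MY_CIDR,
# HTTP and HTTPS from anywhere so visitors can reach the site
print_rules() {
    for port in 22 8000 5432; do
        ingress_cmd "$1" "$port" "$2"
    done
    for port in 80 443; do
        ingress_cmd "$1" "$port" "0.0.0.0/0"
    done
}
```

For example, `print_rules sg-0123456789abcdef0 203.0.113.10/32` prints five commands. Once they look right, you could pipe the output to `sh` to apply them.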

Click forward to Review and Launch to view all configurations of your Instance/AMI. If the configurations look correct go ahead and hit Launch.

A key pair consists of a public key that AWS stores, and a private key file that you store. Together they allow you to connect to your instance securely.

If this is your first time creating a key pair for your project, select Create a new key pair from the drop down and add the name of the key pair.

Once you have downloaded the key pair make sure to move the .pem file to the root directory of your project.

Make sure to check the checkbox acknowledging that you have access to the private key pair and click Launch Instances.

This should take you to the Launch Status page.

Find your way back to the Instances page in the EC2 Dashboard. It should look something like this:

If you go to your instance right after launching, the instance may still be initializing. After a few minutes, under the Status Checks tab, it should show 2/2 checks... If it does, congratulations! You have your first EC2 Instance.

Before we access our EC2 Instance it is important to first allocate an Elastic IP and associate it with our EC2 instance.

An Elastic IP is a dedicated IP address for your EC2 instance. This is important because although our instance does have a public IP address assigned out of the box, that address does not persist when the instance is stopped and started again. With an Elastic IP address, you can also mask the failure of an instance or software by rapidly remapping the address to another instance in your account.

Therefore, by using an Elastic IP we have a dedicated IP through which users on the internet can reach our instance.

Note: AWS charges for an Elastic IP that is allocated but not associated with a running instance, so make sure to associate it with your EC2 instance.

On the EC2 Dashboard look under the Network & Security tab and go to Elastic IPs.

It should take you here:

Click on Allocate Elastic IP address.

It should take you here:

Go ahead and click Allocate.

This should create an Elastic IP for you.

Next we must associate our Elastic IP with our instance.

With the Elastic IP checked on the left side:

- Go to Actions

- Click on Associate Elastic IP address

- Make sure your Resource type is Instance

- Search for your instance (if this is your first time, it should be the only one)

- Click Associate

Let's check to make sure our Elastic IP is Associated with our instance.

Go to Instances and in the instance details you should see Elastic IP: .
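The same allocate-then-associate flow can also be sketched with the AWS CLI. I am assuming here that the AWS CLI is installed and configured for the Region of your instance; `attach_elastic_ip` is a helper name I made up for this example, and the instance ID you pass in is your own.

```shell
#!/bin/sh
# Sketch only: allocate an Elastic IP and associate it with one instance.
# attach_elastic_ip is a hypothetical helper, not part of the AWS CLI.

# attach_elastic_ip INSTANCE_ID: prints the allocation ID on success
attach_elastic_ip() {
    instance_id="$1"
    # allocate-address creates the address; keep only its AllocationId
    alloc_id=$(aws ec2 allocate-address --domain vpc \
        --query AllocationId --output text) || return 1
    # associate-address binds the new address to the instance
    aws ec2 associate-address --instance-id "$instance_id" \
        --allocation-id "$alloc_id" >/dev/null || return 1
    echo "$alloc_id"
}
```

Calling `attach_elastic_ip <YOUR-INSTANCE-ID>` should leave you in the same state as the console steps above. Doing the two steps back to back also avoids leaving an allocated address sitting unassociated.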

Now that we have our instance and have associated an Elastic IP with it, it is time to connect to our server!

If you have not already, go to the Instances link in the EC2 Dashboard.

With the instance highlighted, click on Connect on the top banner of the Instances Dashboard.

It should give you a pop up with directions on how to connect to your EC2 instance.

Go back to your project root directory and make sure that your .pem file has the correct permissions.

Run the command:

$ chmod 400 *.pem

Next run the command given to you in the example:

$ ssh -i "<KEY-PAIR>.pem" ubuntu@<YOUR-IP-ADDRESS>.compute-1.amazonaws.com

SSH should warn you that the authenticity of the host can't be established and will show an ECDSA key fingerprint.

It will also ask you Are you sure you want to continue connecting (yes/no)?

Type yes and press Enter.

This should take you into the EC2 Instance.

If not, try the ssh command again.

Congratulations, you are inside your EC2 Instance!
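If you get tired of typing the full ssh command, one option is a Host entry in your `~/.ssh/config`. Here is a small sketch that prints such an entry; the alias `django-ec2`, the helper name `ssh_config_entry`, and the key path in the usage line are all placeholders of mine, and I am assuming the default `ubuntu` user from the Ubuntu AMI.

```shell
#!/bin/sh
# Sketch only: print a Host block for ~/.ssh/config.
# ssh_config_entry is a hypothetical helper for this example.

# ssh_config_entry ALIAS HOSTNAME KEYFILE
ssh_config_entry() {
    printf 'Host %s\n' "$1"
    printf '    HostName %s\n' "$2"
    printf '    User ubuntu\n'            # default user on the Ubuntu AMI
    printf '    IdentityFile %s\n' "$3"   # path to your .pem file
}
```

For example, `ssh_config_entry django-ec2 <YOUR-ELASTIC-IP> ~/django-app/<KEY-PAIR>.pem >> ~/.ssh/config` appends the entry, after which `ssh django-ec2` should connect.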

You can think of our EC2 instance like a brand new server. There is nothing besides the Operating System and a few other things AWS has added into our instance.

Before we start building our project there are a few things we must install/configure on our empty server. We will use the following technologies:

- Django and Python3

- pip

- gunicorn

- NGINX

- UFW (Firewall)

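Allow OpenSSH and enable the firewall. Allow OpenSSH before enabling so that the firewall does not cut off your SSH session:

% sudo ufw allow OpenSSH

% sudo ufw enable

% sudo ufw allow 'Nginx Full'

Note: The 'Nginx Full' profile only exists once NGINX is installed. If ufw cannot find the profile, rerun this last command after the install step.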

Check to make sure we are allowing OpenSSH

% sudo ufw status

To Action From

-- ------ ----

Nginx Full ALLOW Anywhere

OpenSSH ALLOW Anywhere

Nginx Full (v6) ALLOW Anywhere (v6)

OpenSSH (v6) ALLOW Anywhere (v6)

Updating packages:

% sudo apt update

% sudo apt upgrade

Installing Python 3, PostgreSQL, NGINX and Gunicorn:

% sudo apt install python3-pip python3-dev python3-venv libpq-dev postgresql postgresql-contrib nginx gunicorn curl

- On your terminal, clone the repository with Git:

% git clone https://github.com/rmiyazaki6499/django-app.git

- Next, go into the project directory and make sure you create a virtual environment for your project by either using venv or pipenv:

% cd django-app/

% python3 -m venv env

% source env/bin/activate

- In order to install Python dependencies, make sure you have pip (https://pip.pypa.io/en/stable/installing/) and run this command from the root of the repo:

% pip3 install -r requirements.txt

Note: Make sure to update your .env file so that your project has the correct Environment Variables necessary to run.

- We will now migrate the database and collect the static files:

% python3 manage.py makemigrations

% python3 manage.py migrate

% python3 manage.py collectstatic

Configure the gunicorn.socket file:

% sudo vim /etc/systemd/system/gunicorn.socket

Use this code:

[Unit]

Description=gunicorn socket

[Socket]

ListenStream=/run/gunicorn.sock

[Install]

WantedBy=sockets.target

Next we will configure the gunicorn.service file:

% sudo nano /etc/systemd/system/gunicorn.service

Use the configurations below:

[Unit]

Description=gunicorn daemon

Requires=gunicorn.socket

After=network.target

[Service]

User=ubuntu

Group=www-data

WorkingDirectory=/home/ubuntu/<YOUR-PROJECT>

ExecStart=/home/ubuntu/<YOUR-PROJECT>/env/bin/gunicorn \

--access-logfile - \

--workers 3 \

--bind unix:/run/gunicorn.sock \

<YOUR-PROJECT>.wsgi:application

[Install]

WantedBy=multi-user.target

Start and enable Gunicorn:

% sudo systemctl start gunicorn.socket

% sudo systemctl enable gunicorn.socket

To check the status of gunicorn:

% sudo systemctl status gunicorn.socket
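To go one step past the status check, you can ask Gunicorn for a page directly over the socket. The sketch below assumes the socket path from the gunicorn.socket file above and that curl is installed (it is part of the apt install line earlier); `check_gunicorn_socket` is just a name I picked for the helper.

```shell
#!/bin/sh
# Sketch only: verify the Gunicorn socket exists and answers a request.
# check_gunicorn_socket is a hypothetical helper for this example.

# check_gunicorn_socket [SOCKET_PATH]
check_gunicorn_socket() {
    sock="${1:-/run/gunicorn.sock}"
    # -S is true only if the path exists and is a socket
    if [ ! -S "$sock" ]; then
        echo "missing socket: $sock"
        return 1
    fi
    # A request over the unix socket makes systemd start gunicorn.service
    curl --silent --show-error --unix-socket "$sock" http://localhost/ >/dev/null || return 1
    echo "gunicorn answered on $sock"
}
```

If the check fails, `sudo journalctl -u gunicorn` usually shows why, and after editing either unit file you need `sudo systemctl daemon-reload` followed by `sudo systemctl restart gunicorn`.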

We have our project now, but we can't see or access it yet.

That is why we need to configure NGINX, our web server, so that we can access it.

To create a new config file and configure NGINX, use the command below (you can use either nano or vim; I personally use vim):

% sudo vim /etc/nginx/sites-available/<YOUR-PROJECT-NAME>

Add this into your config file and replace any of the ALL CAPS Sections with your own details:

server {

listen 80;

server_name YOUR_INSTANCE_IP_ADDRESS;

location = /favicon.ico { access_log off; log_not_found off; }

location /static/ {

root /home/ubuntu/<YOUR-PROJECT>;

}

location /media/ {

root /home/ubuntu/<YOUR-PROJECT>;

}

location / {

include proxy_params;

proxy_pass http://unix:/run/gunicorn.sock;

}

}

We will then enable the config file by linking to the sites-enabled directory.

This is important because otherwise NGINX will simply use the default configuration settings located at /etc/nginx/sites-available/default.

% sudo ln -s /etc/nginx/sites-available/<YOUR-PROJECT-NAME> /etc/nginx/sites-enabled

Here is an example of the server_name line in the config file. Note: You do not need the http:// portion of the IP.

server_name 18.XXX.XXX.XXX;

Save and exit the file:

vim: Shift + zz

nano: ctrl + x and selecting Yes

Once your NGINX config is set up, make sure there are no syntax errors with:

% sudo nginx -t

Restart the NGINX Web Server with:

% sudo systemctl restart nginx

Now if you go to your Elastic IP on your browser it should show the App!

Continuous Deployment is helpful because it saves you the time of having to ssh into your EC2 instance each time you make an update to your code base.

We will be using GitHub Actions and AWS SSM Send-Command created by peterkimzz to implement auto deployment.

For GitHub Actions to work, it needs a way to communicate with our EC2 Instance when there is a push to the master branch of our repo. There are other ways to do this, for example using webhooks with something like Jenkins. But for this example, we will use the SSM Agent as a means of connecting GitHub Actions with our EC2 instance.

You can think of the SSM Agent as a "back door" to our instance. It comes pre-installed on many EC2 AMIs (I believe the Ubuntu and Amazon Linux images have it built in; I am not sure about the others). Even though it is pre-installed, we need to assign an IAM Role to our instance to allow it to have access to SSM.

To create an IAM Role with AmazonSSMFullAccess permissions:

- Open the IAM console at https://console.aws.amazon.com/iam/.

- In the navigation pane, choose Roles, and then choose Create role.

- Under Select type of trusted entity, choose AWS service.

- In the Choose a use case section, choose EC2, and then choose Next: Permissions.

- On the Attached permissions policy page, search for the AmazonSSMFullAccess policy, choose it, and then choose Next: Review.

- On the Review page, type a name in the Role name box, and then type a description.

- Choose Create role. The system returns you to the Roles page.

Once you have the Role created:

- Go to the EC2 Instance Dashboard

- Go to the Instances link

- Highlight the Instance

- Click on Actions

- Instance Settings

- Attach/Replace IAM Role

- Select the SSM Role you had created earlier

- Hit Apply to save changes

With this your EC2 Instance has access to SSM!
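If you want to confirm that the instance has actually registered with SSM (it can take a few minutes after attaching the role), here is a sketch using the AWS CLI from your local machine. It assumes the CLI is configured with credentials that are allowed to call SSM; `ssm_is_online` is a helper name I made up.

```shell
#!/bin/sh
# Sketch only: check whether an instance reports as Online in SSM.
# ssm_is_online is a hypothetical helper for this example.

# ssm_is_online INSTANCE_ID: succeeds only if PingStatus is "Online"
ssm_is_online() {
    status=$(aws ssm describe-instance-information \
        --filters "Key=InstanceIds,Values=$1" \
        --query 'InstanceInformationList[0].PingStatus' \
        --output text) || return 1
    [ "$status" = "Online" ]
}
```

For example: `ssm_is_online <YOUR-INSTANCE-ID> && echo "ready for Send-Command"`. If it never comes online, double check that the IAM Role is attached to the instance.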

Now that our instance can use the SSM Agent, we need to give GitHub Actions some details so that it can access our EC2 instance.

These are provided as GitHub Secrets. They act like environment variables for our project, which is useful because we do not want anyone seeing our Secrets publicly anywhere!

There are three Secrets we will need: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and INSTANCE_ID.

Before we start, there is an article by AWS on how to find your AWS Access Key and Secret Access Key here.

Start by going to your Github project repo:

- Then go to your Settings

- On the menu on the left, look for the link for Secrets

- There, add the three Secrets with these keys:

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- INSTANCE_ID

Once these Secrets are set, we are ready to move on!

This step is optional and mainly makes things easier, since we could also do everything in the next step. We will create a bash script which SSM will run when the GitHub Action is triggered.

Go to your EC2 instance and at the root of your project, create a .sh script:

% vim deploy.sh

Fill the contents with the bash commands we ran to build the project earlier:

#!/bin/sh

sudo git pull origin master

sudo pip3 install -r requirements.txt

python3 manage.py makemigrations

python3 manage.py migrate

python3 manage.py collectstatic --noinput

sudo systemctl restart nginx

sudo systemctl restart gunicorn

I will walk through step-by-step what we are doing at each command:

- git pull origin master makes sure we have the most up-to-date code, which was triggered by a commit to the master branch.

- sudo pip3 install -r requirements.txt installs any new dependencies for the project.

- python3 manage.py makemigrations creates new migrations based on the changes you have made to your models.

- python3 manage.py migrate applies and unapplies migrations.

- python3 manage.py collectstatic --noinput collects static files from each of your applications (and any other places you specify) into a single location that can easily be served in production. The --noinput flag skips the confirmation prompt, which nobody is there to answer when the script is run by SSM.

- sudo systemctl restart nginx restarts nginx so that it is serving the most recent static files.

- sudo systemctl restart gunicorn restarts Gunicorn so that it picks up any changes to the backend code.
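One thing to keep in mind with a deploy script is that a plain list of commands keeps going even if one of them fails. Below is a sketch of a variant that stops at the first failing step and can print the steps without running them. It is not the script from this guide: `run`, `deploy`, and the `DRY_RUN` variable are names I made up, it restarts Gunicorn (the app server set up earlier in this guide), and it assumes you call it from the project root with the right Python environment active.

```shell
#!/bin/sh
# Sketch only: deploy steps chained with && so a failure stops the rest.
# run, deploy and DRY_RUN are hypothetical names for this example.

# run CMD...: print the command when DRY_RUN=1, otherwise execute it
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# deploy: pull, install, migrate, collect static files, restart services
deploy() {
    run git pull origin master &&
    run pip3 install -r requirements.txt &&
    run python3 manage.py migrate --noinput &&
    run python3 manage.py collectstatic --noinput &&
    run sudo systemctl restart gunicorn &&
    run sudo systemctl restart nginx
}
```

Running `DRY_RUN=1 deploy` only lists the six steps, which is a cheap way to review the order before letting it touch the server.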

Now that we have a deployment script we are ready for the last part where we define our .yml file!

GitHub Actions requires us to define our workflow in a .yml file, and that is where we will call AWS SSM Send-Command.

Start by going into your EC2 instance and at the root of your project create these two directories:

% mkdir -p .github/workflows/

This is where our .yml file will live. Create the file with:

% sudo vim .github/workflows/deploy.yml

Use the example to fill the contents of the .yml file

name: Deploy using AWS SSM Send-Command

on:

  push:

    branches: [master]

jobs:

  start:

    runs-on: ubuntu-latest

    steps:

      - uses: actions/checkout@v2

      - name: AWS SSM Send Command

        uses: peterkimzz/aws-ssm-send-command@<VERSION>

        with:

          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}

          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

          aws-region: us-east-1

          instance-ids: ${{ secrets.INSTANCE_ID }}

          comment: Deploy the master branch

          working-directory: /home/ubuntu/<YOUR PROJECT DIRECTORY>

          command: /bin/sh ./deploy.sh

Remember the Github Secrets we set in the repo?

This is where we use them. The Secrets we set become the values here for aws-access-key-id, aws-secret-access-key, and instance-ids which allows AWS SSM Send-Command to access our EC2 instance.

There are 3 parts of the .yml file you want to make sure you change for your project:

- The aws-region should be the same Region as where you have created your EC2 instance. (If you do not know check the top left of your AWS EC2 Console to confirm the Region you are in).

- working-directory should be the working directory where you created the deploy.sh script.

- command should be the directions you would like to run. For our case, we created a simple script so that it does not complicate the command line here but you can add as many commands here as long as you are following the .yml syntax.

Once the file is set, go ahead and git add, commit, and push to your repo and the magic should start!

I struggled with getting this to work and it took several tries. I found that if for whatever reason there are problems with your Github Actions deployment it helps to look through the errors. To find the errors go to your Github project repo:

- Go to Actions

- You should see a list of workflows or commits you have made since creating the .yml file.

- Click the most recent one.

- Click on the start link which will show you each step of the job ran.

- Click through to the command with a red X and find the errors there.
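When the errors in the Actions log are not enough, it can help to take GitHub out of the picture and send the command yourself with the AWS CLI. The sketch below is my approximation of what the action does, not its actual source: it assumes the standard AWS-RunShellScript document, a configured AWS CLI, and `send_deploy` is a helper name I made up.

```shell
#!/bin/sh
# Sketch only: run deploy.sh on the instance through SSM from your machine.
# send_deploy is a hypothetical helper for this example.

# send_deploy INSTANCE_ID WORKING_DIRECTORY: prints the SSM command ID
send_deploy() {
    # AWS-RunShellScript takes its inputs as JSON lists of strings
    params=$(printf '{"workingDirectory":["%s"],"commands":["/bin/sh ./deploy.sh"]}' "$2")
    aws ssm send-command \
        --document-name "AWS-RunShellScript" \
        --instance-ids "$1" \
        --comment "Manual deploy test" \
        --parameters "$params" \
        --query 'Command.CommandId' \
        --output text
}
```

With the command ID it prints, `aws ssm get-command-invocation --command-id <ID> --instance-id <YOUR-INSTANCE-ID>` should show the output of the script, which tells you whether the problem is in the workflow or on the server.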

If you do have issues feel free to reach out to me or peterkimzz by creating an issue here if you feel that you have done everything 100% and it still does not work. peterkimzz was extremely responsive and helpful when I was struggling to get this working (Thank you!).

This is an extra step if you decide to buy a domain and use it for your project (I recommend it!). There is great satisfaction in being able to tell people to go to www.your-awesome-site.com and have people see your hard work!

To get started you would need to first purchase a domain. I most commonly use Google Domains but another popular domain registrar is GoDaddy. Whichever registrar you use, make sure you have purchased the domain that you want!

There are two things we would need to configure to connect our project with our domain:

- Create records on our domain DNS with our registrar.

- Configure NGINX on our EC2 instance to recognize our domain.

Let's start with configuring our DNS with records:

- Go to the DNS portion of your registrar.

- Find where you can create custom resource records.

Set the records like so:

| Name | Type | TTL | Data |

|---|---|---|---|

| @ | A | 1h | YOUR-ELASTIC-IP-ADDRESS |

| www | CNAME | 1h | your-awesome-site.com |

Once that is set we are good to move on to configure our Web Server!
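Before touching the web server, you can check from your local machine whether the records have propagated. This is a small sketch that assumes `dig` is installed; `dns_points_to` is a helper name of mine and the domain and IP are placeholders.

```shell
#!/bin/sh
# Sketch only: check that a name resolves to the expected IPv4 address.
# dns_points_to is a hypothetical helper for this example.

# dns_points_to NAME EXPECTED_IP
dns_points_to() {
    # For a CNAME, dig +short lists the target first and the address last
    resolved=$(dig +short "$1" A | tail -n 1)
    [ "$resolved" = "$2" ]
}
```

For example: `dns_points_to your-awesome-site.com <YOUR-ELASTIC-IP> && echo "root ok"`, and the same with `www.your-awesome-site.com` for the CNAME record.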

Let's configure our web server, in our case NGINX, to recognize our domain!

Start by going to your EC2 Instance and opening the NGINX config file we created earlier:

% sudo vim /etc/nginx/sites-available/<YOUR-PROJECT-NAME>

Update the first section of the config file like so:

server {

server_name <YOUR-ELASTIC-IP> your-awesome-site.com www.your-awesome-site.com;

...

We are simply adding our root domain and our sub domain (In our case with the prefix www) to our NGINX config.

Next, as we always should after changing our NGINX config file, run:

% sudo systemctl restart nginx

And voila! You are done! Note: Sometimes the domain change does not happen immediately. In my experience it can take anywhere from almost no time to a few hours. If the changes haven't happened after 48 hours, double check your work to see if there are any typos or errors.

SSL, or Secure Sockets Layer (today superseded by TLS), is what allows HTTPS requests to happen. Our project currently uses HTTP requests, which can be dangerous for the potential users of your web app. Therefore I always recommend making sure that you are using HTTPS.

For details on why to choose HTTPS over HTTP, this article is a pretty good deep dive.

Alright! We will be working with Certbot, a tool from the EFF that requests certificates from Let's Encrypt (letsencrypt.org), a non-profit certificate authority that issues SSL certificates. They are widely used and, best of all, FREE!

On your browser go to https://certbot.eff.org/instructions.

There select the Software and Operating System (OS) you are using. For our example, we are using NGINX and Ubuntu 18.04 LTS (bionic).

Go to your EC2 Instance and follow the instructions until they ask you to run the command:

% sudo certbot --nginx

After running the command certbot will prompt you with several options, the first being:

Which names would you like to activate HTTPS for?

If your NGINX config is set up correctly, it should show both your root domain and the www subdomain, like so:

1: your-awesome-site.com

2: www.your-awesome-site.com

I usually recommend just hitting Enter to activate HTTPS for both because, why not?!

The next prompt would be:

Please choose whether or not to redirect HTTP traffic to HTTPS, removing HTTP access.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

1: No redirect - Make no further changes to the web server configuration.

2: Redirect - Make all requests redirect to secure HTTPS access. Choose this for

new sites, or if you're confident your site works on HTTPS. You can undo this

change by editing your web server's configuration.

I typically go with 2: Redirect as it seems to make more sense to have all requests be in HTTPS.

There are probably situations where it is not the best option but for our case we will go with this one.

Afterwards, Certbot will go ahead and make a few changes to our NGINX config file.

Note: Once your site is using HTTPS, double check your API calls and make sure that they use https:// rather than http://. This may be an unnecessary precaution but I have had issues with this in the past.

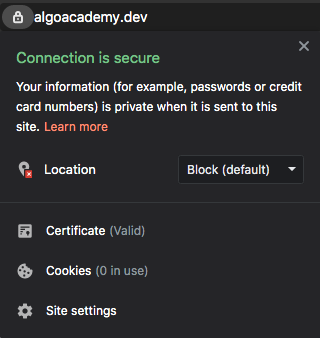

After a few moments, check out your domain at your-awesome-site.com.

Check to make sure that there is a lock icon next to your site.
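Besides the lock icon, you can confirm the redirect from the command line. The sketch below assumes you picked the Redirect option in Certbot and that curl is available; `redirects_to_https` is a helper name I made up.

```shell
#!/bin/sh
# Sketch only: check that plain HTTP answers with a redirect to HTTPS.
# redirects_to_https is a hypothetical helper for this example.

# redirects_to_https DOMAIN
redirects_to_https() {
    # Fetch only the headers, strip carriage returns, pick the Location value
    location=$(curl --silent --head "http://$1/" | tr -d '\r' |
        awk 'tolower($1) == "location:" { print $2 }')
    case "$location" in
        https://*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example: `redirects_to_https your-awesome-site.com && echo "redirect ok"`. On the server, `sudo certbot renew --dry-run` is a safe way to test that certificate renewal will work later.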

Congratulations! You have successfully deployed a web app with HTTPS!