- determine the number of servers and IP addresses you need

- determine number of private hosts first

- 10.0.0.0/8 (24-bit; Class A)

- 172.16.0.0/12 (20-bit; 16 Class B blocks)

- 192.168.0.0/16 (16-bit; 256 Class C blocks)

- use route summarization for better routing performance and management

- 192.168.0.0/24 and 192.168.1.0/24 can be summarized as 192.168.0.0/23, which spans both (the prefix is shortened by one bit)
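The summarization example above can be checked with Python's standard `ipaddress` module. This is a minimal sketch: `collapse_addresses` merges adjacent networks into the fewest covering supernets, which is the same calculation you do by hand when summarizing routes.

```python
import ipaddress

# The two adjacent /24 networks from the note above.
networks = [
    ipaddress.ip_network("192.168.0.0/24"),
    ipaddress.ip_network("192.168.1.0/24"),
]

# collapse_addresses merges adjacent/overlapping networks into the fewest
# possible supernets; here the two /24s collapse into a single /23.
summary = list(ipaddress.collapse_addresses(networks))
print(summary)  # [IPv4Network('192.168.0.0/23')]

# The summary route covers every address in both original networks.
assert all(net.subnet_of(summary[0]) for net in networks)
```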

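| Prefix | Addresses |
|---|---|
| /29 | 8 (2^3) |
| /28 | 16 (2^4) |
| /27 | 32 (2^5) |
| /26 | 64 (2^6) |
| /25 | 128 (2^7) |
| /24 | 256 (2^8) |
| /23 | 512 (2^9) |
| /22 | 1,024 (2^10) |
| /21 | 2,048 (2^11) |
| /20 | 4,096 (2^12) |
| /19 | 8,192 (2^13) |
| /18 | 16,384 (2^14) |
| /17 | 32,768 (2^15) |
| /16 | 65,536 (2^16) |
| /15 | 131,072 (2^17) |
| /14 | 262,144 (2^18) |
| /13 | 524,288 (2^19) |
| /12 | 1,048,576 (2^20) |
| /11 | 2,097,152 (2^21) |
| /10 | 4,194,304 (2^22) |
| /9 | 8,388,608 (2^23) |
| /8 | 16,777,216 (2^24) |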
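Every row of the table follows from a single formula: an IPv4 `/n` block contains 2^(32 − n) addresses. A small sketch that regenerates the table:

```python
def addresses_in_prefix(prefix_len: int) -> int:
    """Total IPv4 addresses in a /prefix_len block: 2^(32 - prefix_len)."""
    if not 0 <= prefix_len <= 32:
        raise ValueError("IPv4 prefix length must be between 0 and 32")
    return 2 ** (32 - prefix_len)

# Reproduce the table above, from /29 down to /8.
for prefix in range(29, 7, -1):
    print(f"/{prefix}: {addresses_in_prefix(prefix):,}")
```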

- Authentication (AuthN) and Authorization (AuthZ)

- who can do what on which resource

- types

- Google Account

- Service Account

- Google Groups

- G Suite (now Google Workspace) domain

- Cloud Identity Domain

- roles

- Primitive

- Predefined (more granular)

- Custom (most granular)

- IAM policy

- bindings consist of members and roles

- identity (who)

- role (can do what)

- resource (on which resource)

- hierarchy

- Organization

- Folder

- Project

- Resources


- policies are inherited from the top down: the effective policy on a resource is the union of the policy set on it and the policies of all its ancestors
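Inheritance as a union can be modeled by walking from a resource up to the organization and collecting every binding on the way. This is a simplified sketch. The hierarchy, members and bindings are hypothetical, and it ignores IAM deny policies and conditions.

```python
# Hypothetical hierarchy: each node points to its parent (None at the top).
PARENT = {
    "org": None,
    "folder-prod": "org",
    "project-web": "folder-prod",
}

# Hypothetical bindings set directly on each node: (member, role) pairs.
BINDINGS = {
    "org": {("group:netadmins@example.com", "roles/compute.networkAdmin")},
    "folder-prod": {("user:alice@example.com", "roles/compute.securityAdmin")},
    "project-web": {("serviceAccount:web@example.iam.gserviceaccount.com",
                     "roles/compute.networkViewer")},
}

def effective_bindings(node):
    """Union of the node's own bindings and those of all its ancestors."""
    result = set()
    while node is not None:
        result |= BINDINGS.get(node, set())
        node = PARENT[node]
    return result

for member, role in sorted(effective_bindings("project-web")):
    print(member, role)
```

A binding granted at the organization therefore shows up on every project beneath it. Granting less at a lower level cannot take it away.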

- Organization

- key people

- OrgAdmin

- NetworkAdmin (networking - usually at org level)

- KNOW PERMISSIONS AND WHAT EACH ALLOWS YOU TO DO

- SecurityAdmin (security policies - usually at org level)

- ComputeInstanceAdmin


Defining IAM Policies

- selecting the default policies that enforce organization standards across all resources

Determining the Resource Hierarchy

- create the structure of how roles will be assigned to resources

Delegating Responsibility

- select the team members that will be assigned the IAM roles to implement the configuration of Network and Security

Key roles:

`roles/compute.networkAdmin` (Compute Network Admin): over 200 permissions for Compute Engine network resources

- doesn't create or modify firewall rules or SSL certificates, and doesn't assign IAM roles

`roles/compute.securityAdmin` (Compute Security Admin): over 50 permissions for compute security

- firewall rules, SSL certificates

`roles/compute.xpnAdmin` (Compute Shared VPC Admin): 14+ permissions

- administer shared VPC networks

`roles/compute.networkViewer` (Compute Network Viewer): 14+ permissions

- read-only access to Compute Engine networking

- commonly granted to service accounts

You can view roles and their assignees in the Console or with the gcloud SDK:

- `gcloud iam roles` (`copy`, `create`, `delete`, `describe`, `list`, `undelete`, `update`)

- `gcloud iam roles list --filter="network"`

- `gcloud iam roles describe <paste>` (paste a role name from the list; shows the permissions it includes)

- `gcloud iam list-grantable-roles` (lists IAM roles grantable on a resource)

- `gcloud iam list-testable-permissions` (lists IAM permissions testable on a resource)

Creating Custom Roles

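- need `getIamPolicy` and `setIamPolicy` permissions to set IAM roles

- created at the project (or organization) level

- when a service account is attached to a Compute Engine instance, its IAM roles govern its permissions; access scopes are the legacy mechanism (best practice: set the `cloud-platform` scope and restrict access with IAM)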

- granting the `serviceAccountUser` role to users allows them to impersonate the service account and act on resources as it (similar to sudo; the underlying permission is `iam.serviceAccounts.actAs`), so you need to understand how to audit service accounts

Commands in the gcloud SDK:

- `gcloud iam service-accounts list` (optionally with `--filter="serviceAccounts"`)

- `gcloud iam service-accounts disable <email-address>`

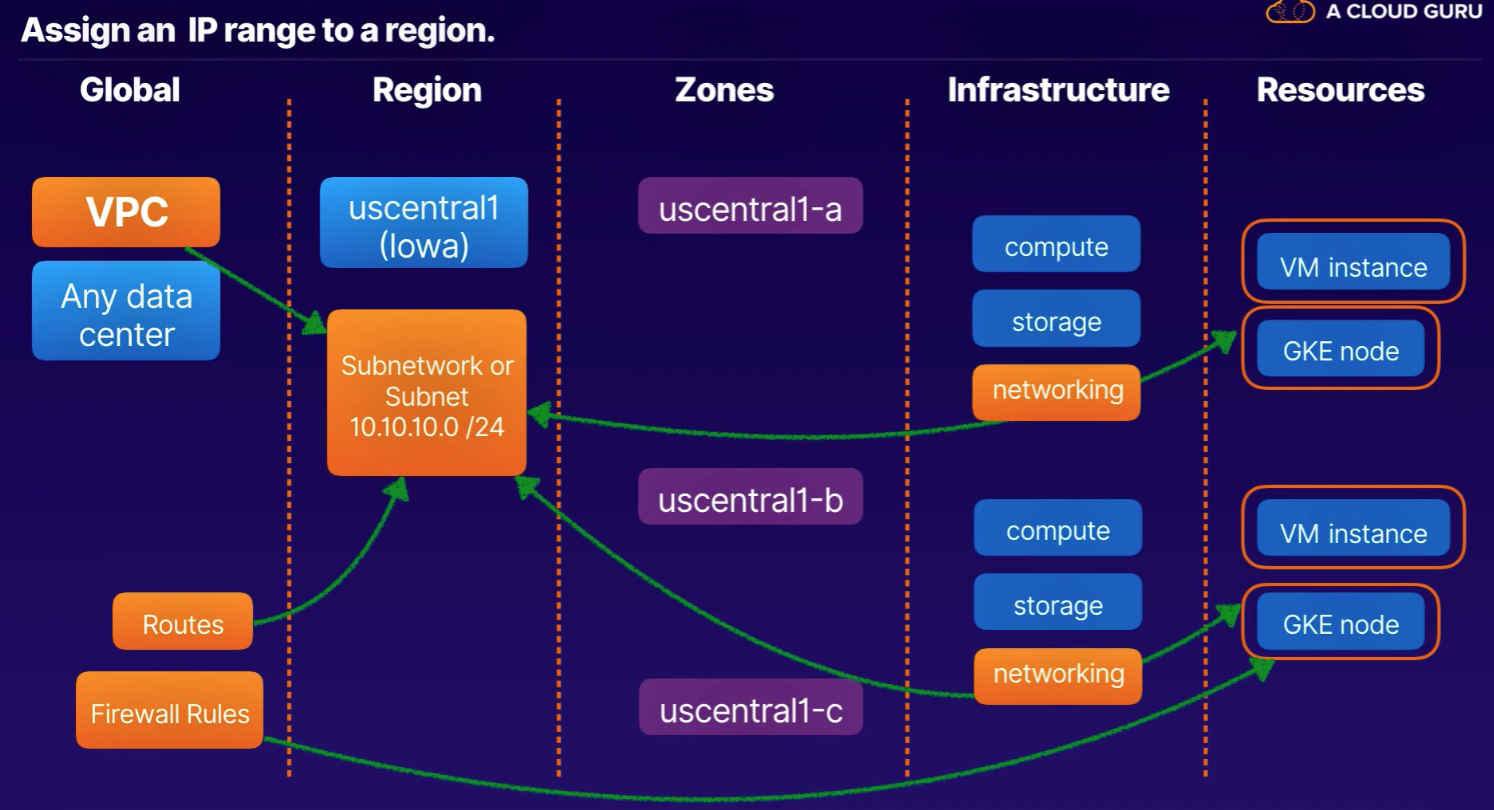

GCP computing architectures meet you where you are. All regions are connected by a private global network.

- Global

- Region

- Zones

- Infrastructure

- Resources


Ingress to GCP

- Premium Tier: Traffic from your users enters Google's network at a location nearest to them.

- Standard Tier: Traffic from your users enters Google's network through peering, ISP, or transit networks in the region where you have deployed your GCP resources.

Egress from GCP

- Premium Tier: Egress traffic is sent through Google's network backbone, leaving at a global edge POP closest to your users.

- Standard Tier: Egress traffic is sent to the internet via a peering or transit network, local to the GCP region from which it originates.

Components

- Mode (automatic or custom)

- Subnet

- Name

- Region

- IP Range

- Primary (all subnets have only 1 primary range)

- Secondary (subnets may have up to 20 secondary ranges; allows for separation of infra (VM) from containers or multiple services running on VM)

- Alias IP (associating more than one IP address to a network interface)

- allows one node on a network to have multiple connections to a network, each serving a different purpose

- can be assigned from either primary, or secondary subnet ranges

Default, Auto, Custom VPCs

- Default (named "default" and uses Auto Mode)

- Auto (VPC assigns predefined range in every region)

- each subnet starts with a `/20` range and can be expanded to a `/16` range (65,536 addresses)

- Custom (recommended in production - you completely control)

- minimize collision risk (connecting, hybrid, peering, etc.)

- cannot be changed to auto mode afterward (an auto mode network can be converted to custom mode, but not the reverse)

- starts with a `/12` range and can be expanded to a `/8` range (to be confirmed)

Reserved IPs

- the first 2 and last 2 addresses of each subnet's primary IPv4 range are reserved by GCP (examples below assume a /24)

- Network (xxx.xxx.xxx.0)

- Gateway (xxx.xxx.xxx.1) - don't respond to ping traffic

- Second-to-last (xxx.xxx.xxx.254) - reserved for potential future use

- Broadcast (xxx.xxx.xxx.255)
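The four reserved addresses can be derived from any subnet range, not only a /24. The sketch below assumes the standard four reservations in a primary IPv4 range. The subnet `10.128.0.0/24` is just an example value.

```python
import ipaddress

def gcp_reserved_and_usable(cidr: str):
    """Return the four addresses GCP reserves in a subnet's primary range
    and the number of addresses left for resources."""
    net = ipaddress.ip_network(cidr)
    reserved = {
        "network": net.network_address,
        "gateway": net.network_address + 1,
        "second_to_last": net.broadcast_address - 1,
        "broadcast": net.broadcast_address,
    }
    usable = net.num_addresses - len(reserved)
    return reserved, usable

reserved, usable = gcp_reserved_and_usable("10.128.0.0/24")
for name, addr in reserved.items():
    print(f"{name}: {addr}")
print("usable:", usable)  # 252
```

The same function shows why the smallest subnet, a /29, leaves only 4 usable addresses.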

DHCP, DNS, Metadata

- Internal IPs

- IP address allocated to VMs by DHCP from regional subnetworks

- DHCP renews every 24 hours

- Hostname and IP address are registered with internal DNS

- Alias IP: additional internal IPs assigned to a VM's network interface alongside its primary internal IP

- External IPs

- External IP address assigned from a pool of ephemeral IPs managed by GCP

- DHCP renews every 24 hours

- the VM's OS doesn't know about the external IP; the VPC maps it to the internal IP

- mapped by Metadata server

- Allows communications from outside the project

- Metadata (Internal DNS)

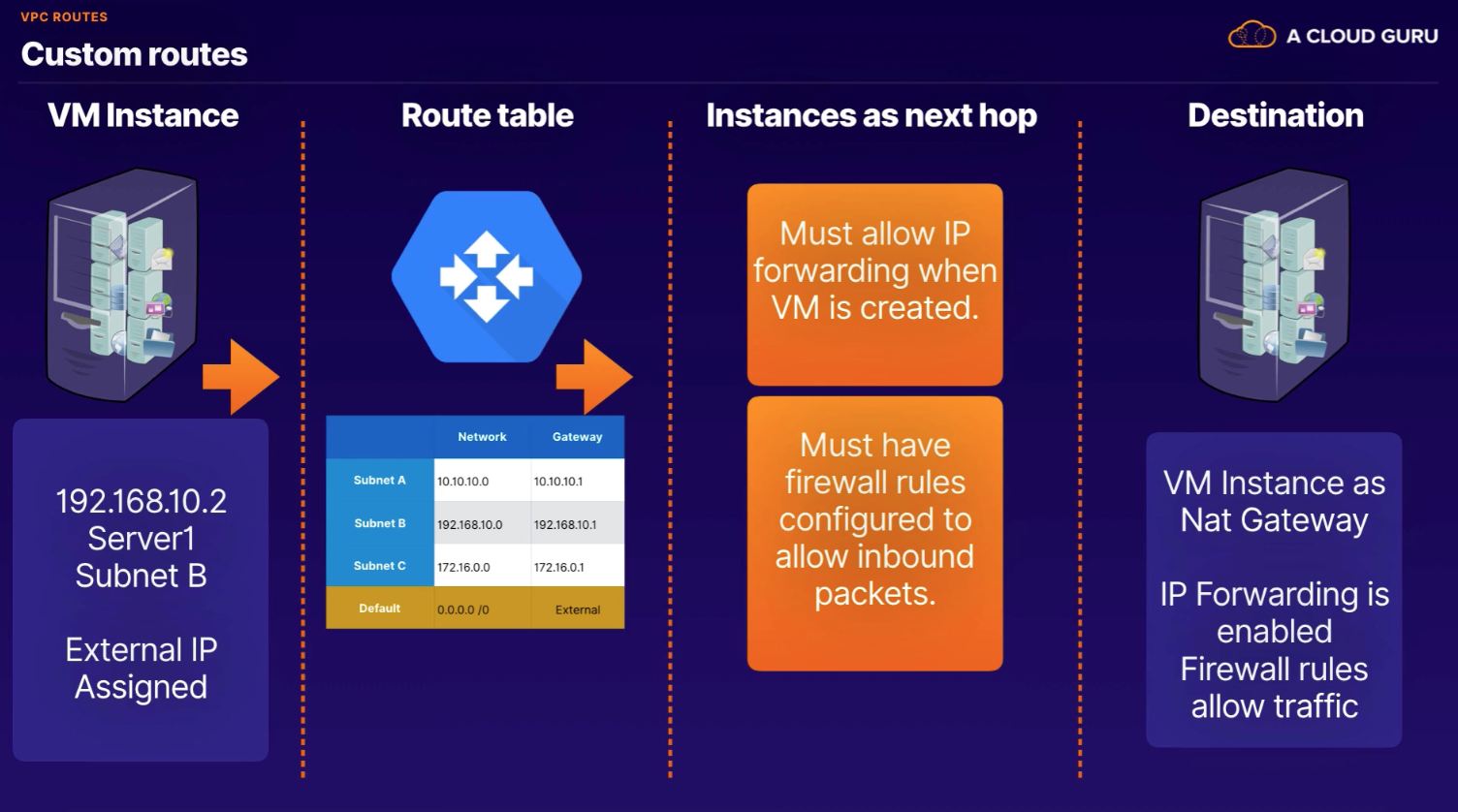

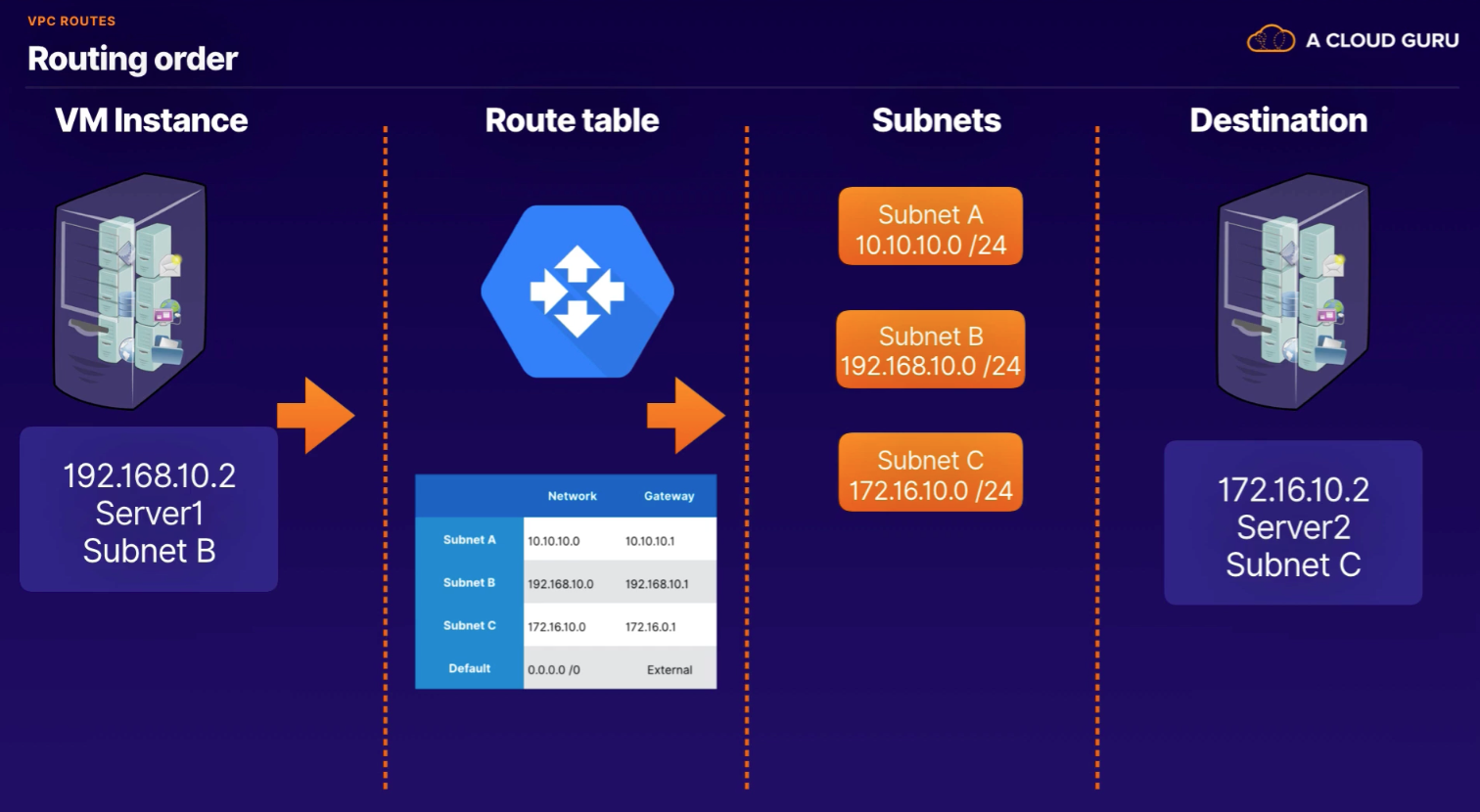

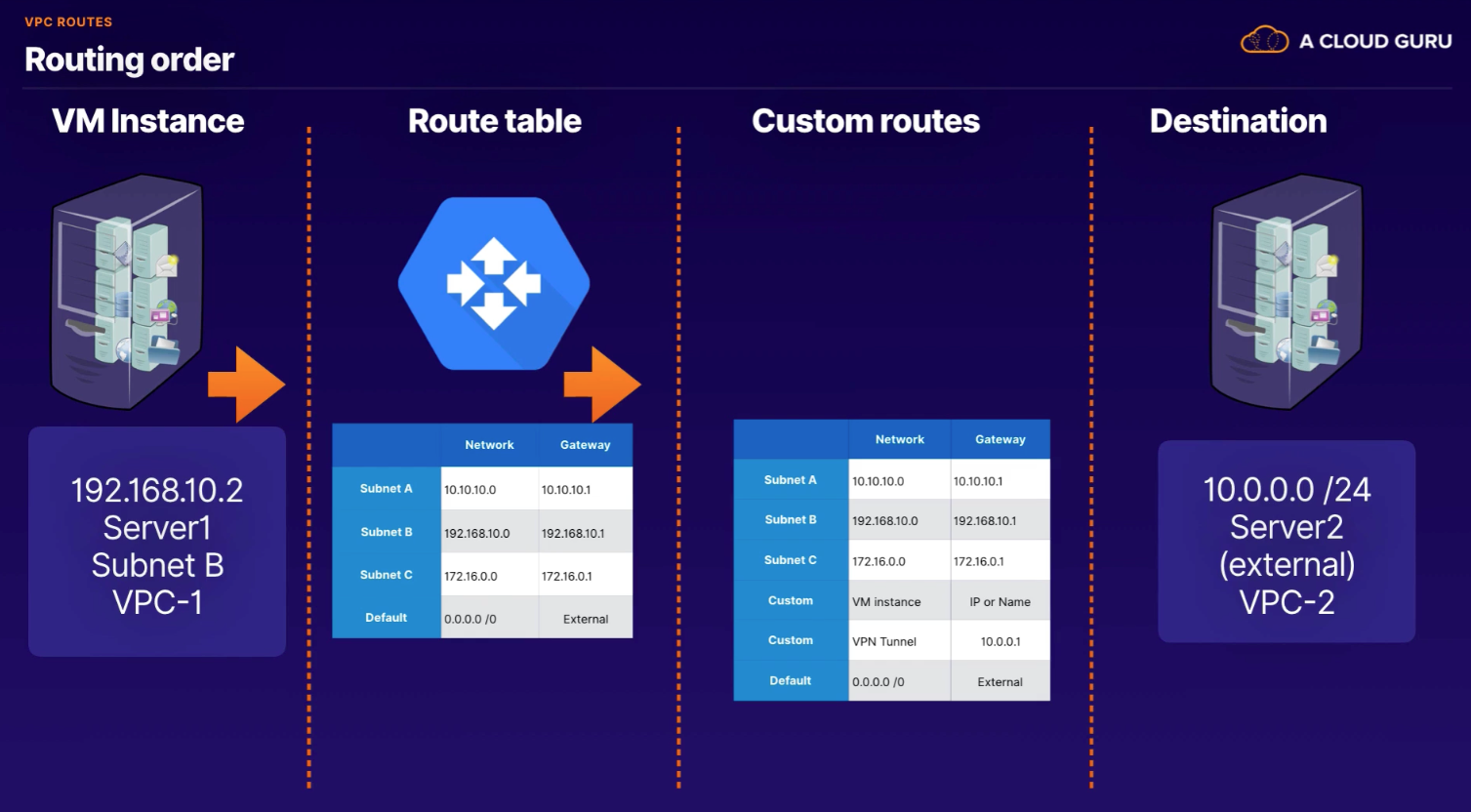

A route is the path taken to get from a starting point to a destination. In GCP, a route consists of a single destination CIDR range and a single next hop.

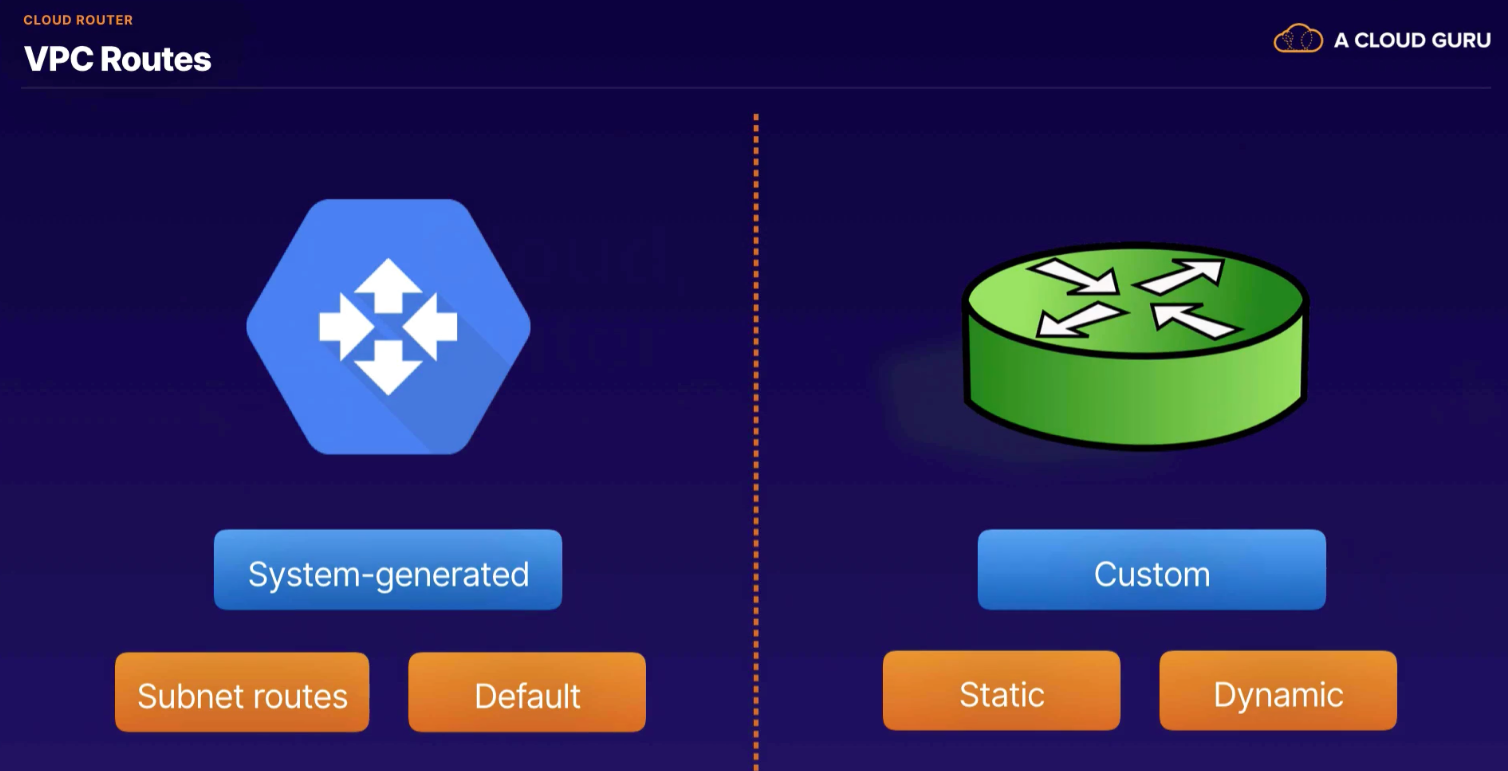

System-generated routes

- Subnet routes - created by GCP any time a primary or secondary subnet is added to a VPC

- Default route: whenever a VPC network is created, GCP creates a default route (`0.0.0.0/0`) to the default internet gateway

Custom routes

Routing Priority

- Subnet routes

- Custom routes

- Default routes

- Drops the packet

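Route selection can be sketched as a lookup that picks the most specific matching destination, breaks ties by the lowest priority value, and drops the packet when nothing matches. This is a simplified model under stated assumptions. It ignores ECMP, policy-based routes, and the rule that subnet routes cannot be overridden. The route table is hypothetical.

```python
import ipaddress

# Hypothetical route table: (destination CIDR, priority, next hop).
ROUTES = [
    ("10.128.0.0/20", 0, "subnet-route"),
    ("10.0.0.0/8", 1000, "vpn-tunnel-1"),
    ("0.0.0.0/0", 1000, "default-internet-gateway"),
]

def select_route(dest_ip: str):
    """Pick the most specific matching route, breaking ties by the lowest
    priority value. Return None when nothing matches (packet is dropped)."""
    ip = ipaddress.ip_address(dest_ip)
    candidates = [
        (ipaddress.ip_network(cidr), prio, hop)
        for cidr, prio, hop in ROUTES
        if ip in ipaddress.ip_network(cidr)
    ]
    if not candidates:
        return None
    # Longest prefix first, then lowest priority number.
    best = max(candidates, key=lambda r: (r[0].prefixlen, -r[1]))
    return best[2]

print(select_route("10.128.3.7"))  # subnet-route
print(select_route("10.200.0.1"))  # vpn-tunnel-1
print(select_route("8.8.8.8"))     # default-internet-gateway
```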

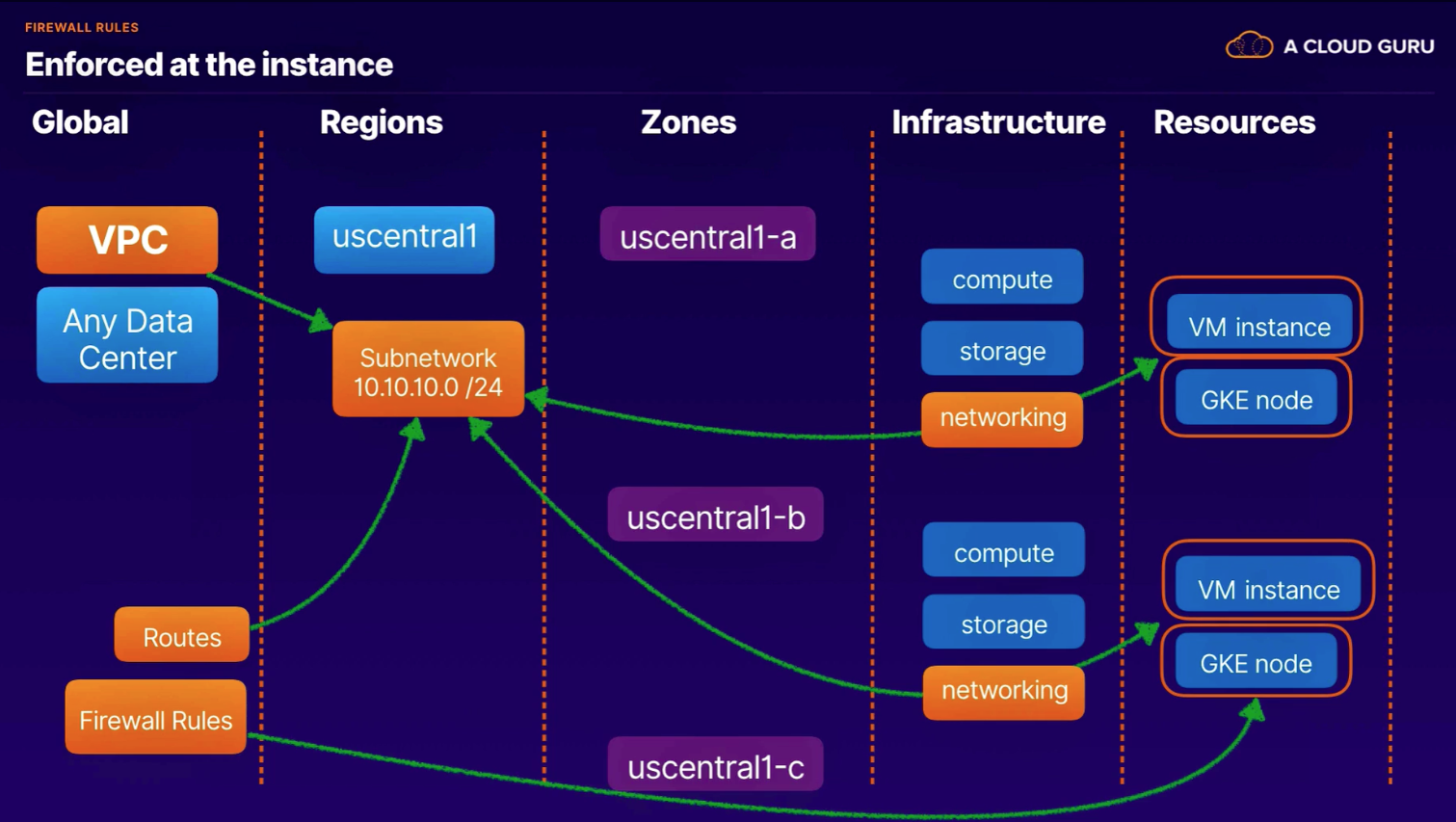

Distributed virtual firewall controlling ingress and egress traffic for a single VPC.

Implied rules

- ingress: deny all by default (not logged; to log it, create an equivalent rule with a higher priority)

- egress: allow all by default (cannot view in logs)

- priority lower than all other rules, not visible, not removable

Components

- Priority (0 - 65535; 0 is highest priority; exits on first match)

- Action on match (allow or deny; one or other)

- Direction (ingress or egress)

- Protocols and ports (TCP, UDP, ICMP, IPIP)

- Source / Destination (source IP range, destination IP range)

- Targets - what to take action on (all instances, target tags, service accounts)

- Secondary filter - narrow rules (target tags, service accounts)

- Enforcement status (enabled or disabled)

- enforced at the instance level
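Ingress evaluation can be modeled as follows. Among enabled rules that match, the one with the lowest priority number wins. At equal priority a deny beats an allow. If nothing matches, the implied deny-all ingress rule applies. This is a simplified sketch: the `Rule` class is hypothetical, and it omits target tags, service accounts and egress.

```python
import ipaddress
from dataclasses import dataclass

@dataclass
class Rule:
    name: str
    priority: int        # 0 (highest) .. 65535 (lowest)
    action: str          # "allow" or "deny"
    source: str          # source CIDR for an ingress rule
    protocol: str        # e.g. "tcp"
    ports: tuple         # destination ports; empty tuple = all ports
    enabled: bool = True

def evaluate_ingress(rules, src_ip, protocol, port):
    """Return the action of the highest-priority matching ingress rule.
    At equal priority, deny wins; with no match, the implied deny applies."""
    ip = ipaddress.ip_address(src_ip)
    matches = [
        r for r in rules
        if r.enabled
        and ip in ipaddress.ip_network(r.source)
        and r.protocol == protocol
        and (not r.ports or port in r.ports)
    ]
    if not matches:
        return "deny"  # implied deny-all ingress rule (priority 65535)
    # Sort key: priority first; at equal priority, deny (False) sorts first.
    best = min(matches, key=lambda r: (r.priority, r.action != "deny"))
    return best.action

rules = [
    Rule("allow-ssh-corp", 1000, "allow", "203.0.113.0/24", "tcp", (22,)),
    Rule("deny-ssh-bad-host", 1000, "deny", "203.0.113.66/32", "tcp", (22,)),
]
print(evaluate_ingress(rules, "203.0.113.10", "tcp", 22))  # allow
print(evaluate_ingress(rules, "203.0.113.66", "tcp", 22))  # deny
print(evaluate_ingress(rules, "198.51.100.1", "tcp", 22))  # deny (implied)
```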

Summary

- A VPC network is a global object, not tied to any single region or datacenter

- To access resources in a region, we assign a subnet to that region

- Routes are created when you create your subnet

- Each region contains zones

- Resources obtain their IP addresses from a subnet

- Firewall rules are enforced at the instance level

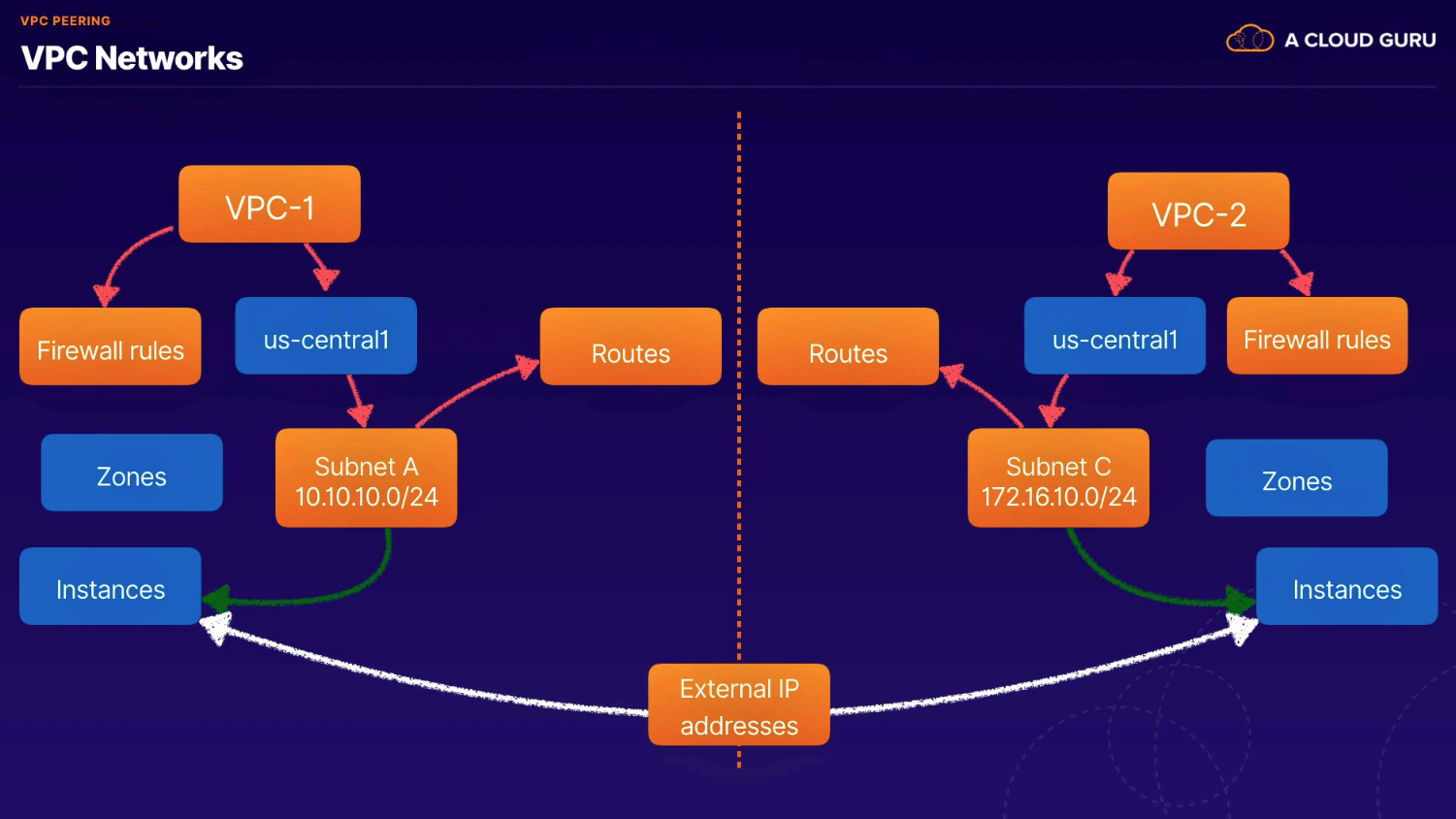

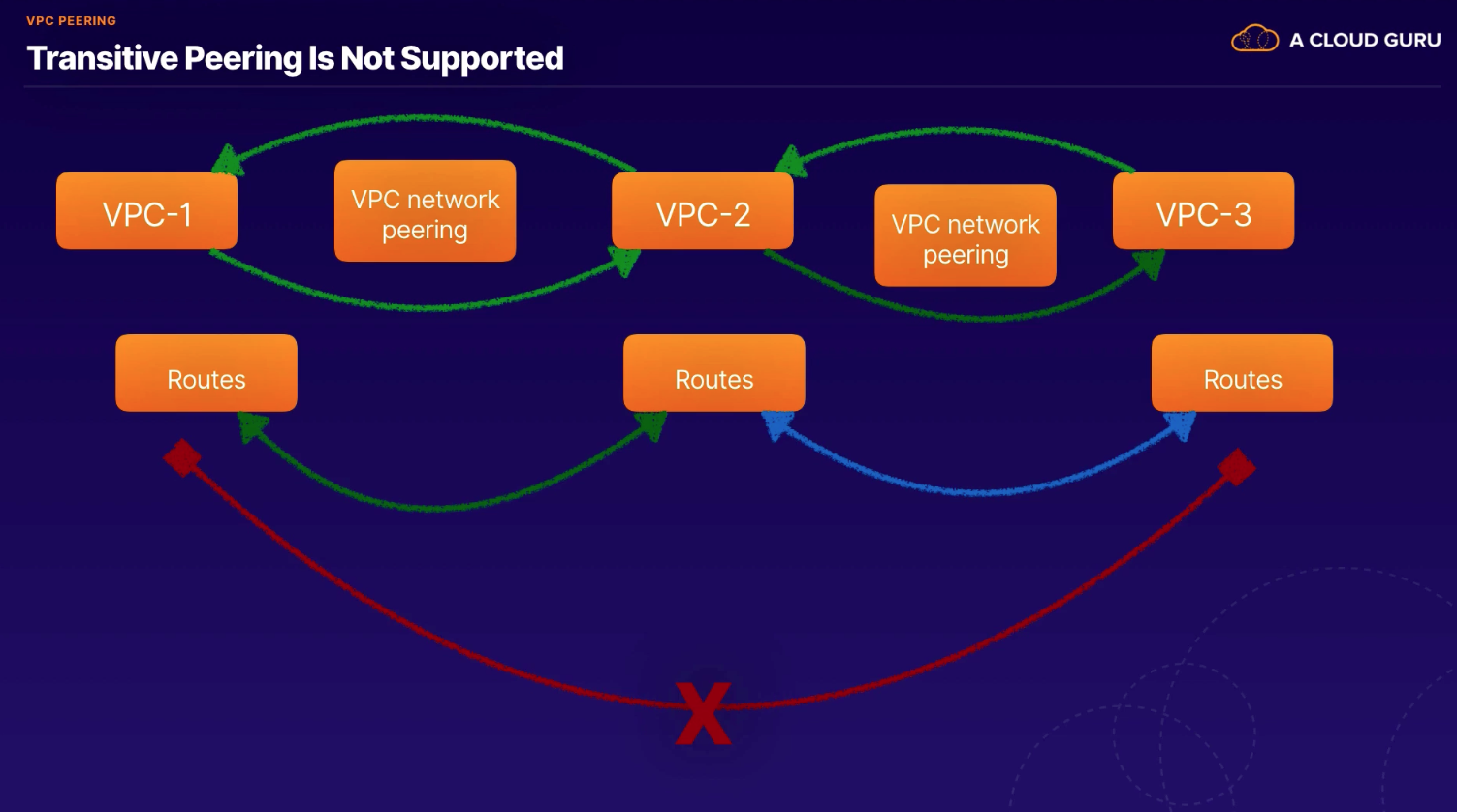

- Peering: Make or become equal or the same length.

- Allows us to build SaaS ecosystems in GCP making services available privately across different VPC networks within or across different organizations.

Overview

- Allows private communications between VPC networks without an external IP

- Connection must be established on both sides

- Subnet ranges cannot overlap

Routing

- No granular routing; use firewall rules to control traffic

- Firewall rules created separately on both sides

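- you must use firewall rules, as there is no other way to exclude VMs, subnets, etc. from the peered network

- custom routes are not exchanged automatically; you must configure import and export of custom routes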

- no transitive routing supported (A - B - C) [no A->C]
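Two of the peering rules above lend themselves to a quick check. A peering works only when it is configured on both sides and the subnet ranges don't overlap. Reachability also stops at direct peers. This is a sketch with hypothetical VPC names and ranges.

```python
import ipaddress
from itertools import combinations

# Hypothetical VPCs and their subnet ranges.
VPC_SUBNETS = {
    "vpc-a": ["10.1.0.0/16"],
    "vpc-b": ["10.2.0.0/16"],
    "vpc-c": ["10.3.0.0/16"],
}
# A peering is active only when it is configured on both sides.
PEERINGS = {("vpc-a", "vpc-b"), ("vpc-b", "vpc-a"),
            ("vpc-b", "vpc-c"), ("vpc-c", "vpc-b")}

def ranges_overlap(vpc1, vpc2):
    """True if any subnet range in vpc1 overlaps any range in vpc2."""
    return any(
        ipaddress.ip_network(x).overlaps(ipaddress.ip_network(y))
        for x in VPC_SUBNETS[vpc1] for y in VPC_SUBNETS[vpc2]
    )

def can_reach(src, dst):
    """Only directly peered networks can reach each other: no transitivity."""
    return (src, dst) in PEERINGS and (dst, src) in PEERINGS

print(can_reach("vpc-a", "vpc-b"))  # True
print(can_reach("vpc-a", "vpc-c"))  # False: A - B - C does not give A -> C
for v1, v2 in combinations(VPC_SUBNETS, 2):
    print(v1, v2, "overlap" if ranges_overlap(v1, v2) else "ok")
```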

Peered connectivity (reduced latency, increased security, decreased egress costs)

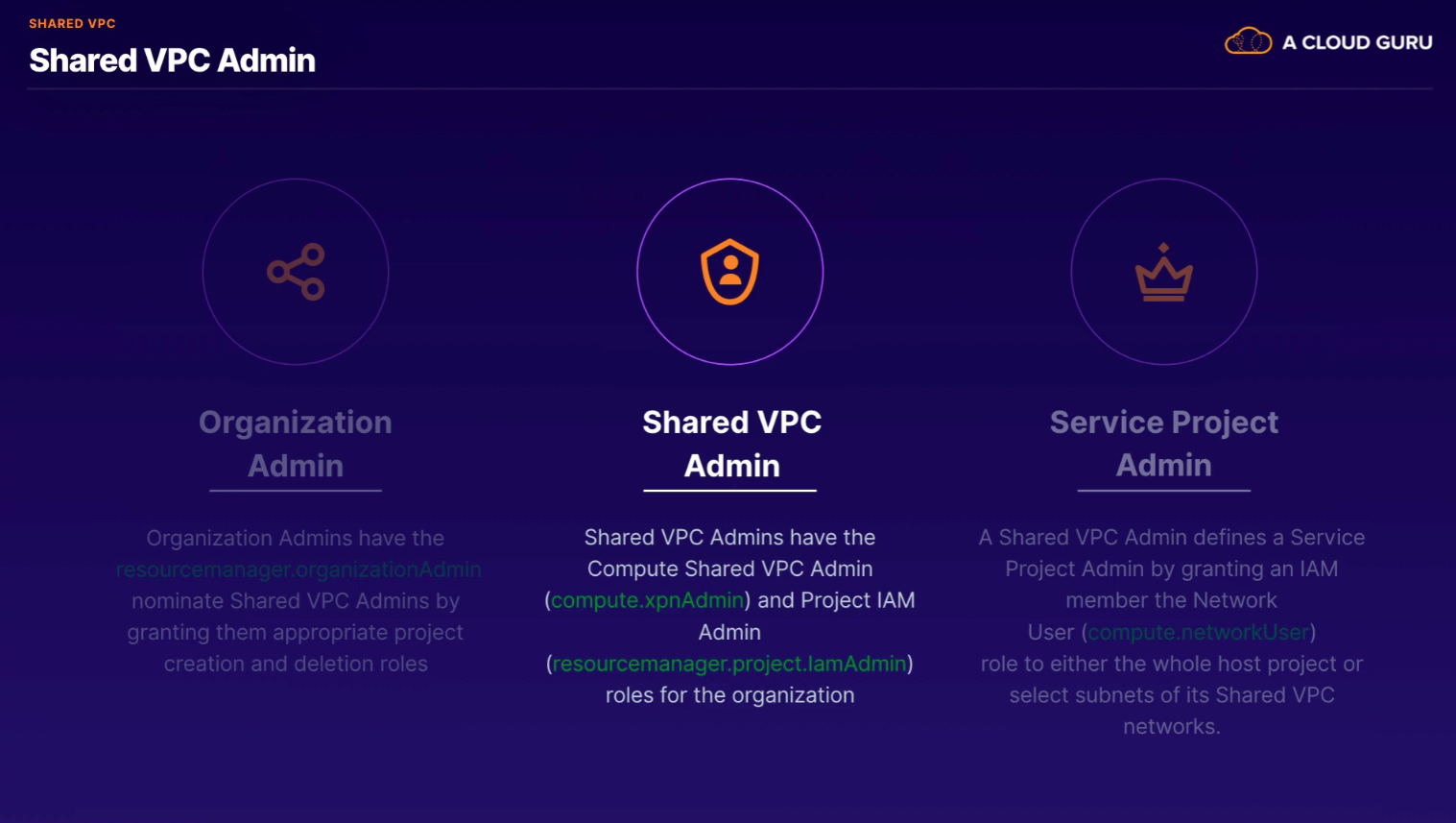

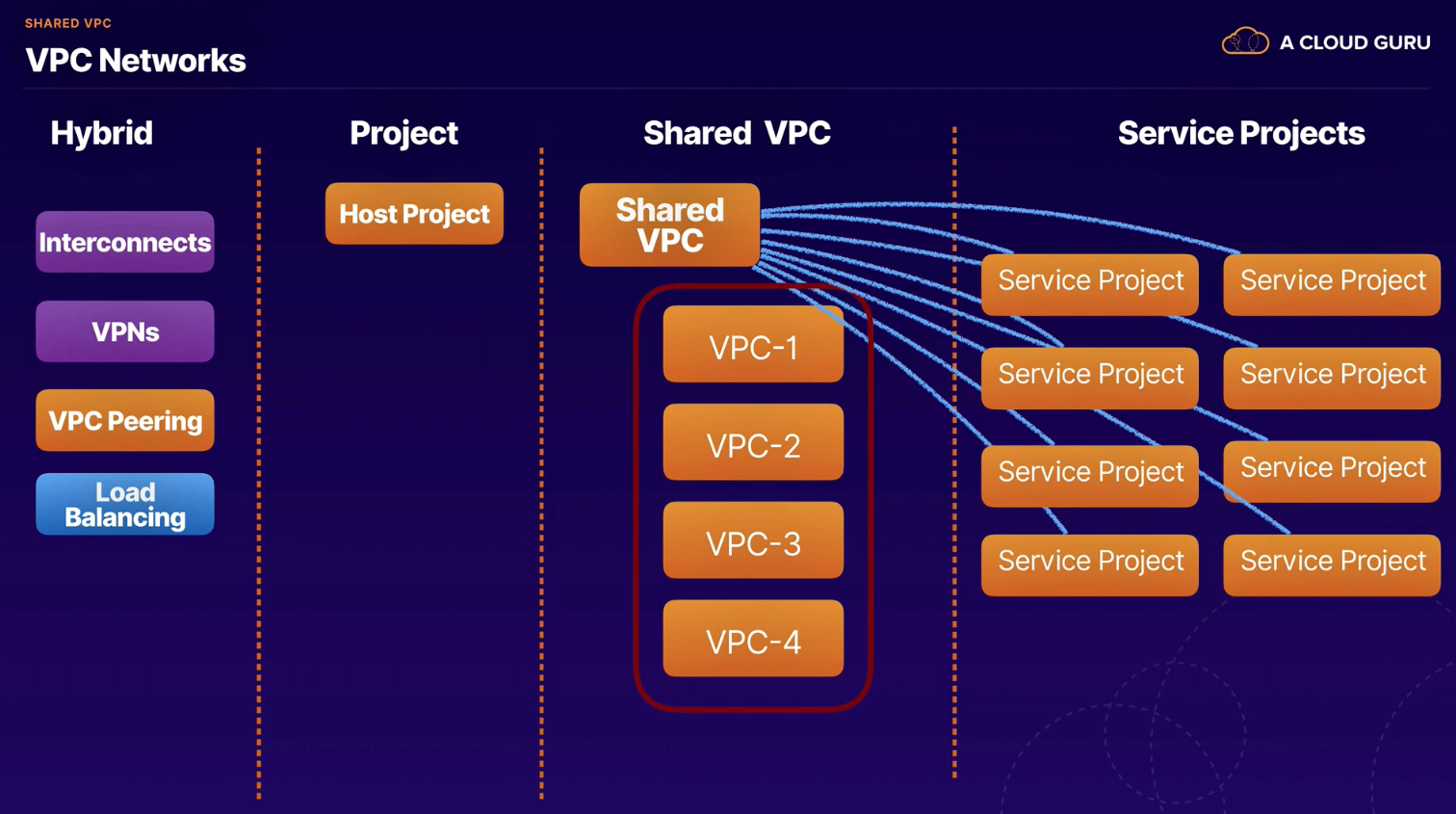

A way to centrally manage network resources within a host project and share them to any number of service projects.

Benefits

- centralizes network administration by sharing a VPC across projects

- relies on IAM roles to share network resources

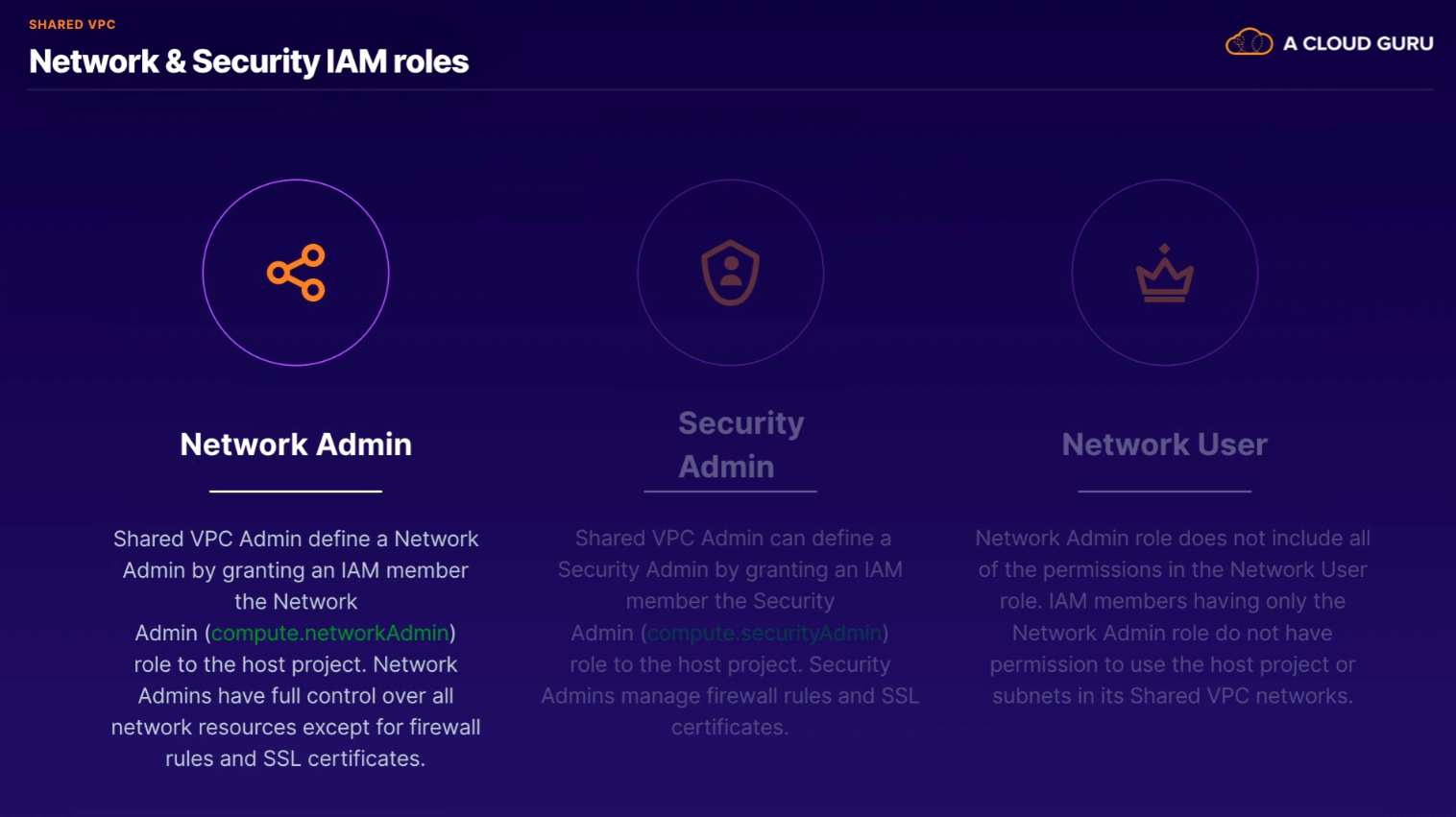

Key IAM roles that make VPC sharing possible:

- Organization Admin

- Shared VPC Admin

- Network Admin (network resources, routes, subnets)

- Security Admin (firewall rules and SSL certs)

- Service Project Admin (grant Network User role to use resources)

- Network User (assigned to all service project users, in order to consume resources)


- Required roles to create a Shared VPC network: `roles/compute.xpnAdmin` and `roles/resourcemanager.projectIamAdmin`

Concepts

- Service project resources are not required to use Shared VPC resources and can use local VPC networks in the service project (unless restricted by Org policy)

- instances in a service project must use external IP addresses defined in that same service project (even when their internal IP comes from the host project's shared subnet)

- Hybrid connectivity is best attached to the host project and shared with service projects

- Load Balancing is managed in the Service Projects

- GKE: Alias IPs must be created BEFORE service project requests a GKE Cluster

Summary

- Host project can have more than one VPC; all are shared VPC

- Host and service projects attached at project level

- Project cannot be host and service project simultaneously

- Can only connect service project to one host project at a time

- Resource objects obtain IP address information from the shared VPC network

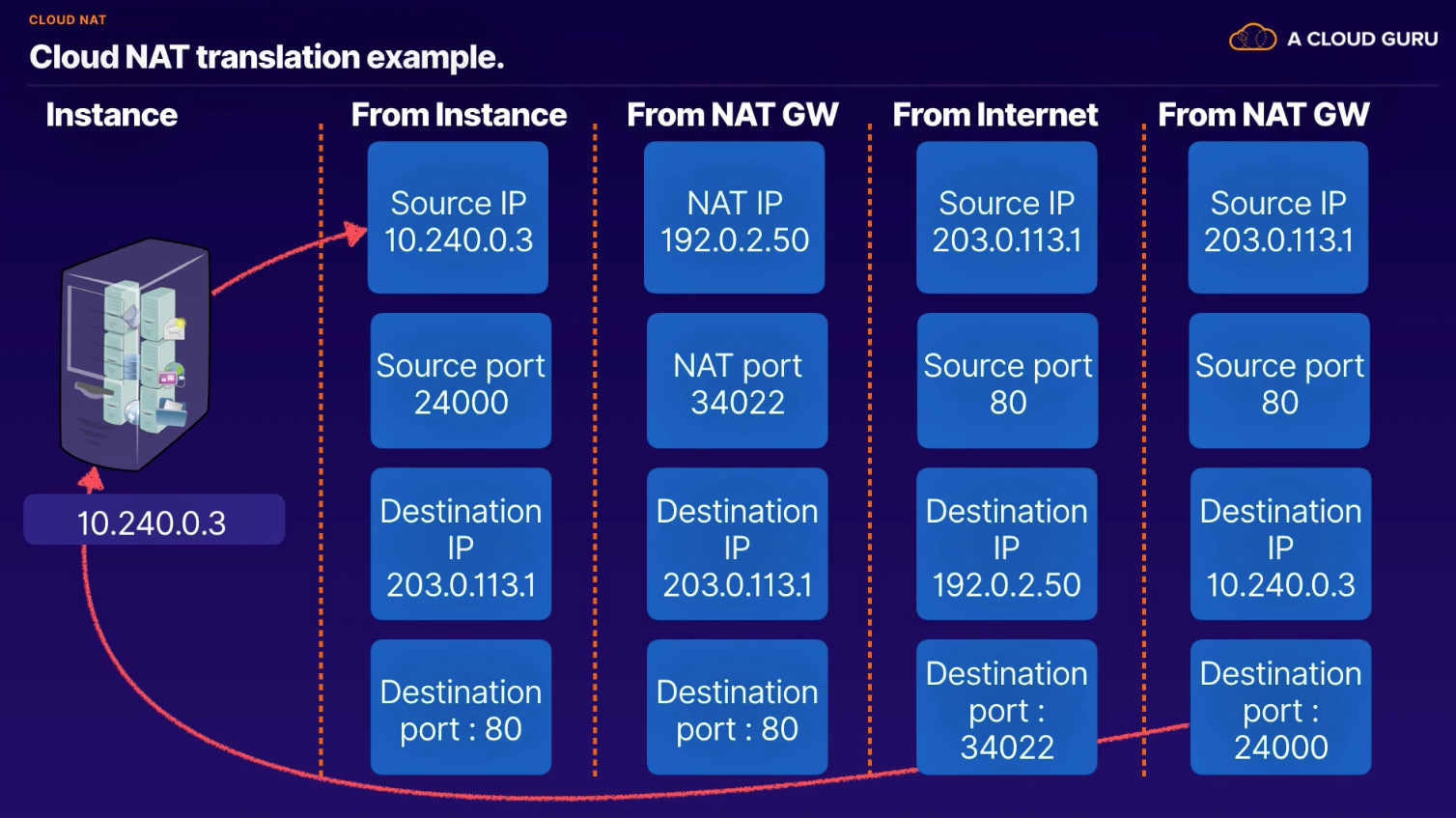

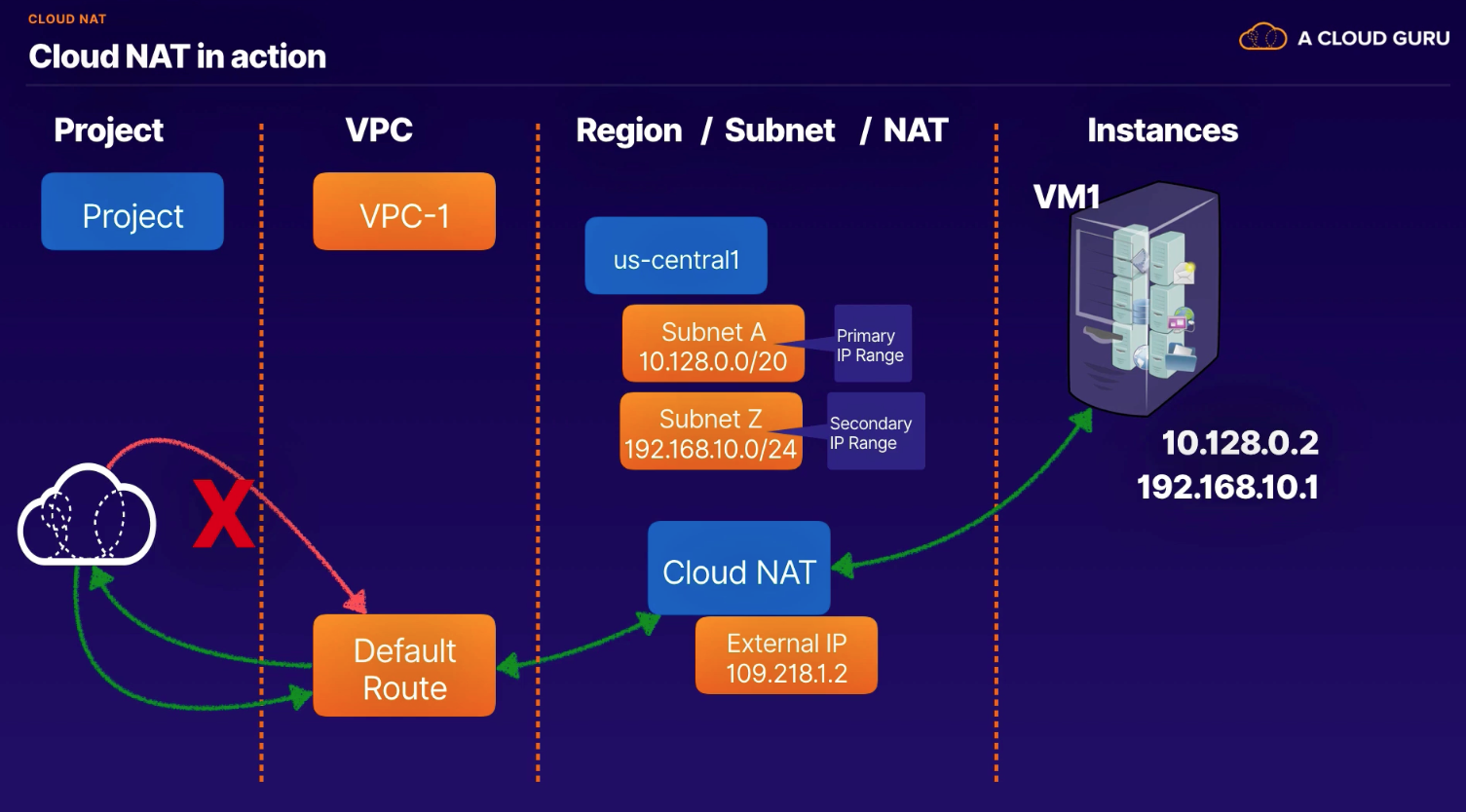

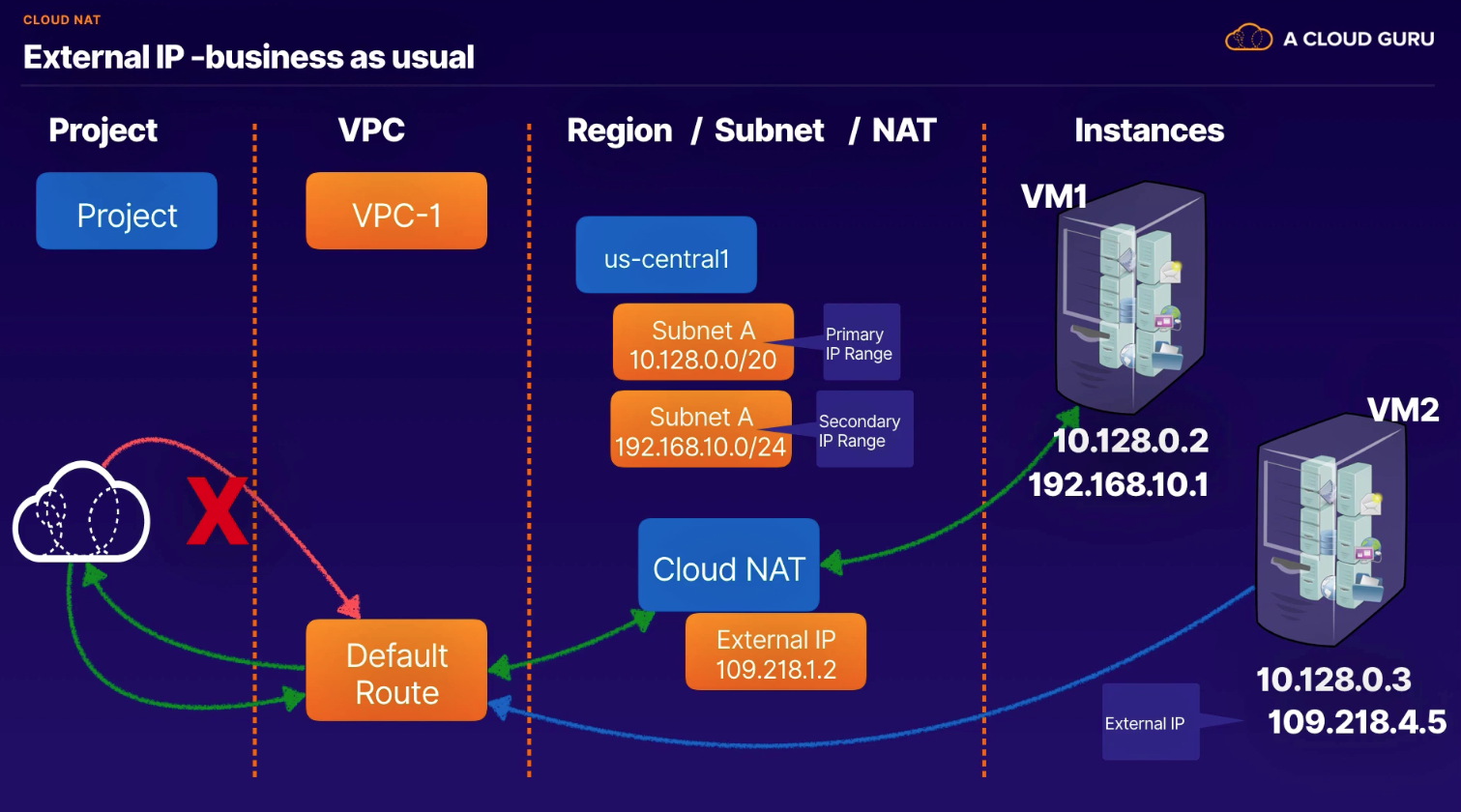

Lets your compute VM instances and GKE container pods communicate with the internet using a shared public IP address.

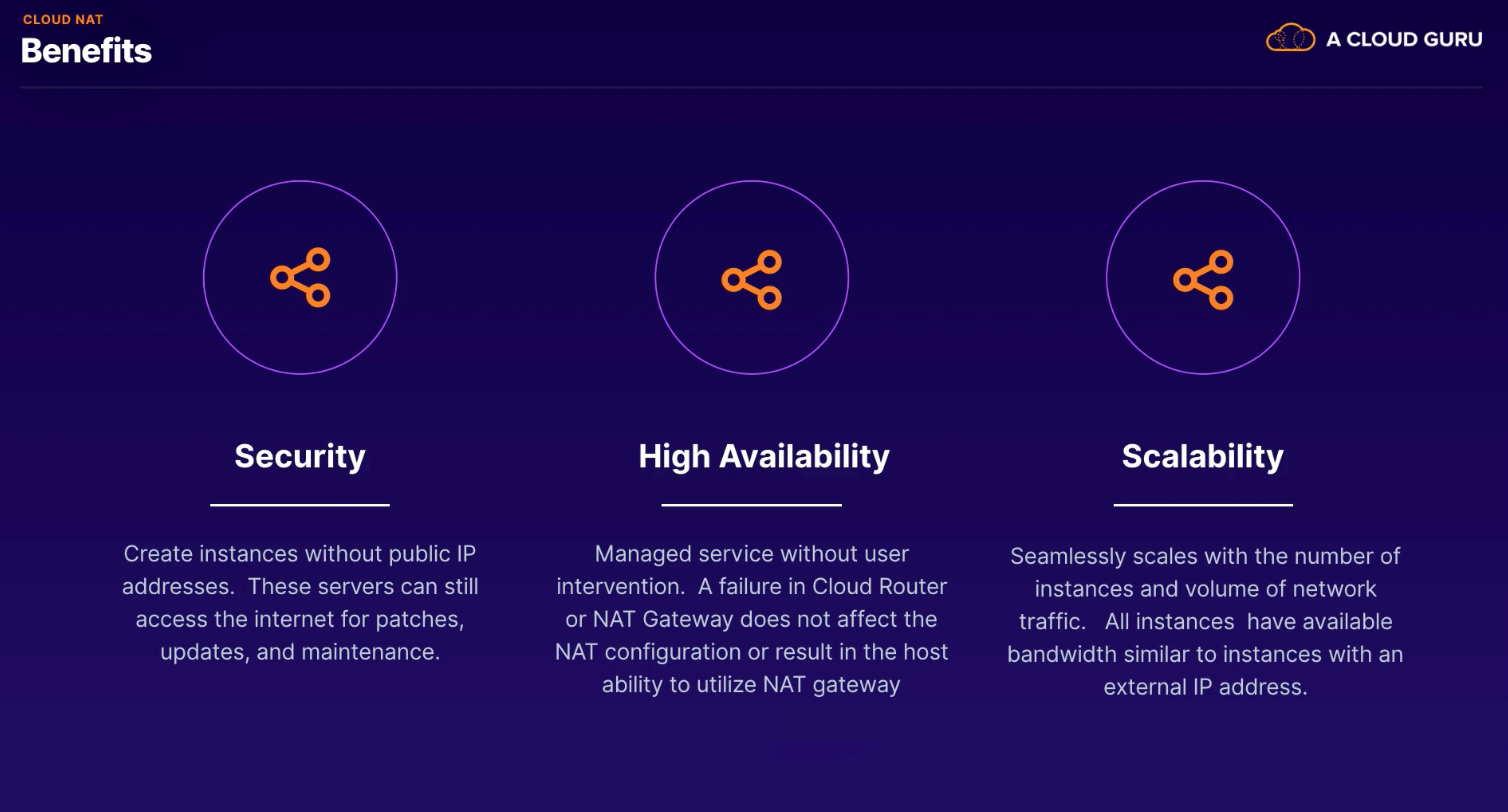

Benefits

- Security - create instances without public IP addresses

- High Availability - Managed service without user intervention

- Scalability - Seamlessly scales with the number of instances and volume of network traffic

Overview

- specific to one region

- choose ranges

- Primary & Secondary

- Primary only

- Selected subnets

- Outbound NAT traffic only (no inbound)

- VM with external IP does NOT need to route through NAT

Configuration

- Minimum ports per VM (allows up to 64K ports)

- Default 64 ports per VM

- VM ports assigned 32000 - 33023

- supports approx. 1,000 VMs per NAT IP address

- Increasing to 1024 for container workloads

- supports approx. 64 VMs per NAT IP address (keep in mind the IPs required for the GKE cluster and the number of nodes)

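The "approx 1000 VMs" and "approx 64 VMs" figures come from dividing the ports available on one NAT IP address by the minimum ports per VM. Each NAT IP offers 64,512 source ports (1024–65535). A sketch of that arithmetic, including how many NAT IPs a fleet would need:

```python
import math

PORTS_PER_NAT_IP = 64_512  # source ports 1024-65535 on each NAT IP address

def vms_per_nat_ip(min_ports_per_vm: int) -> int:
    """How many VMs one NAT IP can serve at a given minimum ports per VM."""
    return PORTS_PER_NAT_IP // min_ports_per_vm

def nat_ips_needed(vm_count: int, min_ports_per_vm: int) -> int:
    """NAT IP addresses needed so every VM gets its minimum port allocation."""
    return math.ceil(vm_count / vms_per_nat_ip(min_ports_per_vm))

print(vms_per_nat_ip(64))         # 1008  ("approx 1000 VMs")
print(vms_per_nat_ip(1024))       # 63    ("approx 64 VMs")
print(nat_ips_needed(500, 1024))  # 8
```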

Provides a method for VMs to reach the public IP addresses of Google APIs & Services through the VPC network's default internet gateway, without traversing the public internet and without requiring an external IP. Instances with an external IP do not use PGA.

Overview

- used only by instances that have internal IPs only

- enabled or disabled on a per-subnet basis

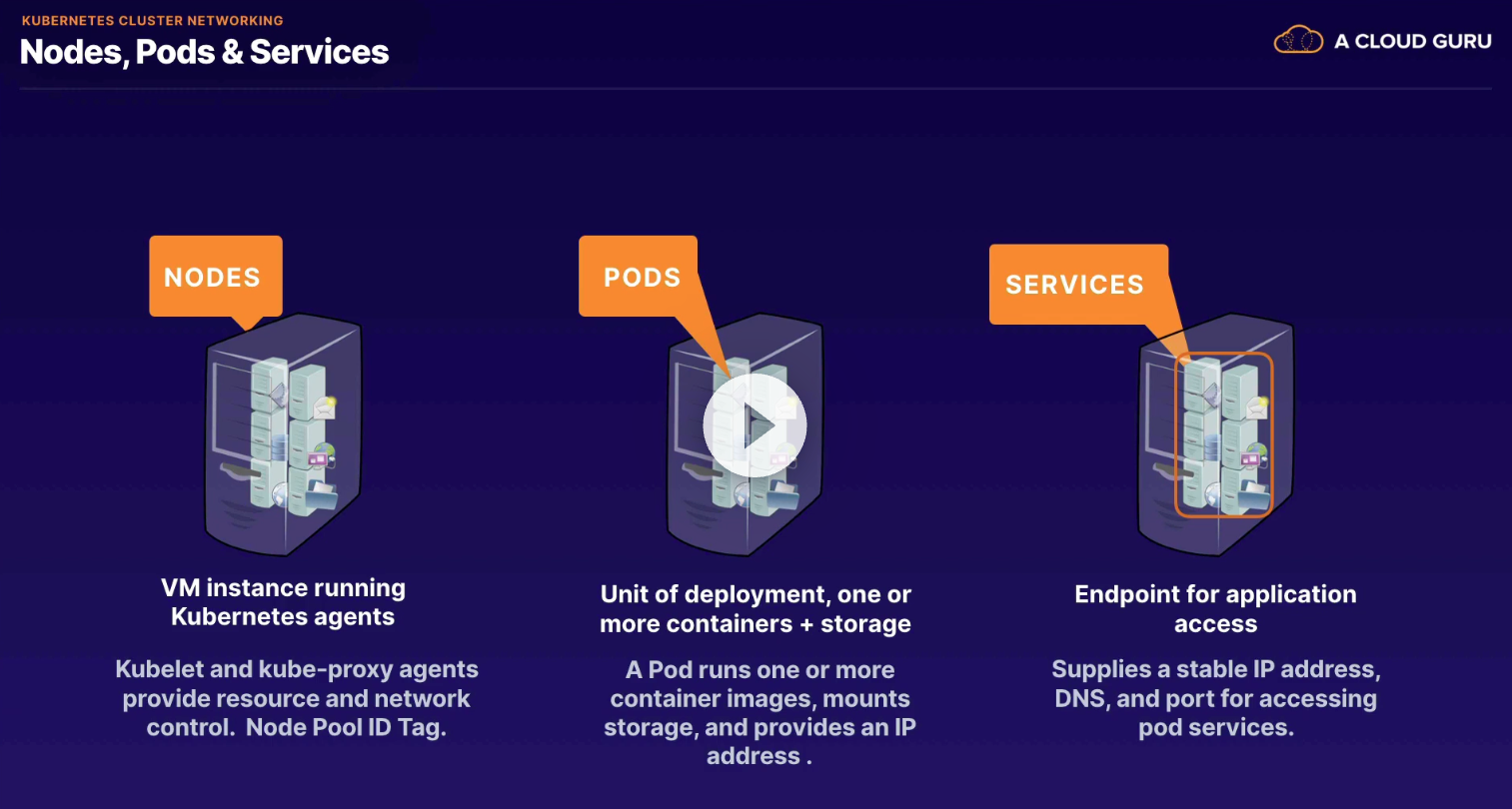

Control Plane (a.k.a. Master) - runs on Google Resources [managed]

- etcd (distributed persistent state store)

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- cloud-controller-manager

Workers

- Node Pool (groups of nodes)

- Node VM compute instances

- kubelet

- kube-proxy

Container Networking

- nodes: VM instances running Kubernetes agents (default `/20`, min `/29`, max `/8`)

- best practice: don't exceed 500 nodes

- pods: unit of deployment, one or more containers + storage (default `/14`, min `/19`, max `/9`)

- rolling updates, so you need at least double the max pods

- best practice: don't exceed 500,000 pods

- the Pod IP address range is a single range assigned at cluster creation and cannot be changed

- services: endpoint for application access (default `/20`, min `/27`, max `/16`)

Zonal vs Regional Cluster

- Zonal clusters have a single control plane instance by default

- the control plane can be unavailable for cluster updates during maintenance/upgrades

- Nodes share single regional subnet

Regional Cluster

- nodes spread across multiple zones in region

- Control plane at least 3 instances across zones so higher availability

- Nodes share single regional subnet

Routes-based Cluster

- legacy

- move traffic through cluster using routes

- a single IP range is used for BOTH pods and services; the last `/20` of the range is used for services

- if the default `/14` range is assigned, the total is 262,144 IPs

- the last `/20` is 4,096 addresses for services

- so pods have 258,048 addresses available (`/14` minus `/20`)

- IPs assigned to pods by carving subsets from Pod IP range

- IP range is single range assigned to pods at cluster creation

- max pods/node of 110 allocates 256 IPs per node (`/24` CIDR)
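The routes-based numbers above can be recomputed directly. The last line estimates the node count the pod portion could hold if every node takes a `/24`. That figure is only arithmetic implied by these notes, an assumption rather than a documented GKE limit.

```python
def size(prefix: int) -> int:
    """Addresses in an IPv4 /prefix block."""
    return 2 ** (32 - prefix)

cluster_range = size(14)   # default /14 shared by pods and services
services = size(20)        # last /20 reserved for services
pods = cluster_range - services
per_node = size(24)        # 110 max pods/node -> /24 (256 IPs) per node

print(cluster_range)       # 262144
print(services)            # 4096
print(pods)                # 258048
print(pods // per_node)    # 1008 nodes, each with its own /24
```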

VPC Native Cluster (Alias IP)

- acquire addresses from regional VPC subnet

- able to customize max pods/node, which affects the min/max nodes in the cluster

- able to set NEGs as backend for load balancer

- only option to use Shared VPC clusters

Default

- master and nodes have external IP address

- Master authorized networks can be enabled for BOTH private and public clusters

- must add Master IP Range to cluster so peering can be set up for private RFC1918 communication between control plane and nodes

Public Master / Public Nodes

- default configuration

- all have external IPs

Public Master / Private Nodes

- nodes do not have external IP address

- control plane (Public Master) still accessible with external IP

- need Cloud NAT for pods to reach internet

- best to enable Master Authorized Networks to protect the control plane (IP allowlist)

Private Master / Private Nodes

- need a new internal IP range (e.g. 172.16.0.0/28)

- must assign Master Authorized Network(s) to allow a bastion host, etc. to reach the control plane

- need Cloud NAT for pods to reach internet

- a `peering-route` will be added to the routes table for the managed control plane's new IP range

- create IP ranges in Host Project PRIOR to cluster creation

- Only supported by VPC-Native Clusters (Alias IP)

- grant cluster service account IAM role on host project

- Host Service Agent User role (`roles/container.hostServiceAgentUser`)

- the user creating the cluster MUST have the Network User role (`roles/compute.networkUser`)

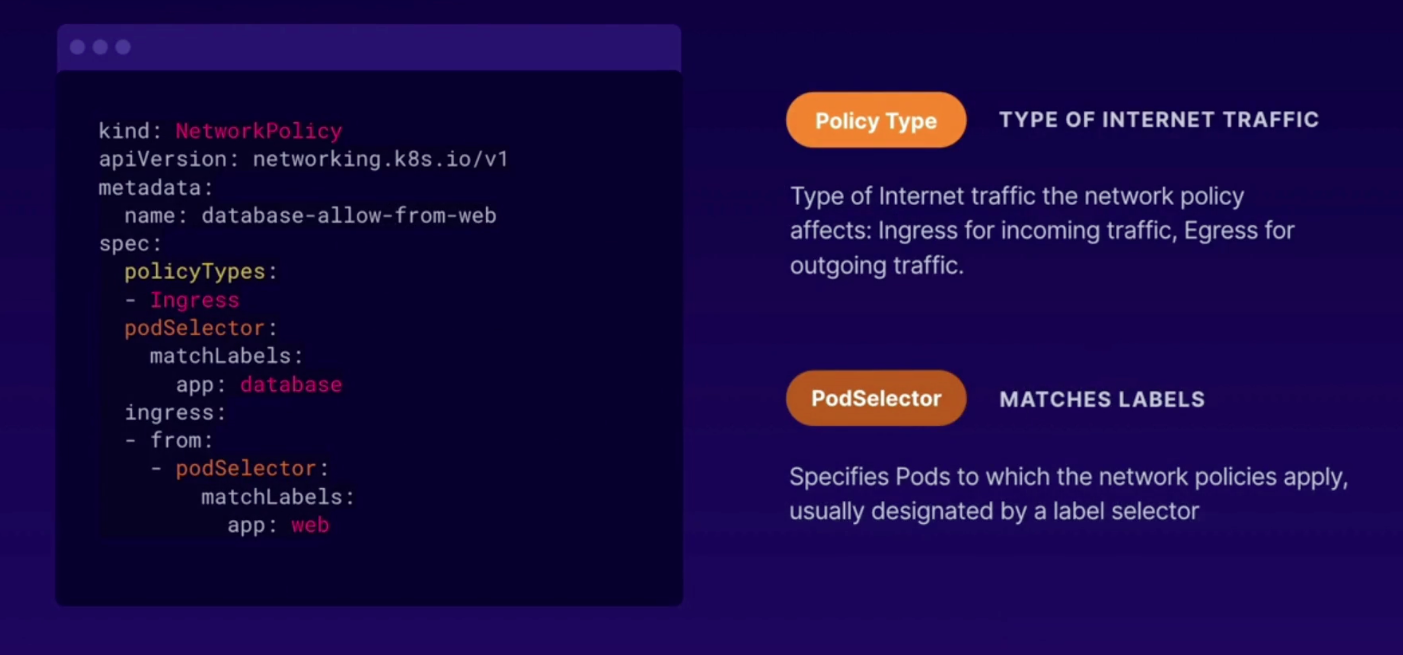

Pod and Service network security. Isolating Pods and Services. By default pods are not isolated.

- enable at cluster creation time, or after cluster creation

- need at least the `n1-standard-1` machine type to accommodate the additional resources

- GKE will recreate all cluster nodes (NOTE: if a maintenance window is configured, this won't run until the next scheduled maintenance window)

- when enabled, all pods still can communicate until you define policy rules (YAML file)

- example deny all

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: default-deny-ingress

spec:

podSelector: {}

policyTypes:

- Ingress- example web to database

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: database-allow-from-web

spec:

policyTypes:

- Ingress

podSelector:

matchLabels:

app: database

ingress:

- from:

- podSelector:

matchLabels:

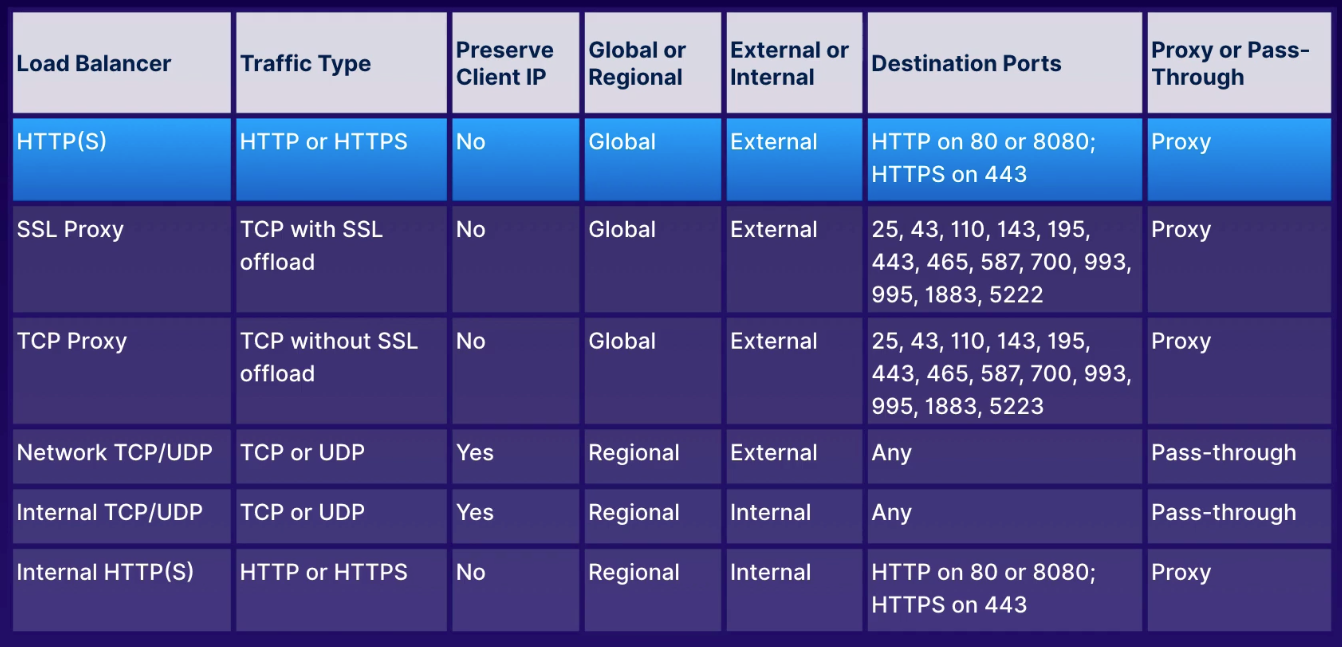

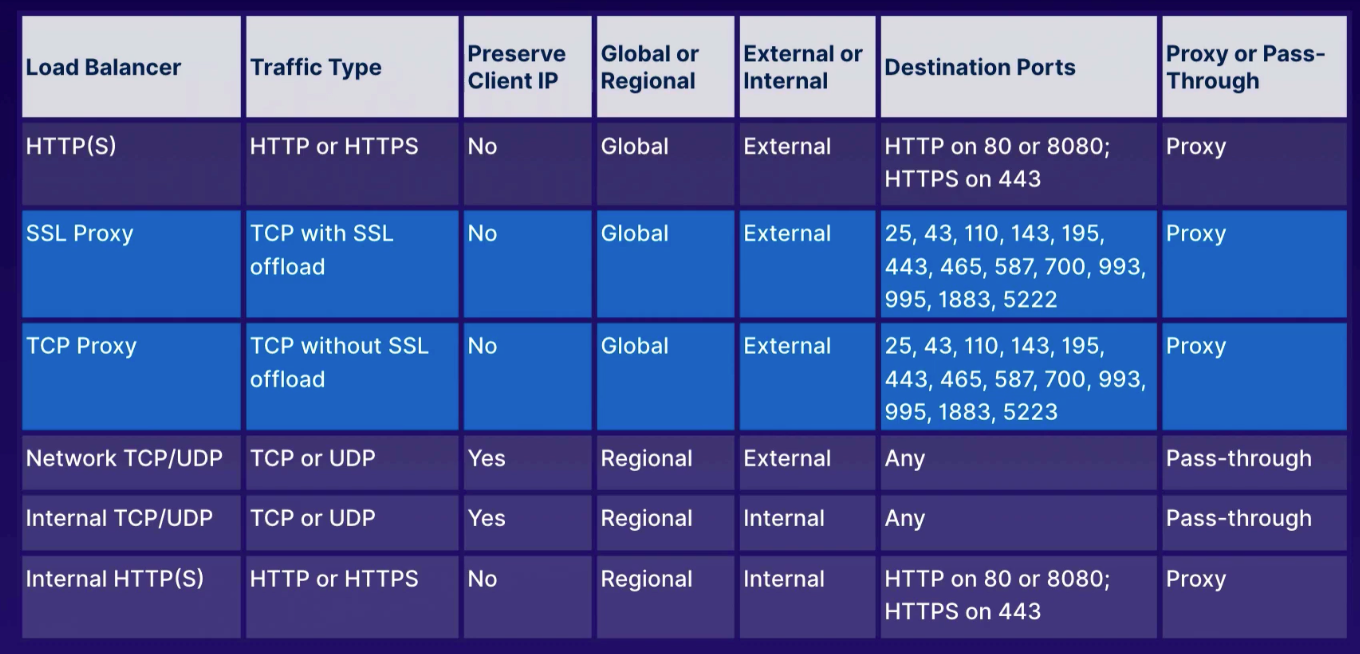

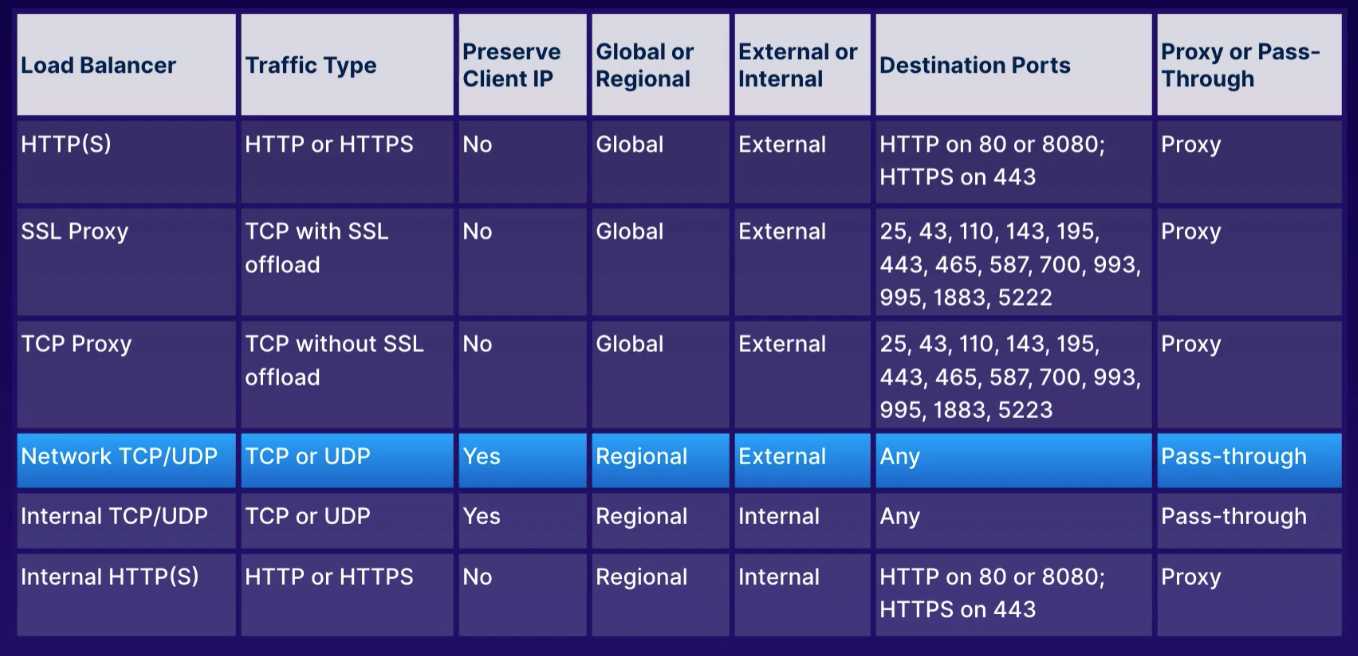

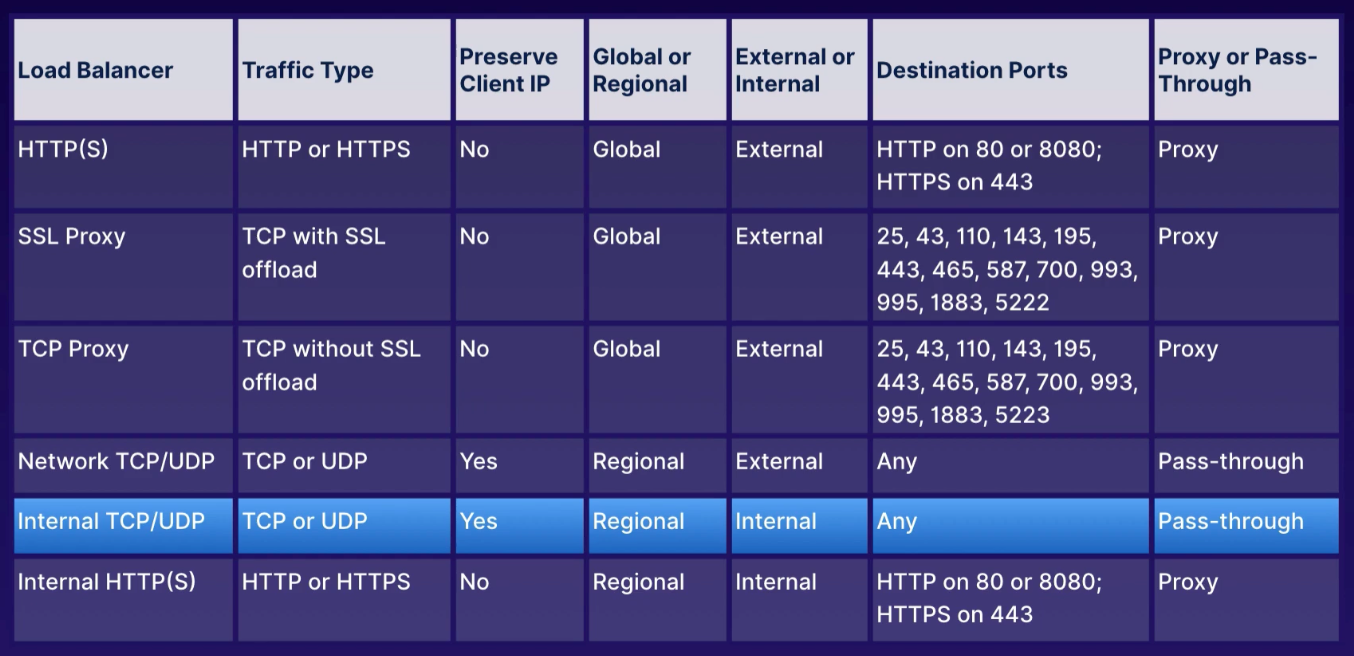

app: webActs as traffic director to multiple backends to allow scaling.

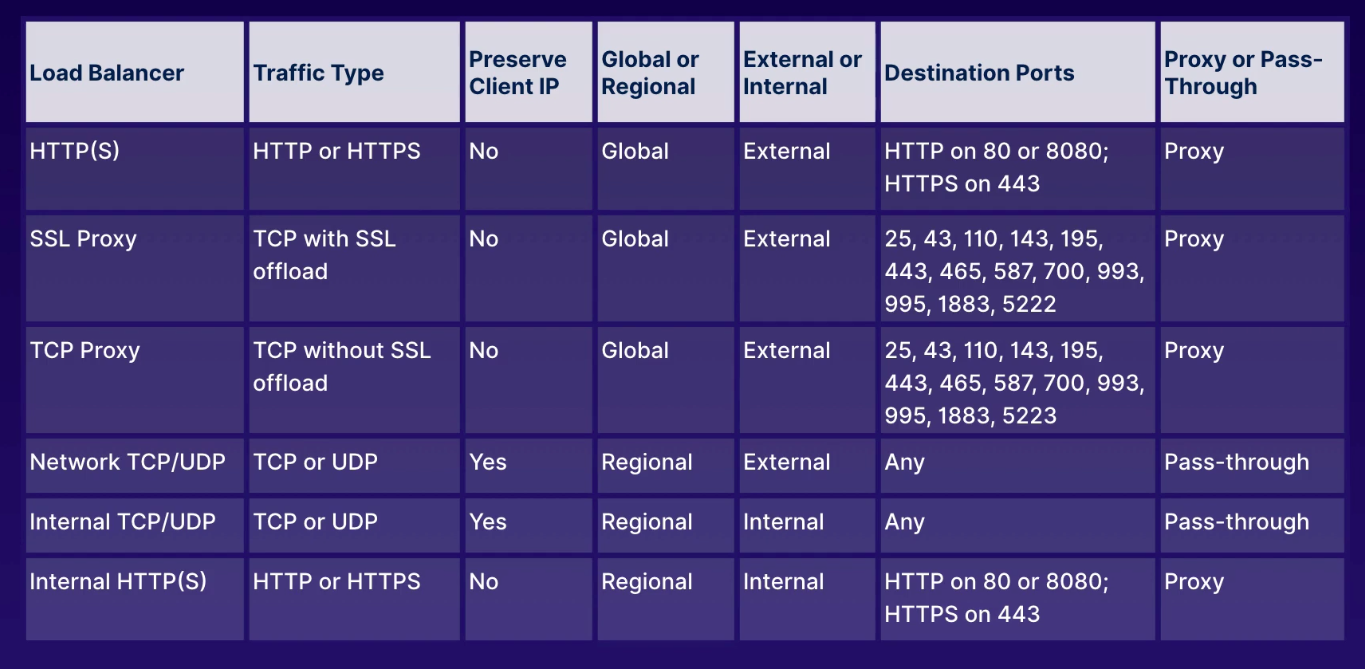

- HTTP(S) load balancer

- layer 7 for HTTP and HTTPS applications

- ports `80`, `8080`, and `443`

- TCP load balancer

- layer 4 load balancing or proxy for applications that rely on TCP/SSL protocol

- UDP load balancing

- layer 4 load balancing for apps that rely on UDP protocol

To know

- key difference of proxy load balancers (terminates incoming connections, opens new connections to backend)

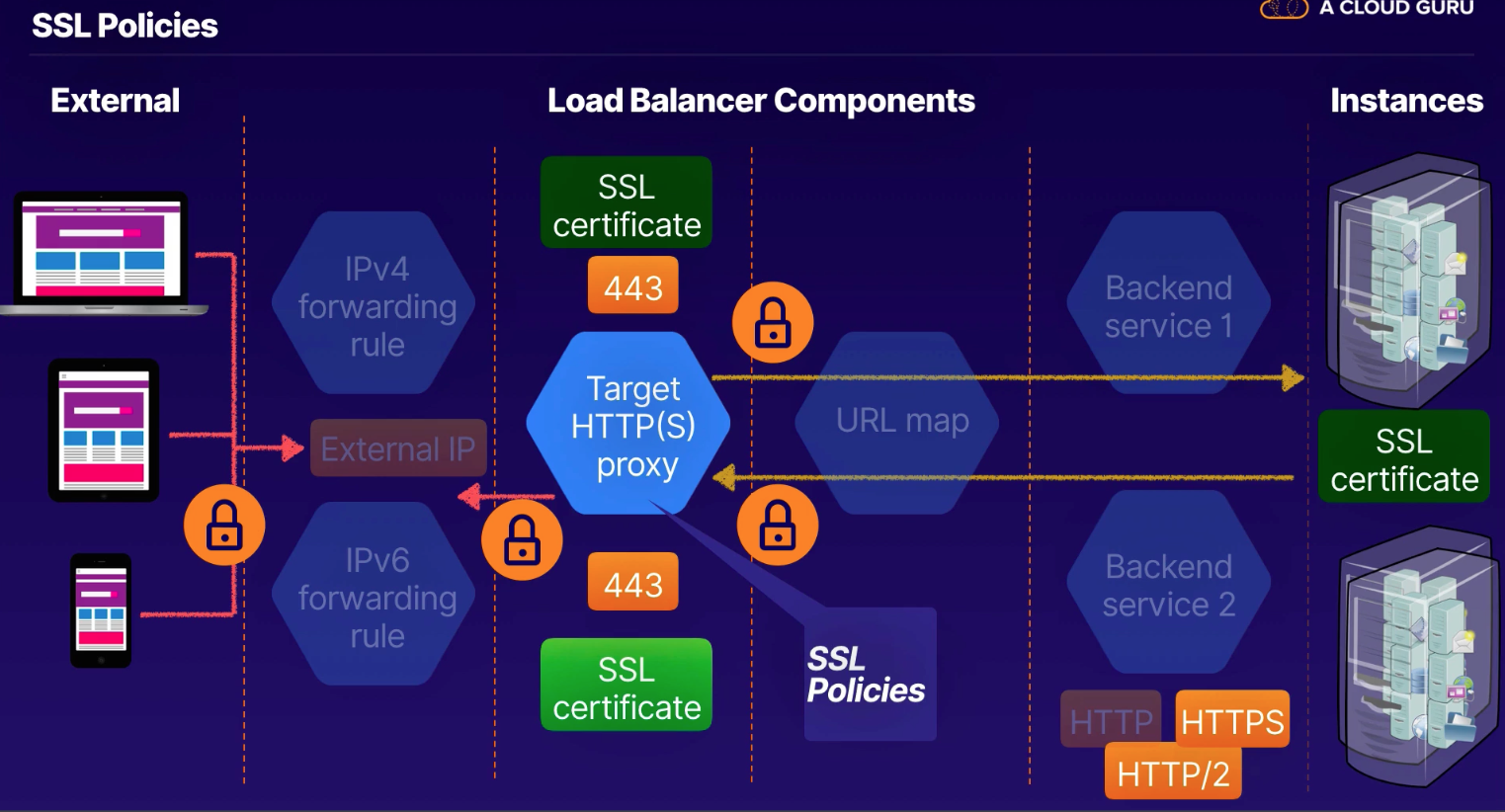

Components

- Forwarding Rule (external IP [VIP = anycast IP]) - fwd rule for IPv4 and another for IPv6

- Target Proxy (terminate request) - HTTP or HTTPS

- URL map (L7 URI to route to backends, or storage bucket)

- Backend service (instance group and serving capacity metadata; specify which health checks performed)

- Instances

- Storage buckets

- MUST HAVE a firewall rule allowing health checks from `130.211.0.0/22` and `35.191.0.0/16`

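A quick way to confirm that a firewall rule admits the health-check probers is to test whether its source ranges cover both Google health-check ranges listed above. The helper below is hypothetical. It checks source ranges only, not ports, protocols or targets.

```python
import ipaddress

# Google health-check source ranges named in the note above.
HEALTH_CHECK_RANGES = [
    ipaddress.ip_network(c) for c in ("130.211.0.0/22", "35.191.0.0/16")
]

def firewall_allows_health_checks(allowed_sources):
    """True if the rule's ingress source ranges cover both health-check ranges."""
    allowed = [ipaddress.ip_network(c) for c in allowed_sources]
    return all(any(hc.subnet_of(a) for a in allowed) for hc in HEALTH_CHECK_RANGES)

print(firewall_allows_health_checks(["130.211.0.0/22", "35.191.0.0/16"]))  # True
print(firewall_allows_health_checks(["35.191.0.0/16"]))                    # False
```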

Balancing Mode

- tells the LB system when a backend is at full capacity

- if full, new requests are automatically routed to another backend

- Premium Tier

- global external IP address (IPv4 or IPv6)

- "anycast IP"

- traffic routed to location nearest to user

- Standard Tier

- regional external IP address (IPv4)

- only distribute traffic to VMs in single region

- traffic routed to location where backend instances are located

- does not support port 80

- supported ports include 25, 43, 110, 143, 195, 443, 465, 587, 700, 995, 1883, 5222

Proxy load balancers (TCP / SSL)

- global services and not single region

- only specific ports are supported; for any other port, use a network load balancer

- original port/IP address not preserved by default

- target proxy terminates connection; opens another to backends (why losing original IP info)

- can configure the PROXY protocol header to preserve the original source IP/port info

- session affinity (route subsequent requests to same backend instances)

- backend services manage health checks and detect if available to send traffic

Firewall rules (allow health checks)

Regional non-proxy load balancer that does not terminate client connections but allows them to pass through to our GCP backends.

- within a single region, the load balancer services all zones

- does not modify source IP or port

- instances chosen at random

- existing traffic sent to same server using source IP/port and destination IP/port hash

- no session affinity, but target pools provide that persistent connection

- does not load balance traffic within GCP network

- responses go directly back to client, not through load balancer

- industry term: direct server return

- must run a basic web server on each instance so HTTP health checks on port 80 succeed
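The "same server for existing traffic" behavior comes from hashing the connection tuple. Every packet of a flow maps to the same backend. The sketch below shows the idea with SHA-256 over a 5-tuple. It is an illustration, not Google's actual hashing function, and the backend names are hypothetical.

```python
import hashlib

BACKENDS = ["vm-a", "vm-b", "vm-c"]  # hypothetical target pool

def pick_backend(src_ip, src_port, dst_ip, dst_port, protocol):
    """Map a flow's 5-tuple to a backend. The same flow always hashes to the
    same backend, so packets of an existing connection stick together."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    return BACKENDS[int.from_bytes(digest[:8], "big") % len(BACKENDS)]

flow = ("198.51.100.7", 51514, "203.0.113.10", 80, "tcp")
print(pick_backend(*flow))
```

A new connection from the same client usually has a different source port, so it may land on another backend. That is why affinity needs a coarser hash (for example, client IP only).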

Key difference

- GCP recommends allowing all IP addresses (`0.0.0.0/0`)

- Allow higher availability and flexibility in rolling updates, maintenance

- only RFC1918 addresses in same region (not traffic from public internet)

- internal fwd rules must be in same subnet

- each uses one regional backend service (must have 1 IG and health check)

- no UDP health checks, so must run a TCP service to respond to health check

- can configure failover backends that take over when a configurable threshold of backends is not responding

- configure firewall rules for internal traffic source ranges

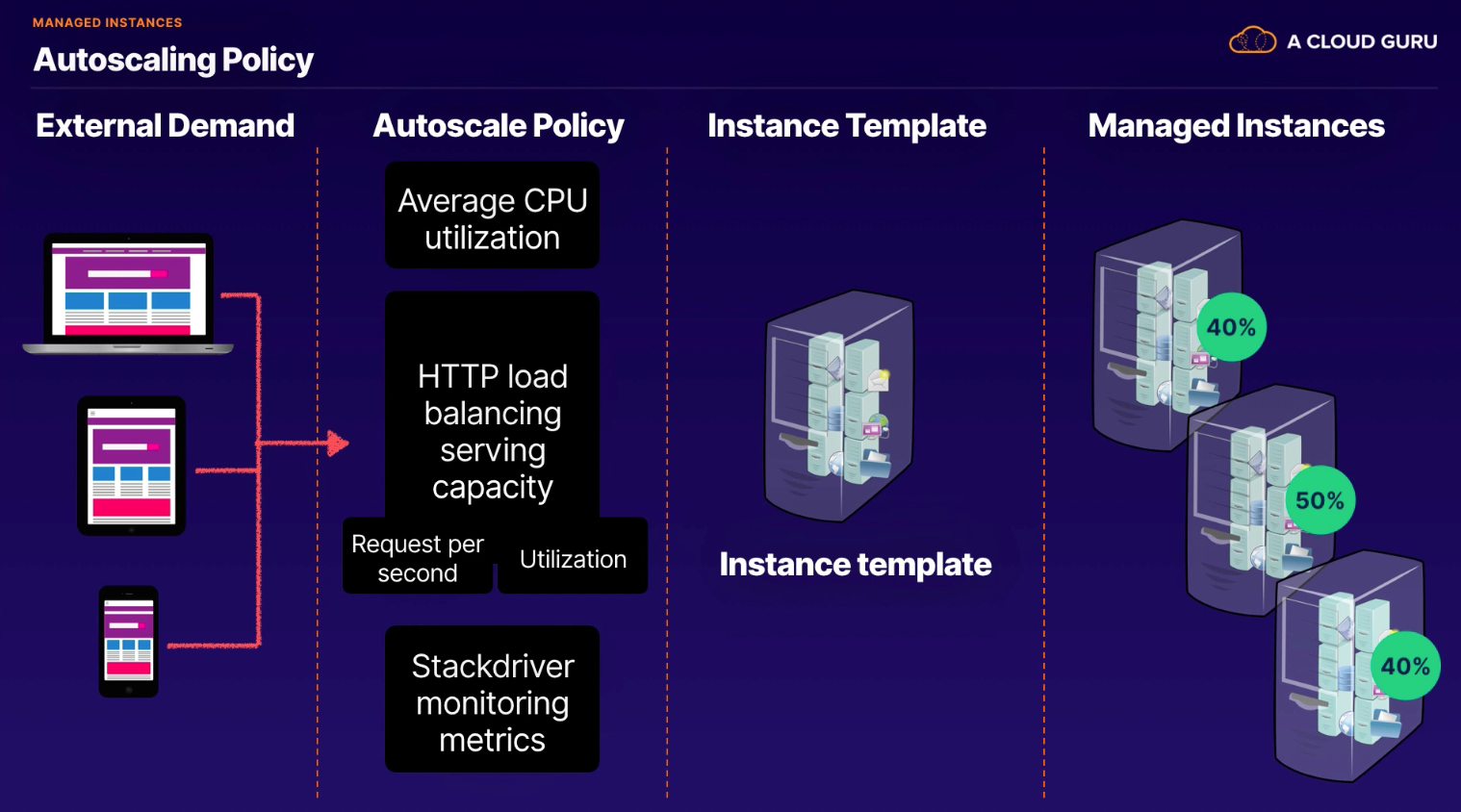

- instance template

- zonal, regional (recommend 3 minimum instances)

- managed instances

- autoscaling policy (default 60 second cool down period; configurable)

- unmanaged instance

- no autoscaling policy


To know:

- rolling update and canary (how to)

- autoscaling target utilization methods

- Average CPU utilization

- Cloud Monitoring metrics

- HTTP load balancing serving capacity (utilization or requests/sec)
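Target-utilization autoscaling can be approximated with a proportional model. If load stays constant, utilization scales inversely with group size, so the new size is the current size times current utilization divided by the target, rounded up and clamped. This simplified model is an assumption, not GCP's exact algorithm, which also applies cooldown and stabilization. The min/max values are hypothetical.

```python
def recommended_size(current_size, current_util_pct, target_util_pct,
                     min_size=1, max_size=10):
    """Proportional scaling model: the smallest group size that brings average
    utilization to (or below) the target, clamped to the group's min/max."""
    if not 0 < target_util_pct <= 100:
        raise ValueError("target_util_pct must be in (0, 100]")
    # Integer ceiling of current_size * current / target (avoids float rounding).
    wanted = -(-(current_size * current_util_pct) // target_util_pct)
    return max(min_size, min(max_size, wanted))

print(recommended_size(4, 90, 60))  # 6
print(recommended_size(4, 20, 60))  # 2
print(recommended_size(8, 95, 50))  # 10 (clamped to max_size)
```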

Logical groupings of IPs and ports representing software services instead of entire VMs

- VPC

- subnet

- region/zone

- default port

- (Add Network Endpoint): VM, container, or apps

- add IP/Port of app running


Highlights

- every IP address must be in same subnet

- VM instance must be in same zone as NEG

- network interface must be in same subnet in VPC network

- if using NEG in load balancer, all other backends MUST BE NEGs

- IP must be primary address or alias IP

- can add same NEG for more than one backend service

- can add same IP/port to multiple NEGs

To know

- you cannot use the `UTILIZATION` balancing mode for backend services that use a zonal NEG as a backend

- only the `RATE` or `CONNECTION` balancing modes can be used

Console

- Compute

- Network Endpoint Groups


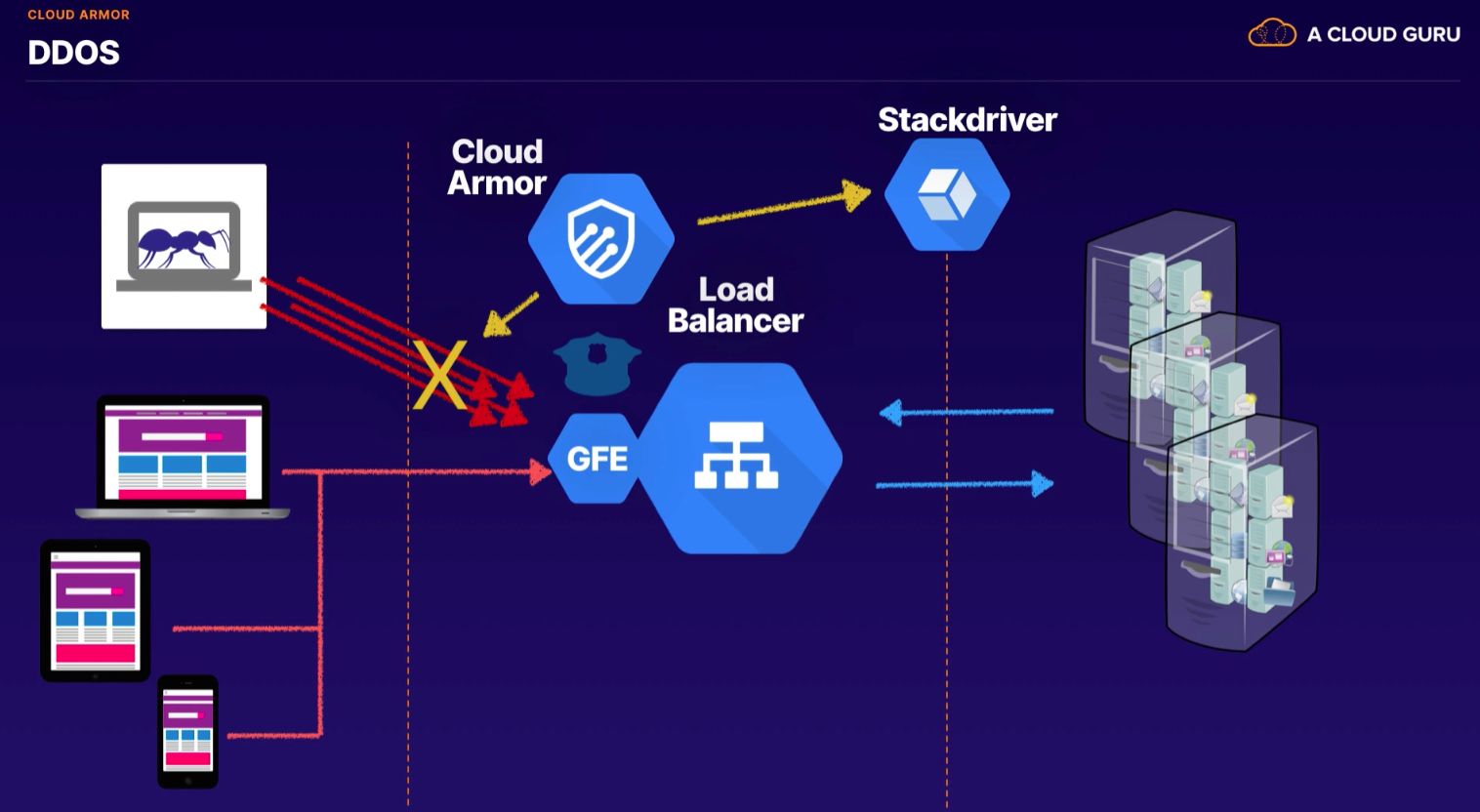

Security Policies are sets of rules you define to enforce application layer firewall rules protecting externally-facing application or services. Each rule is evaluated with respect to incoming traffic.

- "Service firewall rules"

- Operates at the GCP edge, closest to the source, preventing unwanted traffic from consuming resources

- Not supported for internal traffic

- Check which LBs it supports (formerly just HTTP(S) load balancing)

- "Deny lists" or "Allow lists"

- 5 IPs or ranges per rule

IAM Roles

- Compute Security Admin (create, modify policies)

- Compute Network Admin (assign policies to backend service)

Preview mode

- log actions to see how it works before applying rules to traffic

Three zones available using Cloud DNS

- Internal DNS

- cannot be turned off; automatically created by Google Cloud

`[INSTANCE_NAME].[ZONE].c.[PROJECT_ID].internal`

- Private Zone

- contains DNS records only visible internally within your GCP network(s)

- Supports DNS Forwarding and DNS Peering

- Public Zone

- visible to the internet. Usually purchased through a Registrar.

- Private and Public Zones

Creating a Private DNS zone

- Public or Private

- Zone name (e.g., `research-acme-com`)

- DNS name (e.g., `research.acme.com`)

- Description (optional)

- Options

- Default (private)

- Forward queries to another server

- DNS Peering

- Managed reverse lookup name

- Networks

- multi-select

Then create a record set

- A, CNAME, etc.

Create a Public DNS zone

- Zone name (e.g., `dev-acme-com`)

- DNS name (e.g., `dev.acme.com`)

- DNSSEC (Off)

- On

- Off

- Transfer

- Description (optional)

- then add new name server (NS) records pointing to GCP's name servers

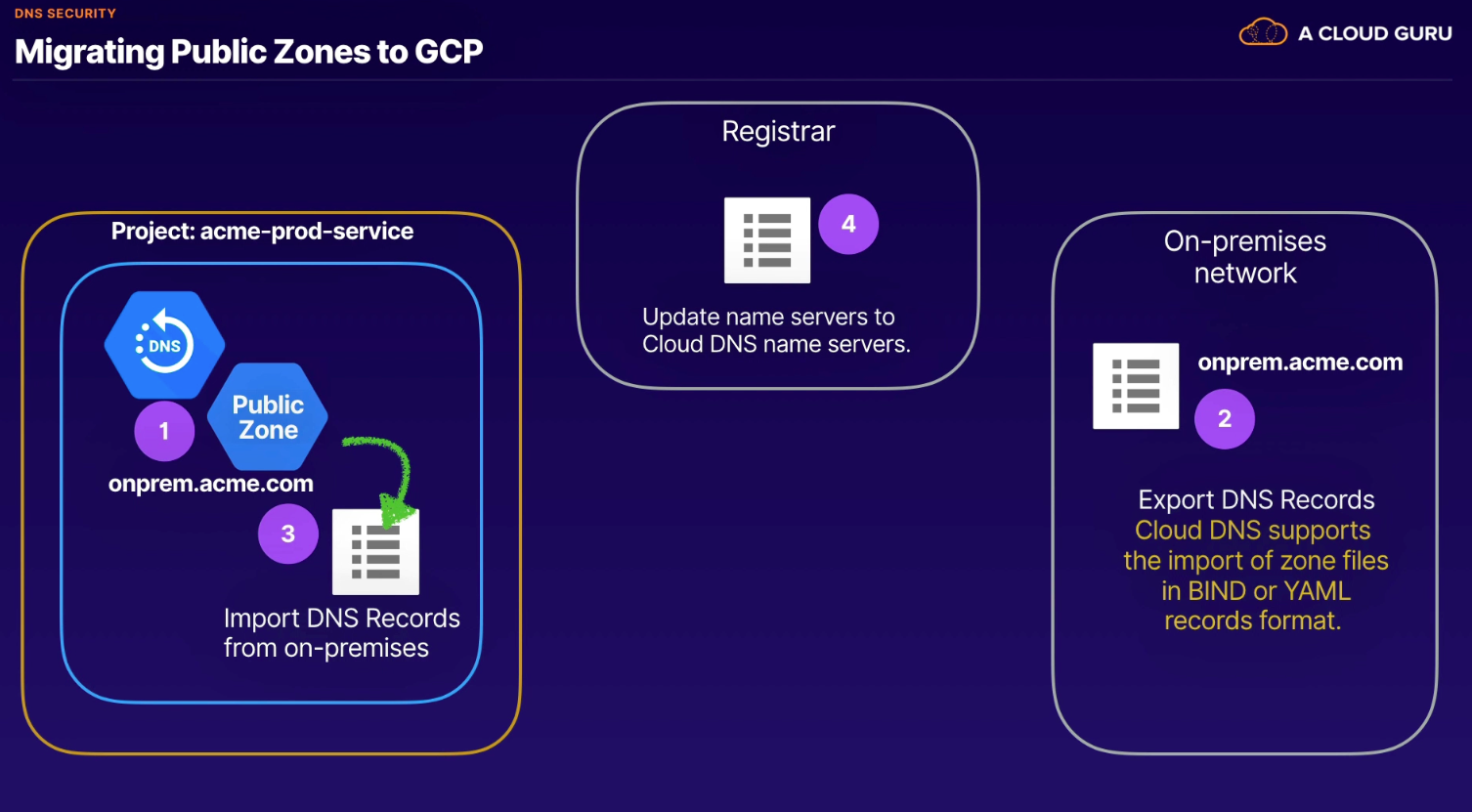

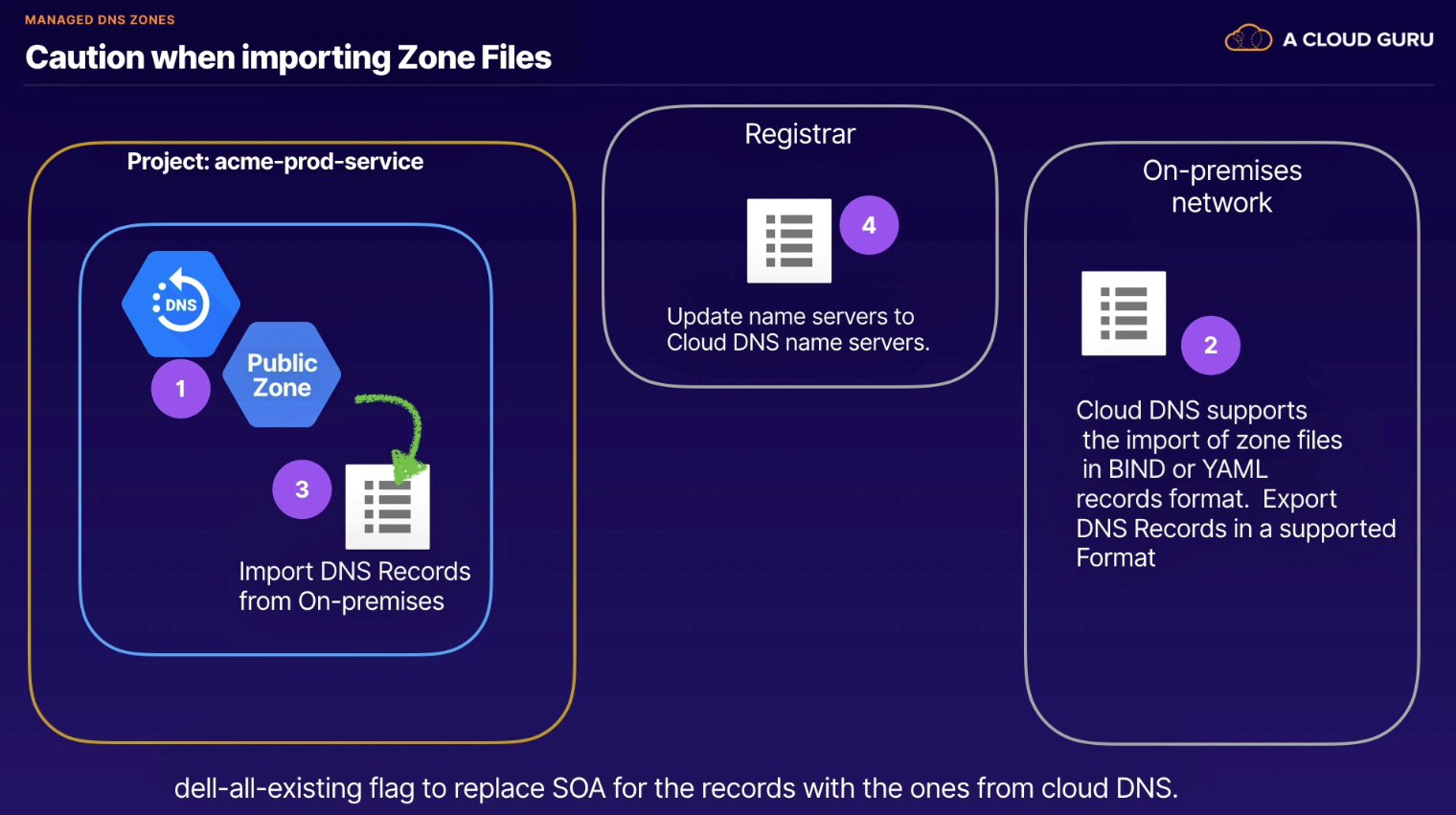

Migrating existing public zones

- Create public zone

- Export records from existing on-premises network

- BIND or YAML format

- Import DNS Records from on-premises

- WARNING: if the import contains SOA (start of authority) records, use the `--delete-all-existing` flag to replace them with the ones from Cloud DNS

- Update name servers to Cloud DNS name servers at Registrar

DNS Resolution Order on GCP

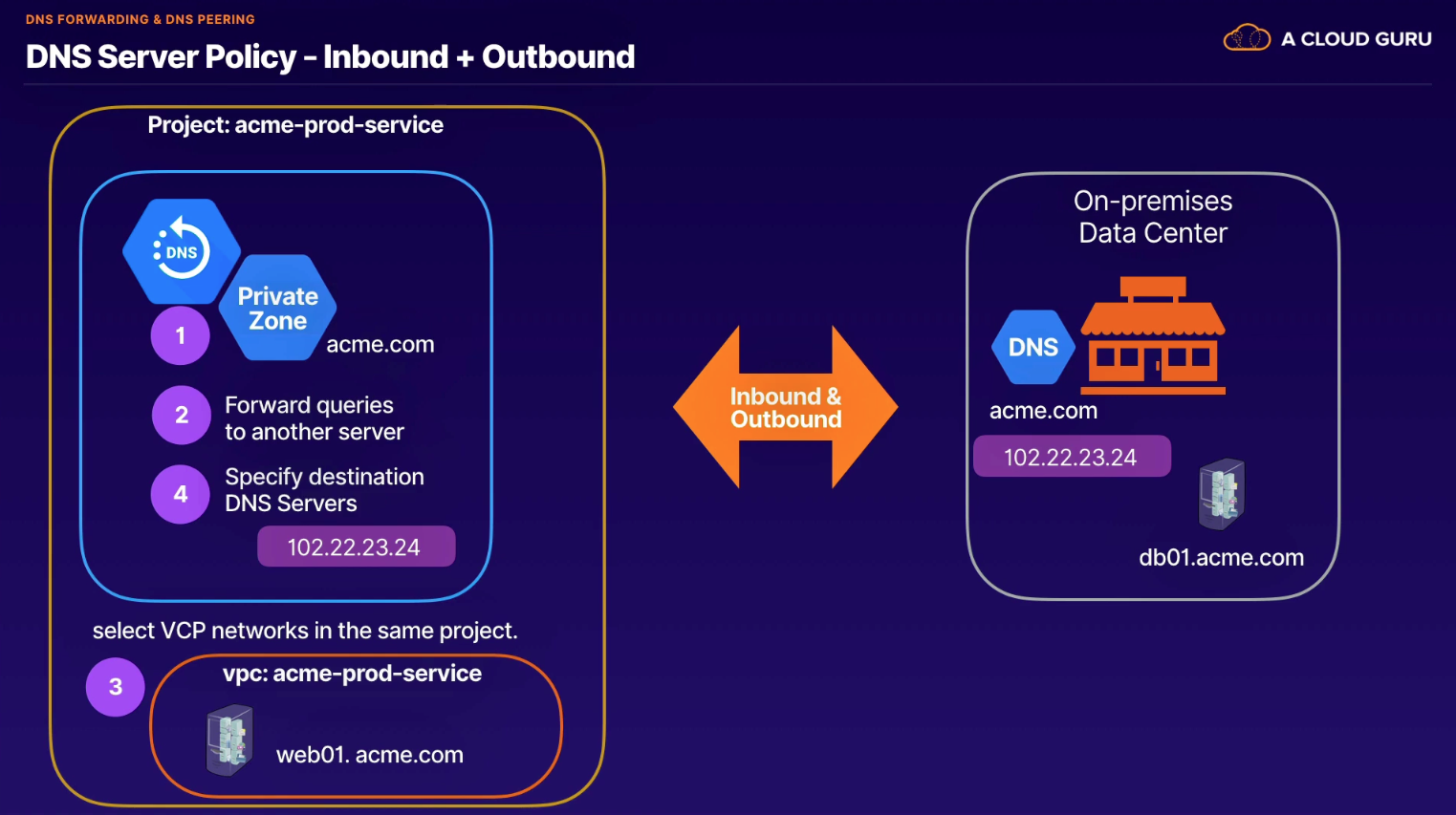

- Forwarding - provide inbound and outbound between on-prem and Cloud DNS (hybrid DNS)

- CANNOT USE TO FORWARD BETWEEN 2 GCP ENVS REGARDLESS OF DIRECTION

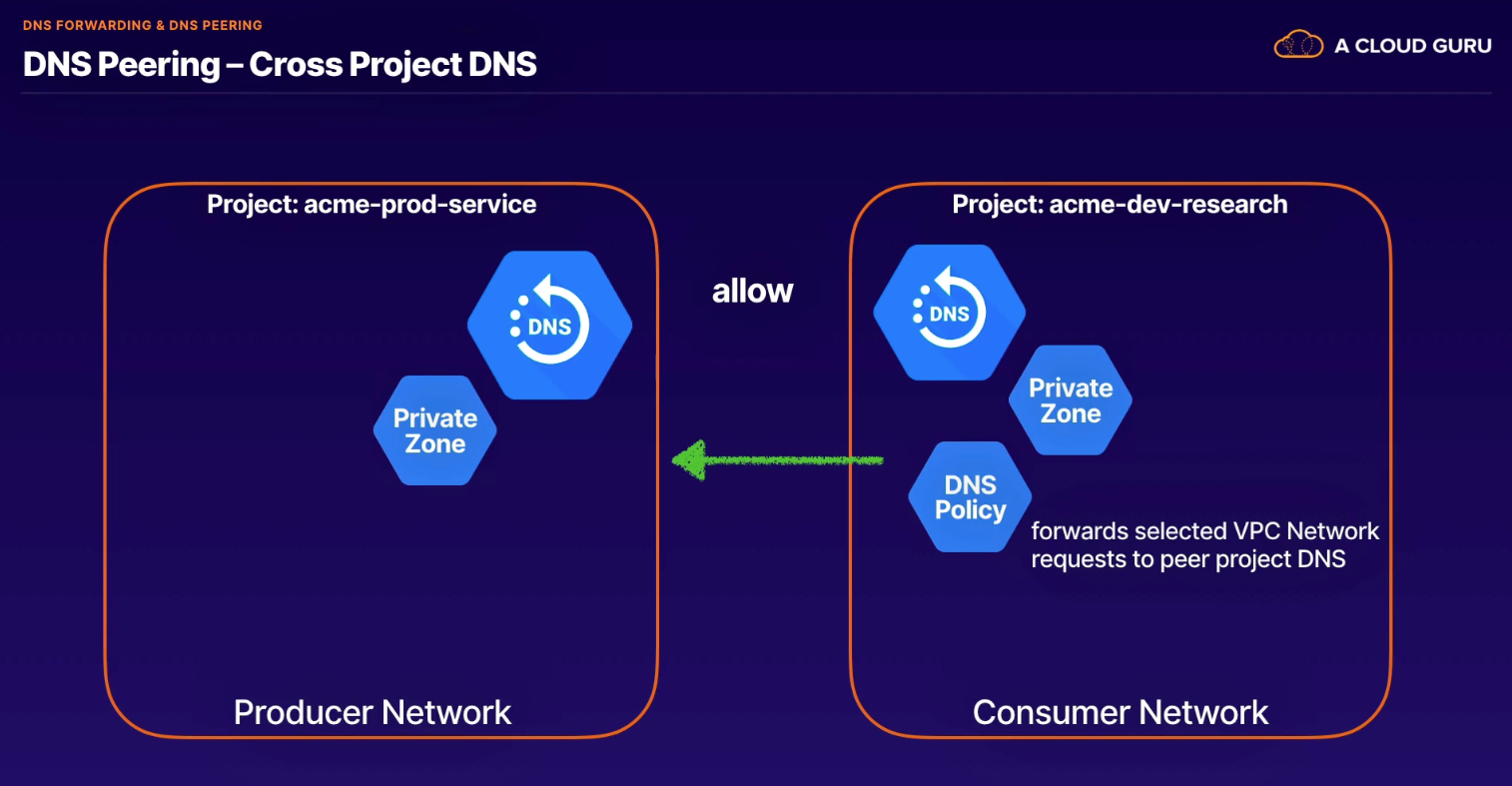

- Peering - extend DNS zones between VPC networks

DNS Policy (forwarding rules) - alternative to forwarding

Summary (Top 5)

- Private Zone

- Cloud DNS private zones support DNS services for a GCP Project. VPCs in the same project can use the same name servers.

- DNS Forwarding for Private Zones

- Overrides normal DNS resolution of the specified zones. Instead, queries for the specified zones are forwarded to the listed forwarding targets

- DNS Peering for Private Zones

- lets you send requests for records that come from one zone's namespace to another VPC network

- DNS Policy Outbound

- when enabled in Cloud DNS, forwards all DNS requests for a VPC network to the name server targets. Disables internal DNS for the selected networks

- DNS Policy Inbound

- create an inbound DNS Policy to allow inbound connections from on-premises systems to use that network's VPC name resolution order

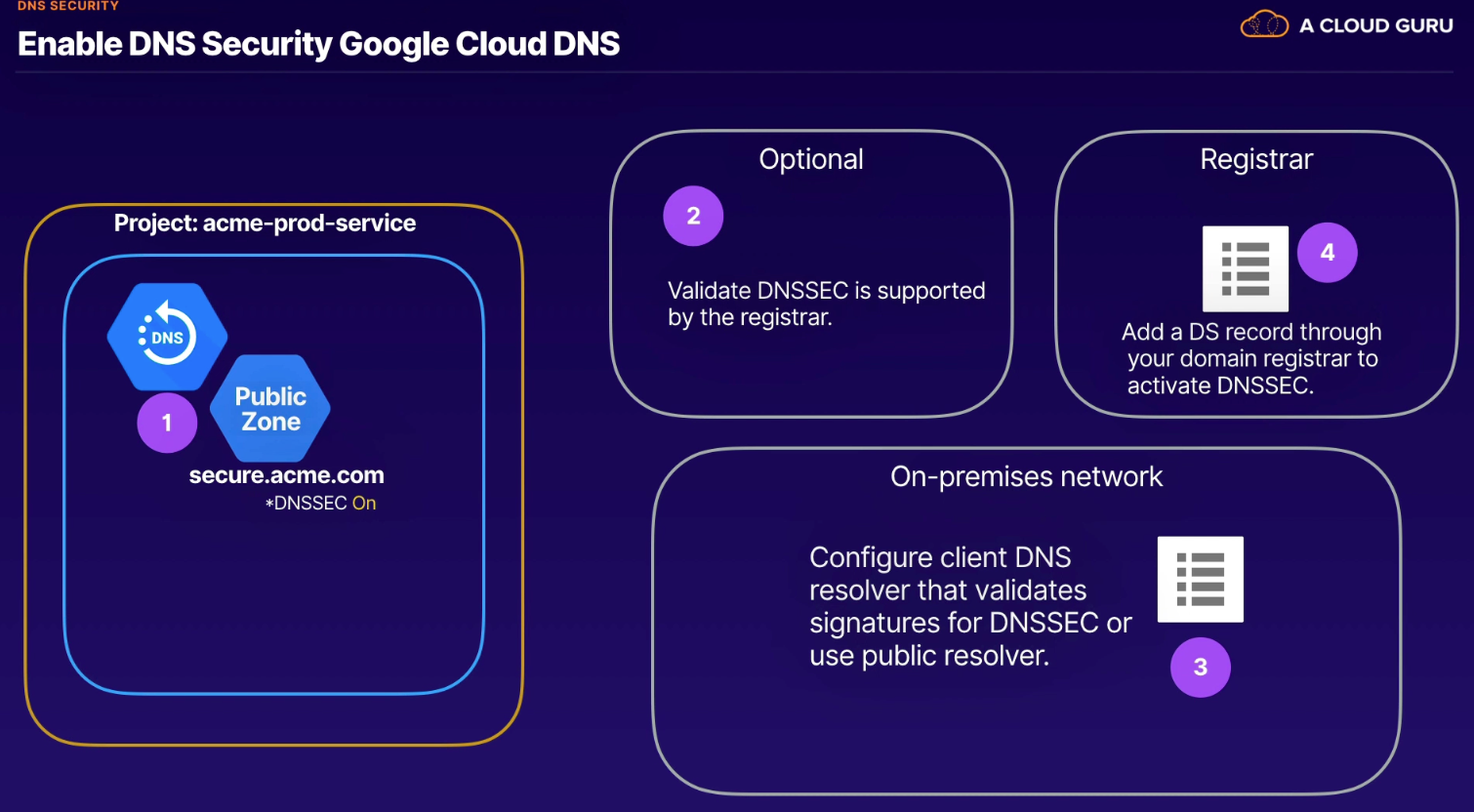

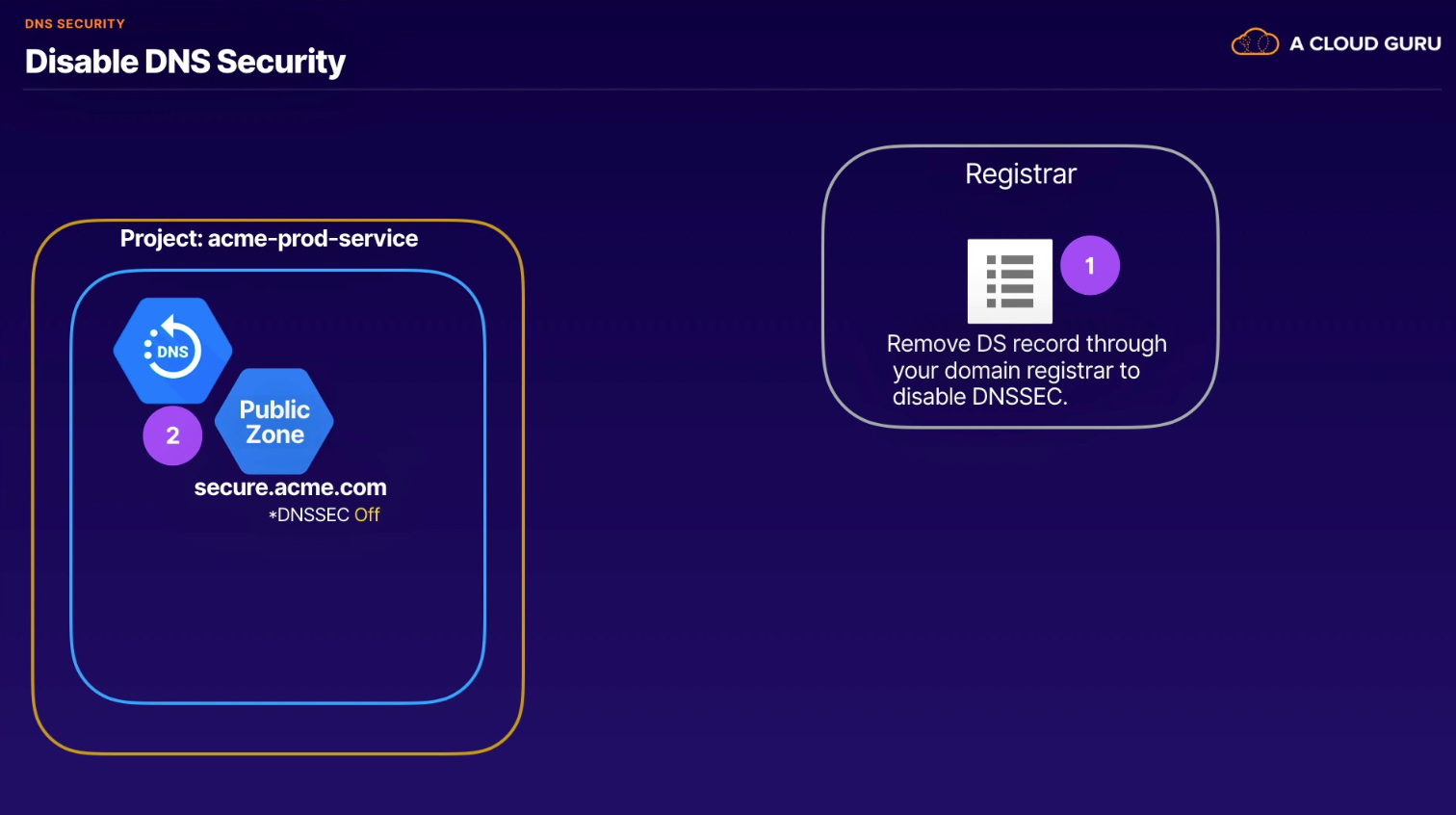

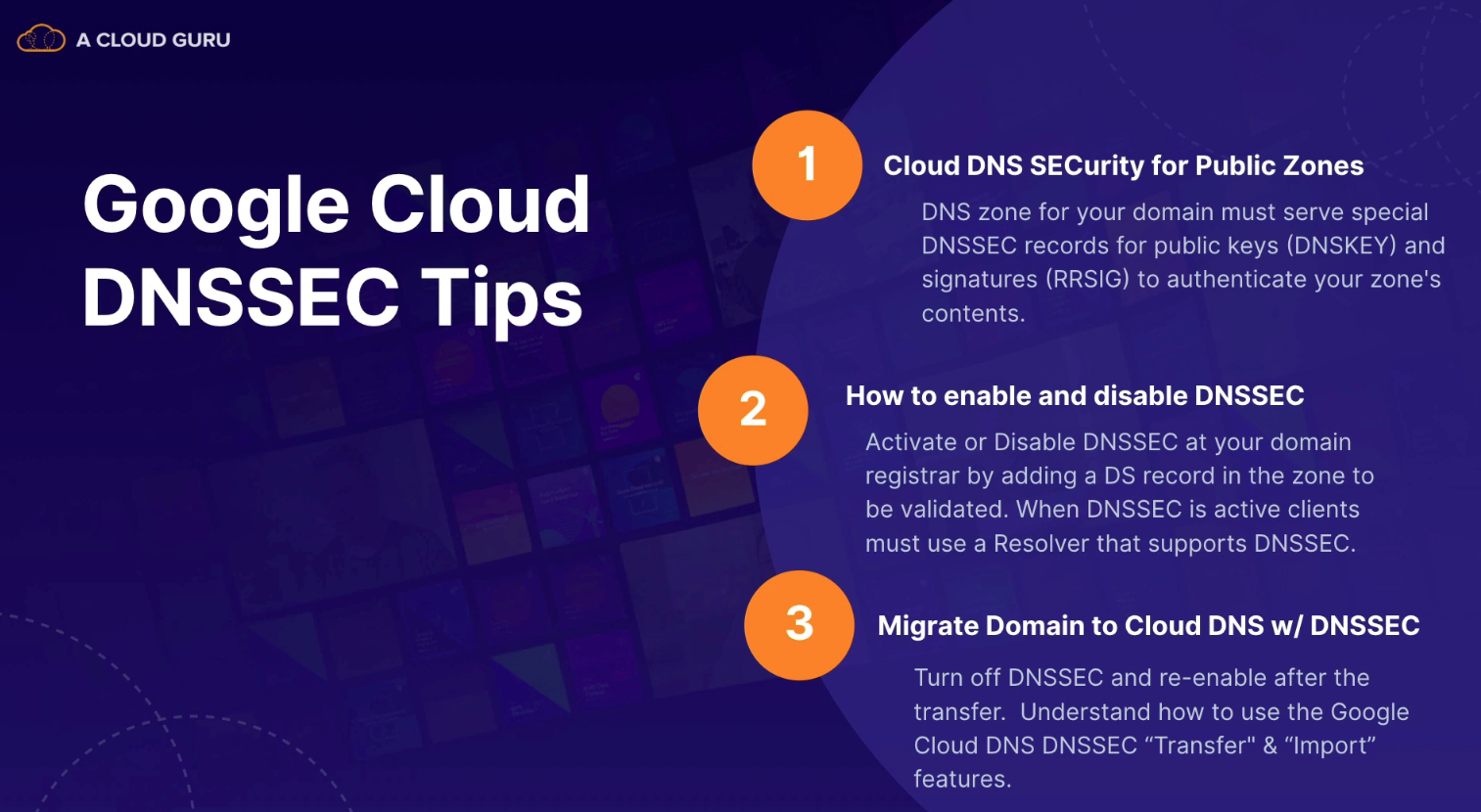

Protects public domain zone from spoofing or impersonation by a 3rd-party DNS server.

Cloud DNSSEC for Public Zones

- DNS zone for your domain must serve special DNSSEC records for public keys (DNSKEY) and signatures (RRSIG) to authenticate your zone's contents

- enabling

- disabling

- transfer

- create public zone

- export on-prem records

- import records

- update registrar

Migrating DNSSEC signed zones to GCP

- create public zone

- select DNSSEC as "Transfer"

- export DNS records (including original key)

- import records

- update registrar

- FINAL STEP: set DNSSEC to "On"

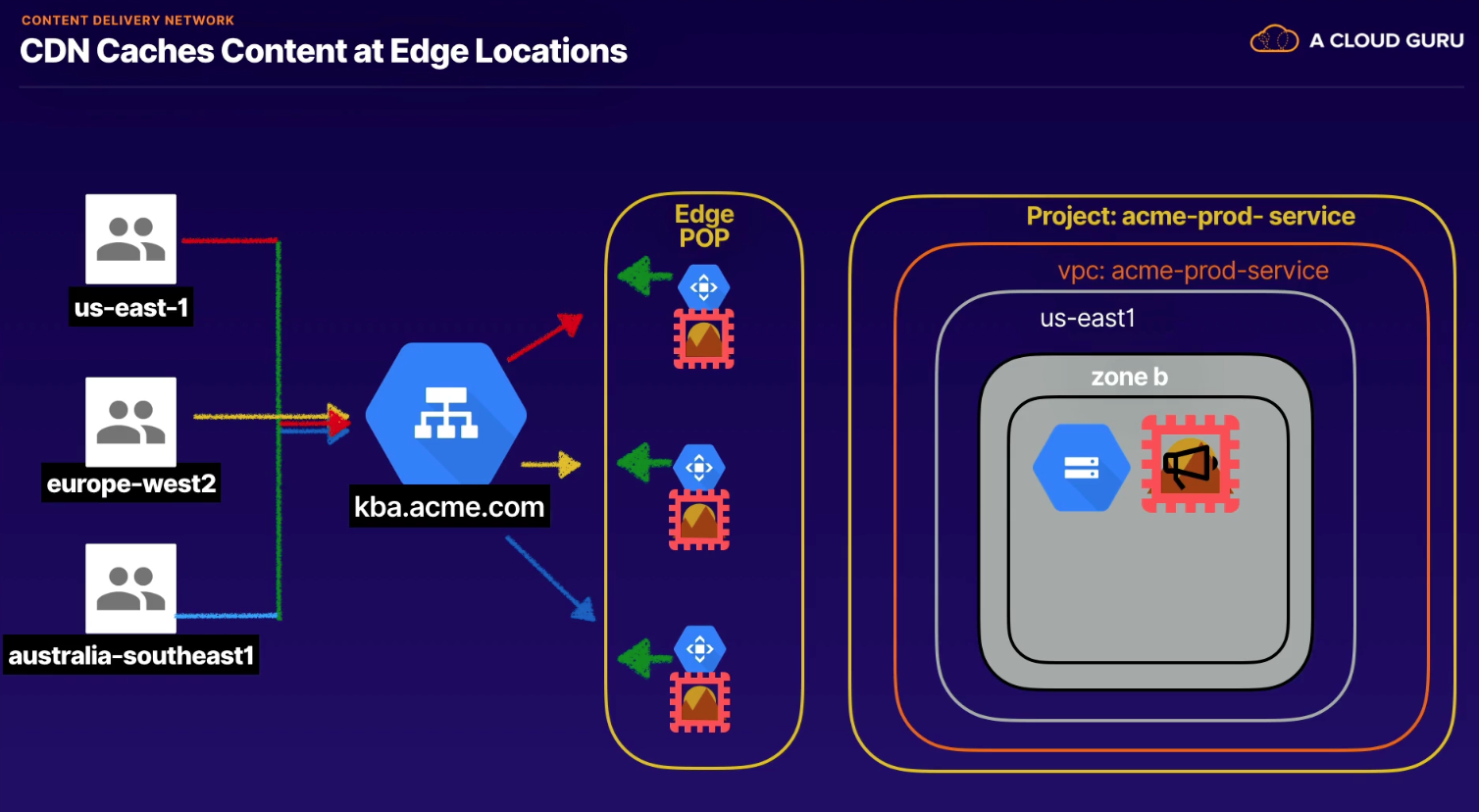

Shorten physical distance that data has to travel to get to our users to improve site rendering speed and performance. Stores a cached version of content in multiple geographical locations.

Requirements

- Premium Tier network (global network)

- Global HTTP(S) Load Balancer (fetches content from backends)

- Edge Location Cache Server

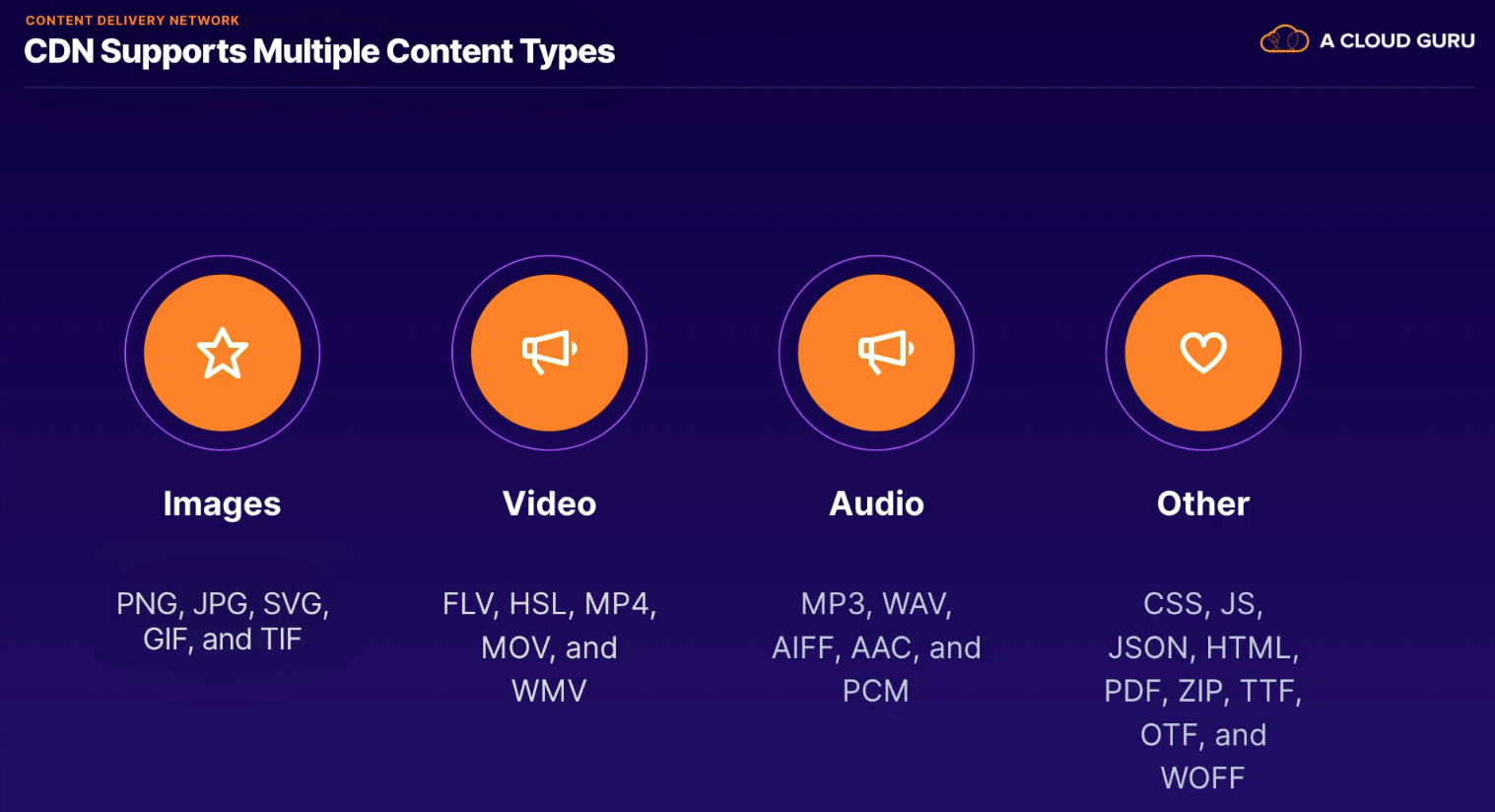

Types of content

Terminology

- Hit: the user request is served from the edge POP location

- Miss - if user request arrives and CDN does not have the file

- Fill: Cloud CDN initiates a request to the origin to fill the request

- no fill until request enters GCP network at that POP

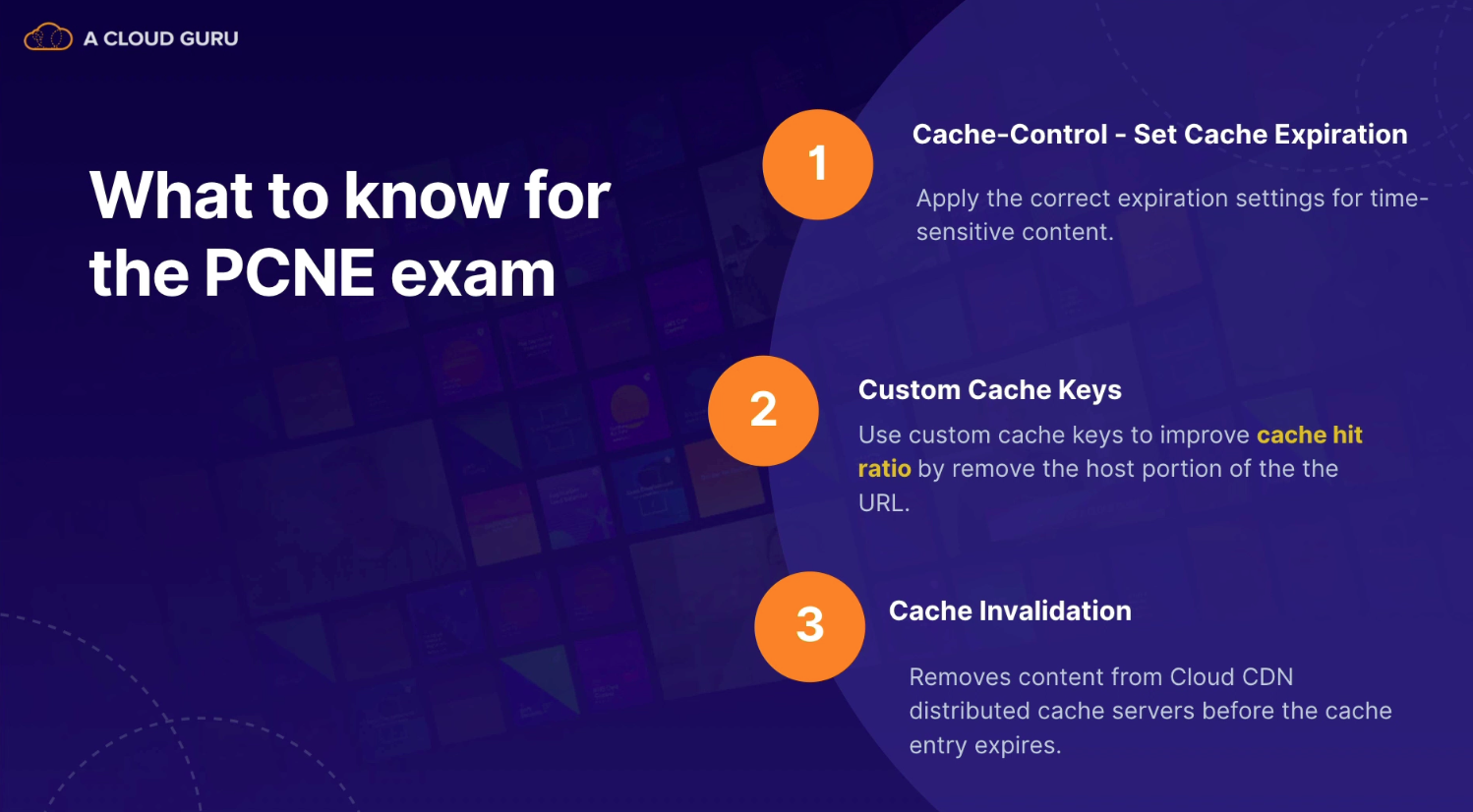

- apply correct expiration settings for time-sensitive content

- creating custom cache keys (e.g., remove hostname of URI and increase cache hit ratio)

- use Cache invalidation (use folder structure instead of individual files: 1 per minute rate)

- if want to invalidate before expire date

- caches only update changes to files (diffs only)


- settings

- s-maxage (priority)

- max-age

- Expires
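The precedence above can be expressed as a function that derives a freshness lifetime (TTL) from response headers. For a shared cache, `s-maxage` wins over `max-age`, which wins over `Expires`. This follows standard HTTP caching semantics (RFC 9111). The assumption is that Cloud CDN's result also depends on its cache mode and default/max TTL settings, which are not modeled here.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

def shared_cache_ttl(headers: dict, now: datetime):
    """TTL in seconds for a shared cache: s-maxage, then max-age, then Expires."""
    directives = {}
    for part in headers.get("Cache-Control", "").split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.lower()] = value
    for name in ("s-maxage", "max-age"):          # s-maxage takes priority
        if directives.get(name, "").isdigit():
            return int(directives[name])
    if "Expires" in headers:
        expires = parsedate_to_datetime(headers["Expires"])
        return max(0, int((expires - now).total_seconds()))
    return None  # no freshness info; CDN defaults would apply

now = datetime(2025, 12, 31, 23, 0, tzinfo=timezone.utc)
print(shared_cache_ttl({"Cache-Control": "public, max-age=600, s-maxage=3600"}, now))  # 3600
print(shared_cache_ttl({"Cache-Control": "public, max-age=600"}, now))                 # 600
print(shared_cache_ttl({"Expires": "Thu, 01 Jan 2026 00:00:00 GMT"}, now))             # 3600
```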

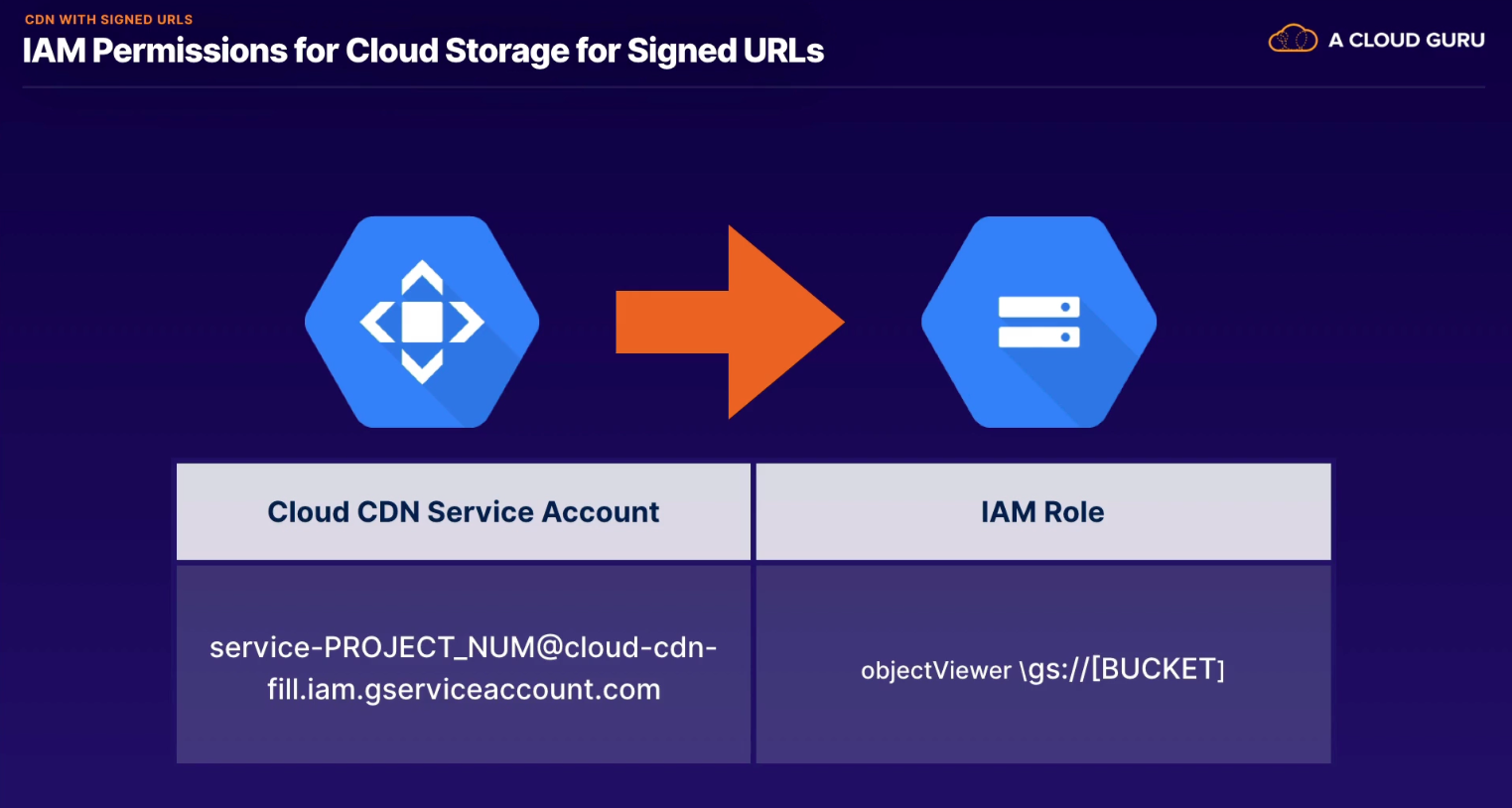

Give public temporary access to content without signing in.

- 128-bit key used for signing URL

- name

- creation method (automatic)

- cache-entry max age
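Signing works by appending an expiration time and key name to the URL, then an HMAC-SHA1 signature computed with the 128-bit key. Both the key and the signature use URL-safe base64. The sketch below follows the Cloud CDN signed-URL format as I understand it. The key name, key bytes, URL and timestamp are hypothetical demo values; a real key should come from a secure random source.

```python
import base64
import hashlib
import hmac

def sign_url(url: str, key_name: str, base64_key: str, expiration_unix: int) -> str:
    """Append Expires, KeyName and an HMAC-SHA1 Signature to a URL
    (URL-safe base64 for both the key and the signature)."""
    separator = "&" if "?" in url else "?"
    url_to_sign = f"{url}{separator}Expires={expiration_unix}&KeyName={key_name}"
    key = base64.urlsafe_b64decode(base64_key)
    digest = hmac.new(key, url_to_sign.encode("utf-8"), hashlib.sha1).digest()
    signature = base64.urlsafe_b64encode(digest).decode("utf-8")
    return f"{url_to_sign}&Signature={signature}"

# Hypothetical 128-bit key (16 bytes, URL-safe base64) and key name, demo only.
demo_key = base64.urlsafe_b64encode(bytes(range(16))).decode()
print(sign_url("https://cdn.example.com/video.mp4", "demo-key", demo_key, 1767225600))
```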

Observability is a measure of how well internal states of a system can be inferred from knowledge of its external outputs.

Log entries standard fields

- actor

- logType

- logName

- severity

- timestamp

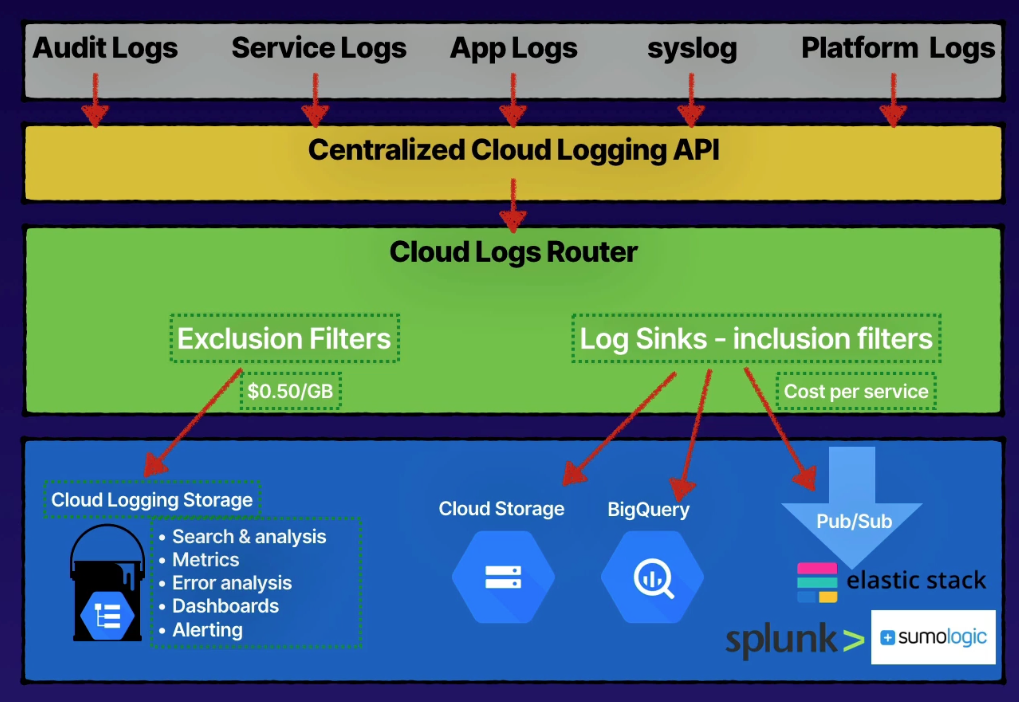

Components

- Logs

- Cloud Logging API

- Cloud Logs Router

- Exclusion filter

- Inclusion filter

- Log sinks

- Cloud storage

- BigQuery

- Pub/Sub (3rd-party exports too)

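The router's decision for each sink can be modeled like this. An entry is delivered when the sink's inclusion filter matches and none of its exclusion filters do. In this sketch, plain Python predicates stand in for Cloud Logging query-language filters. The entries, simplified `logName` values and sink names are hypothetical.

```python
# Hypothetical log entries (simplified logName values).
entries = [
    {"logName": "compute.googleapis.com/vpc_flows", "severity": "INFO"},
    {"logName": "cloudaudit.googleapis.com/activity", "severity": "NOTICE"},
    {"logName": "app/web", "severity": "DEBUG"},
]

# Hypothetical sinks: one inclusion predicate plus a list of exclusions.
sinks = {
    "audit-to-bigquery": {
        "include": lambda e: e["logName"].startswith("cloudaudit"),
        "exclude": [],
    },
    "default-bucket": {
        "include": lambda e: True,
        "exclude": [lambda e: e["severity"] == "DEBUG"],  # drop noisy debug logs
    },
}

def route(entry):
    """Sinks that receive the entry: inclusion matches and no exclusion matches."""
    return [
        name for name, s in sinks.items()
        if s["include"](entry) and not any(ex(entry) for ex in s["exclude"])
    ]

for e in entries:
    print(e["logName"], "->", route(e))
```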

- Log buckets

- `_Default` (30-day default retention)

- `_Required` (400-day retention, not configurable)

- Logging Admin - full control over all logging services

- Logs Configuration Writer - inclusion/exclusion filter configurability

- Logs Viewer - view non-private log data and configs

- Logs Writer - service accounts that write logs must have this role

- Private Logs Viewer - data access and transparency logs access

Cloud Logging Agent

- for 3rd party solutions/apps

- need the Logs Writer IAM role for the service account

- if on-prem or other cloud, need service account with private key

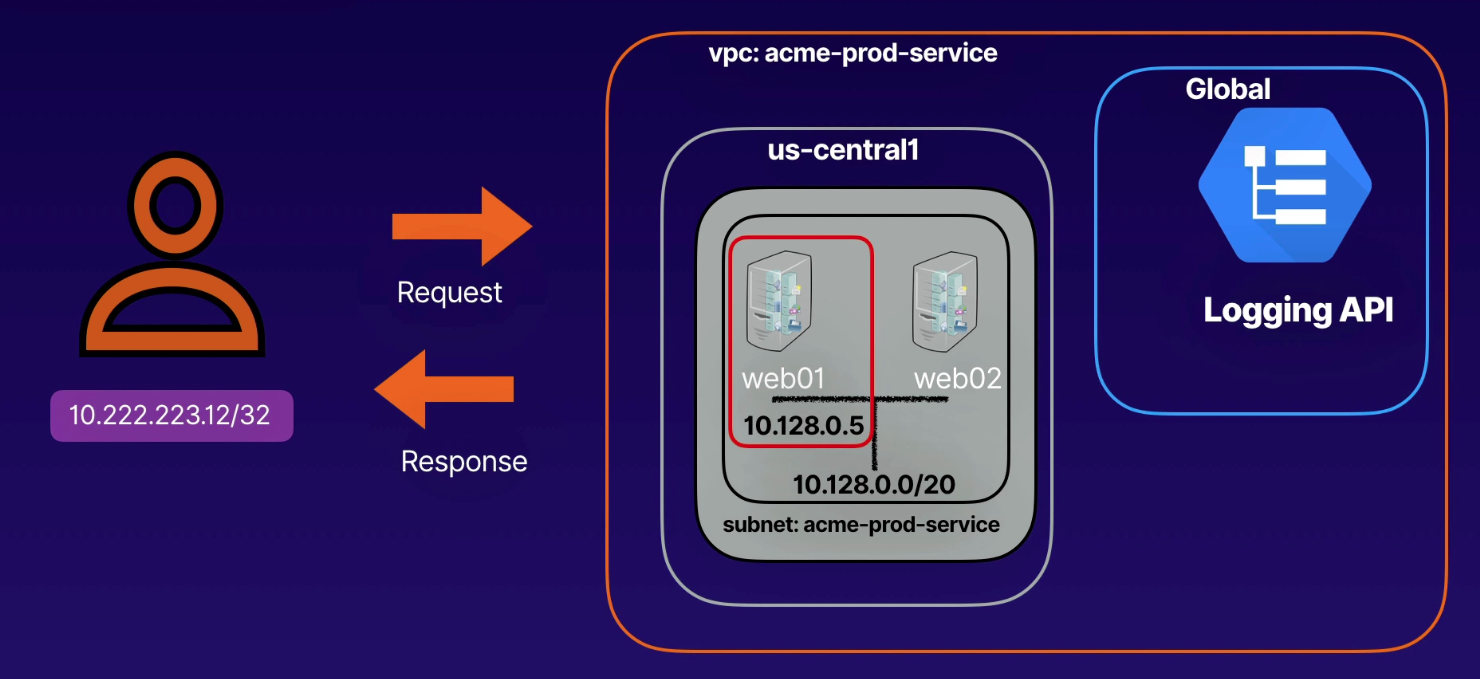

- VPC network monitoring

- Forensics

- Security analysis

- Cost control / forecasting

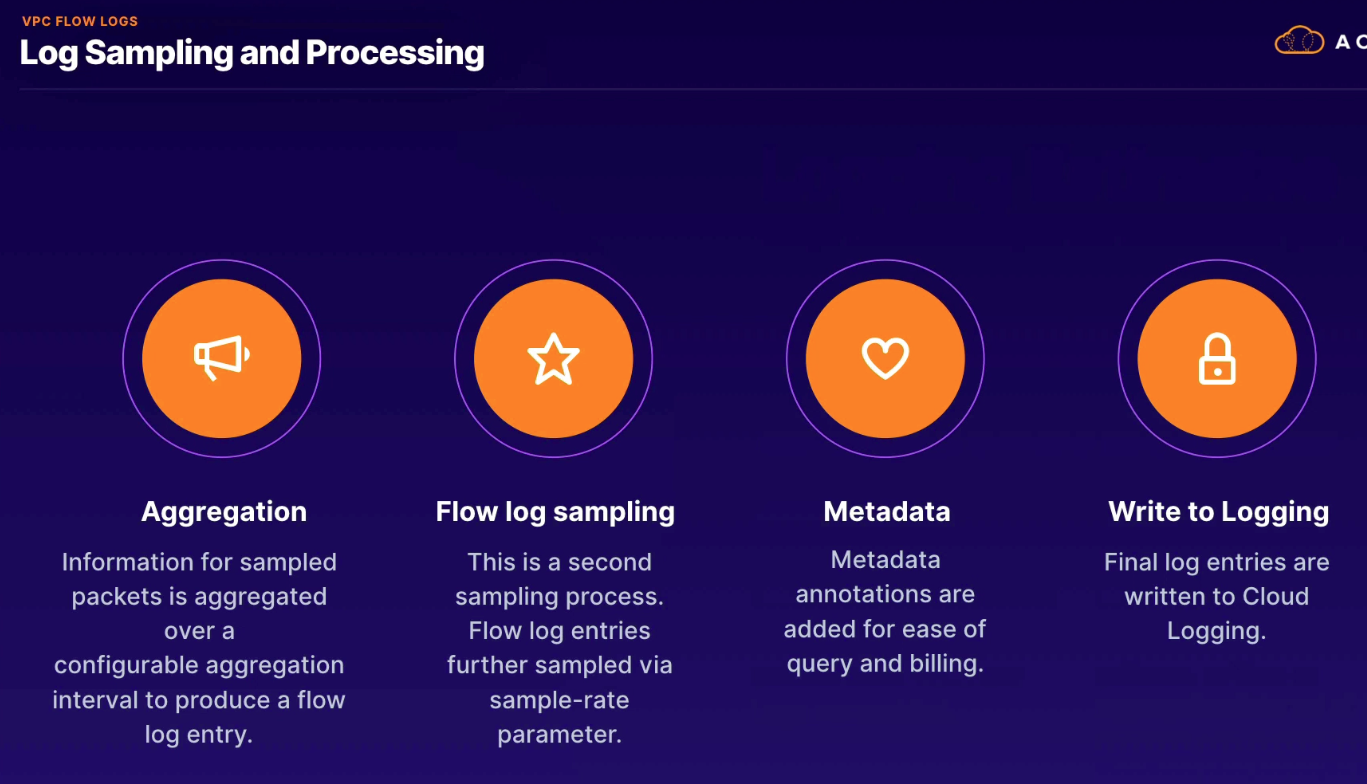

Provide a packet-level view into how our VPC network is functioning.

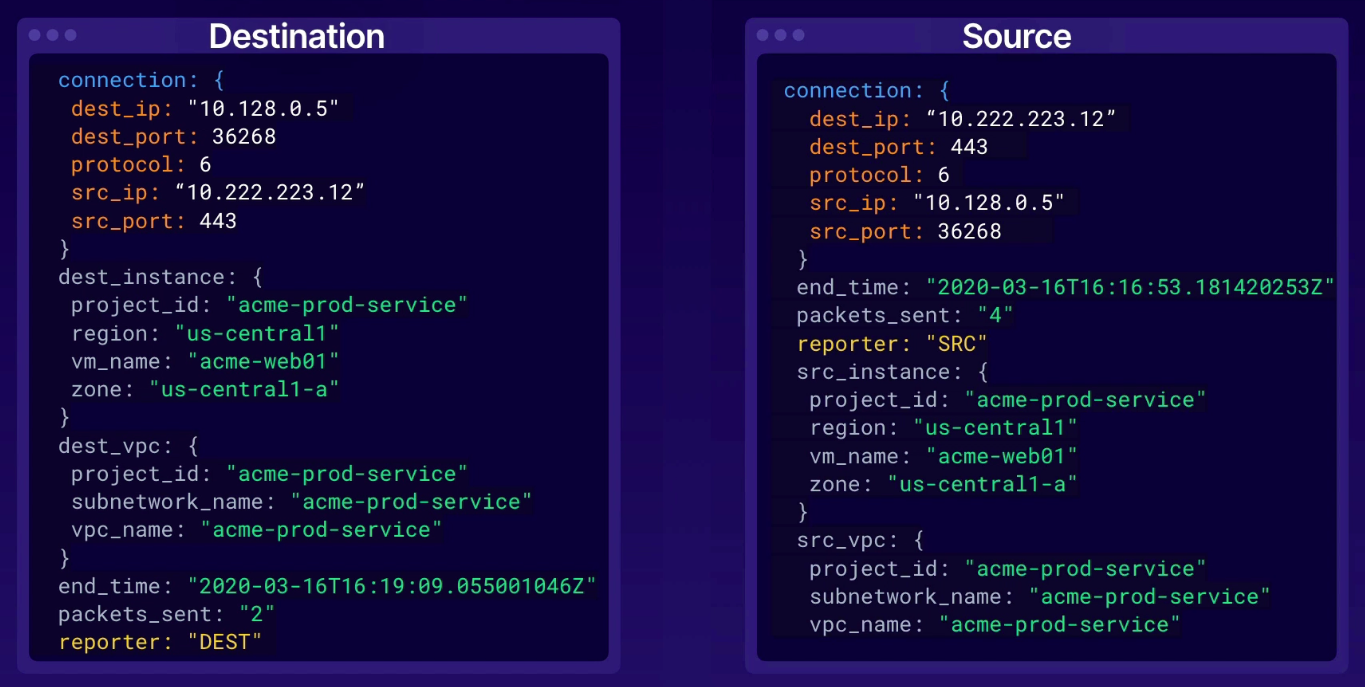

- 5-tuple (`dest_ip`, `dest_port`, `protocol`, `src_ip`, `src_port`)

Good to know:


both SRC and DEST info logged for every VM instance (even if within GCP network)

Cost control (aggregation, sampling)

- sampling controls how much is stored (default 50%; set it to 100% when troubleshooting to find issues quickly)

- can turn on/off as needed

- stored in `_Default` for 30 days; if you need longer retention, set up a log sink export
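The cost levers above can be illustrated conceptually. Aggregation collapses packets into one record per 5-tuple, and sampling keeps only a fraction of those records. This sketch is not how VPC Flow Logs sample internally. The packet data is hypothetical, and the random generator is seeded only to make the example reproducible.

```python
import random
from collections import Counter

def aggregate_and_sample(packets, sample_rate=0.5, seed=0):
    """Group packets into flow records keyed by 5-tuple, then keep each flow
    record with probability sample_rate (0.5 mirrors the 50% default)."""
    flows = Counter(
        (p["src_ip"], p["src_port"], p["dest_ip"], p["dest_port"], p["protocol"])
        for p in packets
    )
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return {flow: n for flow, n in flows.items() if rng.random() < sample_rate}

packets = [
    {"src_ip": "10.0.0.2", "src_port": 40000, "dest_ip": "10.0.1.5",
     "dest_port": 443, "protocol": "tcp"},
] * 3 + [
    {"src_ip": "10.0.0.3", "src_port": 53000, "dest_ip": "10.0.1.5",
     "dest_port": 53, "protocol": "udp"},
]
print(aggregate_and_sample(packets, sample_rate=1.0))  # every flow kept (troubleshooting)
print(aggregate_and_sample(packets))                   # roughly half the flows kept
```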

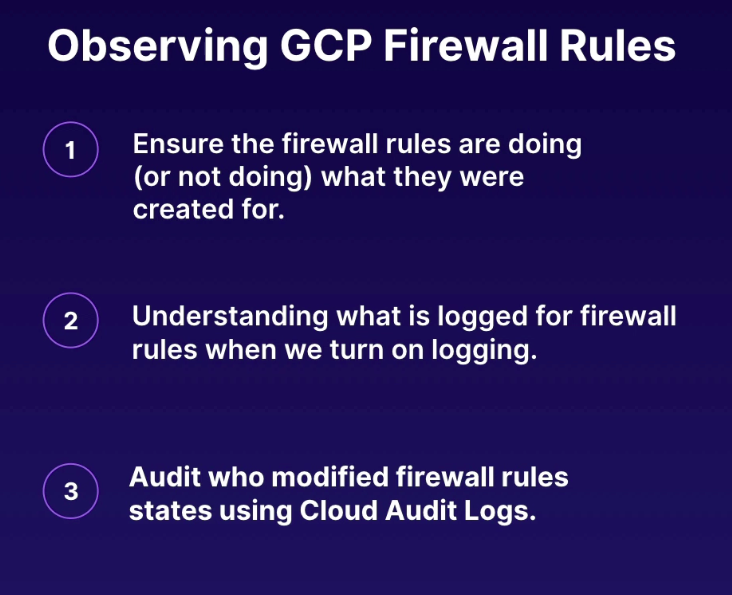

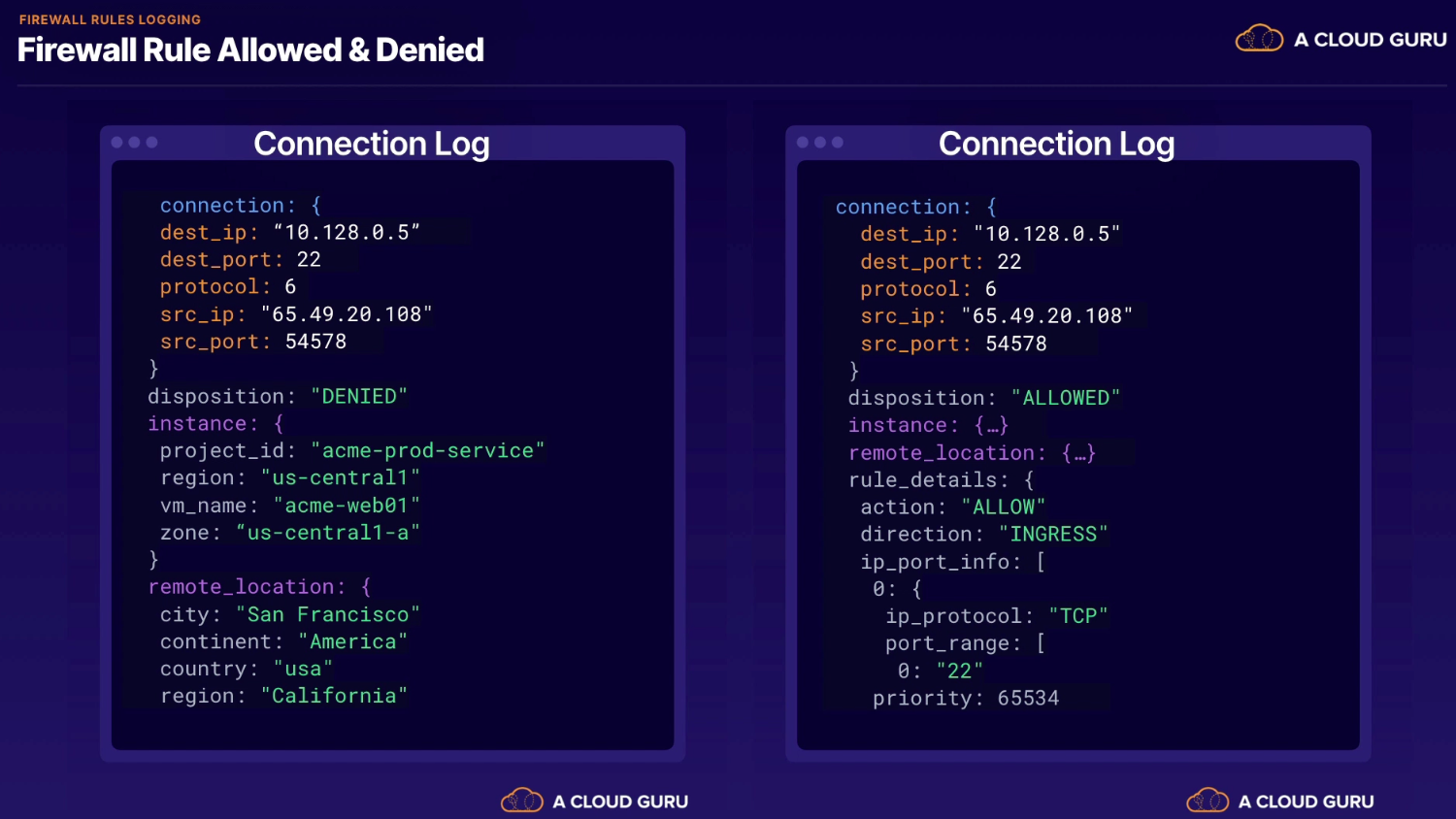

Allows us to verify and analyze the effects of firewall rules on our network traffic.

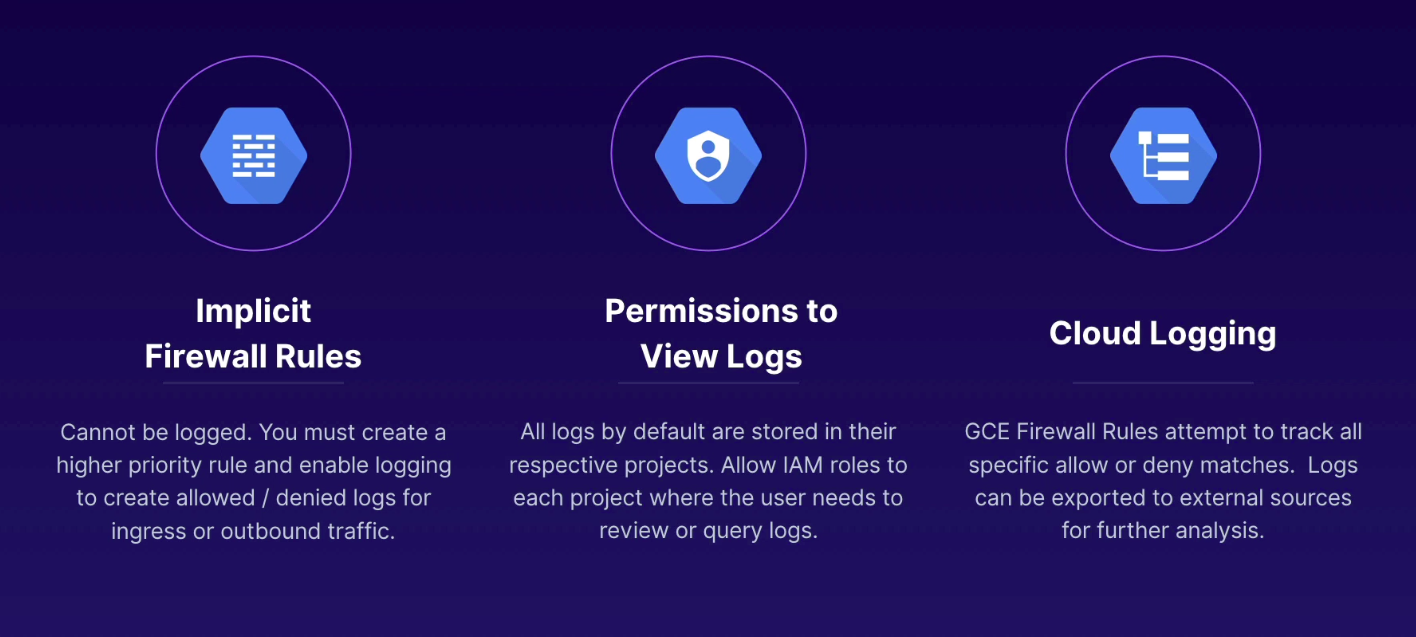

IAM roles to enable firewall rule logging

- compute admin

- compute security admin

- owner or editor

IAM roles for firewall rule Viewing

- logging viewer

- owner, editor, or viewer (primitive roles)

Default rules

- ingress - deny all - 65535 priority (not logged and not editable)

- egress - allow all - 65535 priority (not logged and not editable)

- to log:

- create similar rule with higher priority (any priority with lower number than defaults)

WARNING / Troubleshooting

- logging a deny-all rule can generate a lot of log entries (cost)

- only works for TCP/UDP and no other protocols (e.g. ICMP)

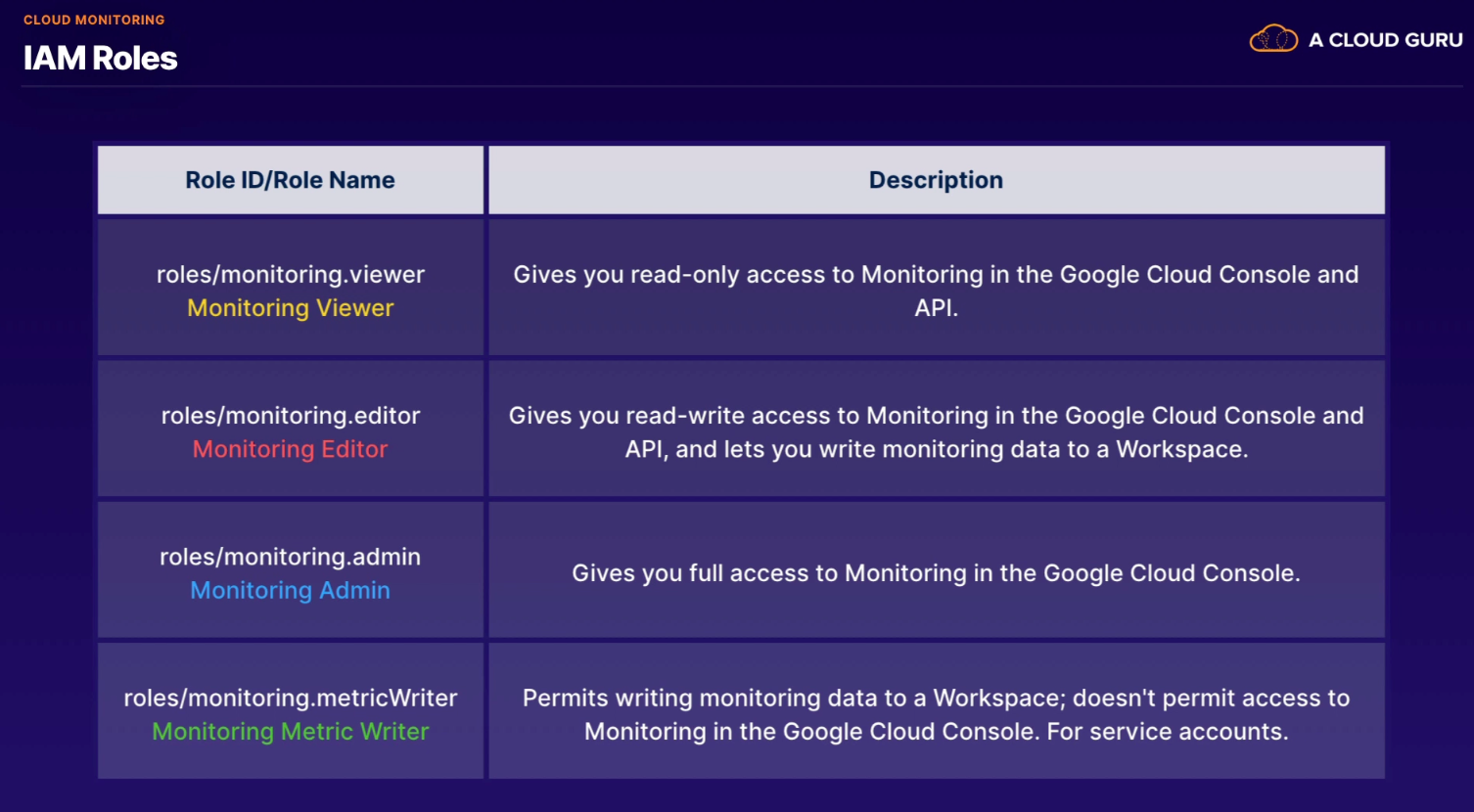

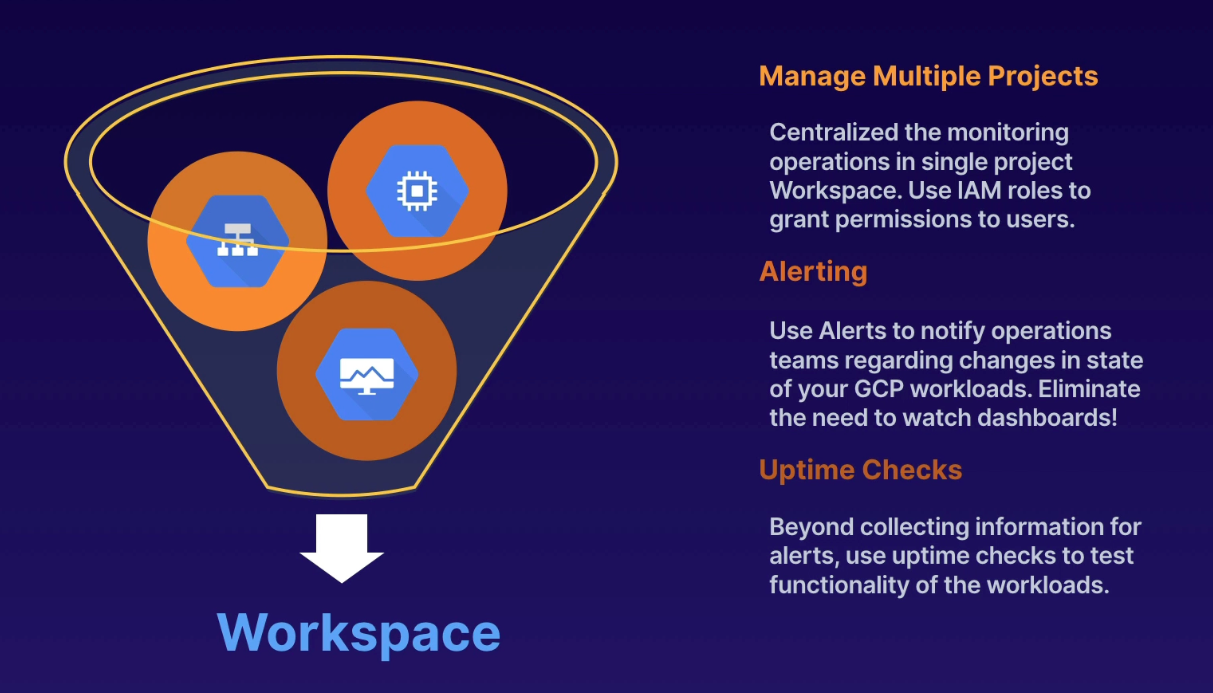

We create pictures of what is going on; proactive and predictive operations. By collecting measurements over time, we can predict the pattern of events or take automated action based on the state of the environment.

Components

- Dashboards

- Uptime Checks

- Alerting Policies

- Notification Channels

- Groups

- Monitored resource (can be CPU, disk IDs)

- Metric types (e.g., "gauge")

- Time-series (points: collection of metrics over time)

Workspace

- single project for IAM permissions, agents, users

- takes name of project where created

- can monitor up to 100 GCP projects

- best practices

- create separate project for Workspace

- install monitoring agent on all GCP resources

IAM Role for VM service account

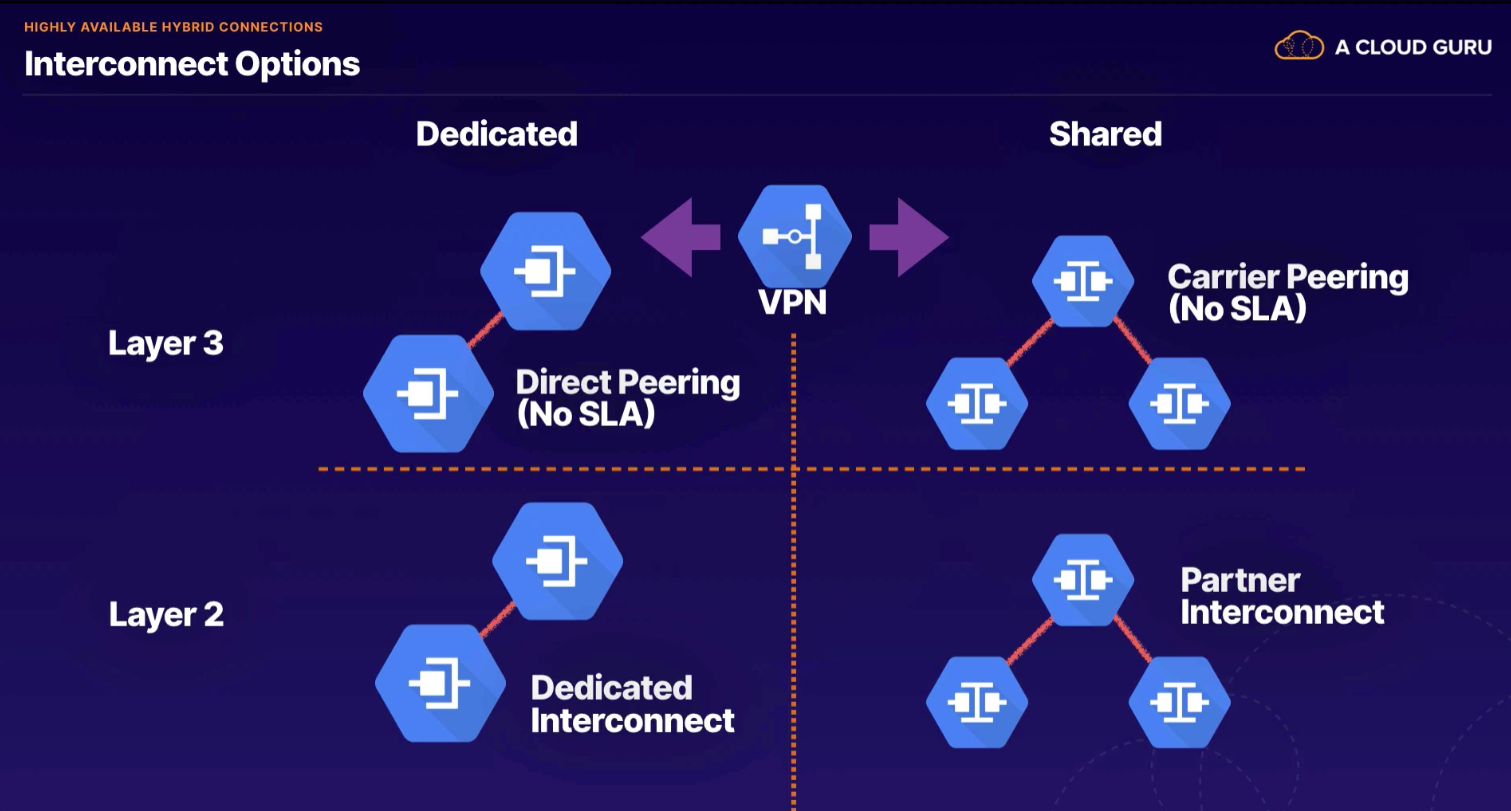

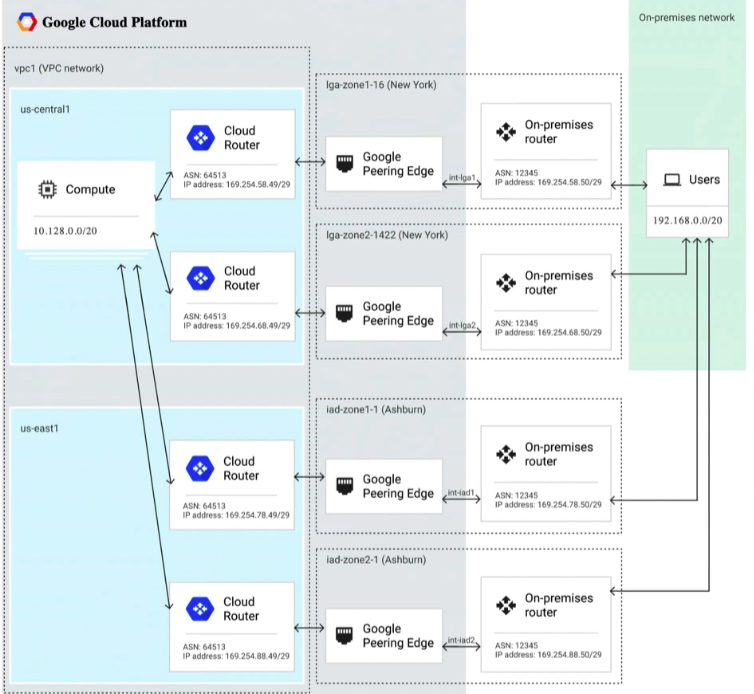

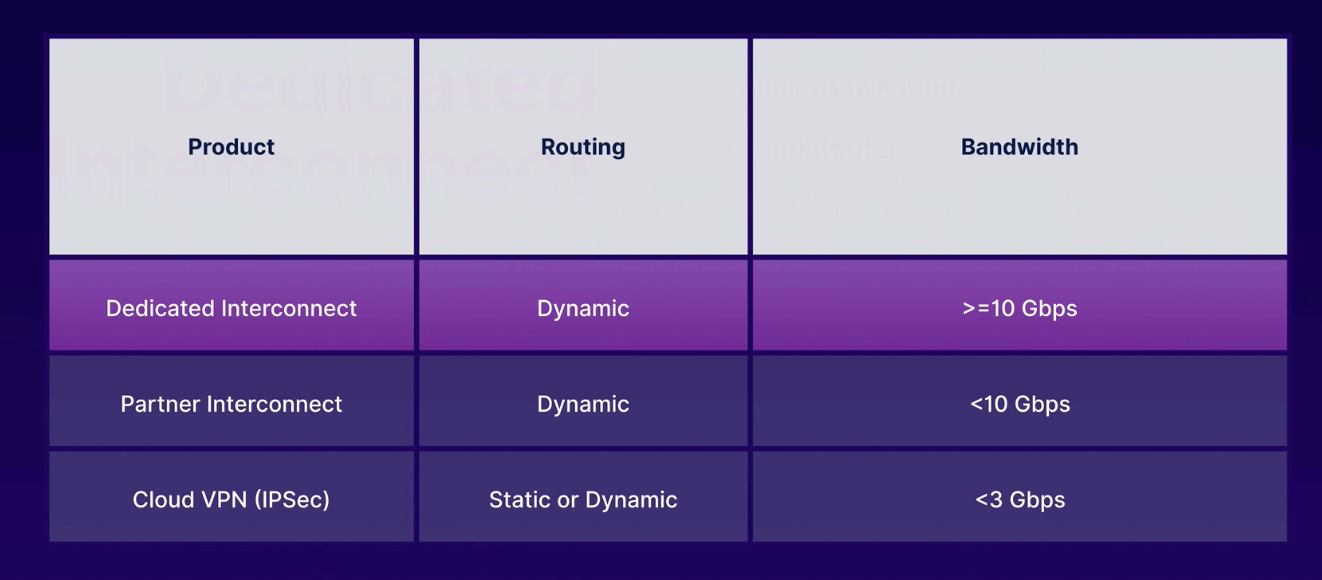

Establishing private connections between our VPC network and existing on-premise or multi-cloud network infrastructure.

- VPN - lets you securely connect GCP resources to your own private network

- uses IKEv1 or IKEv2 to establish IPSec connectivity

- Interconnect - lets you establish high bandwidth, low latency connections between your GCP networks and on-premises infrastructure

- Cloud Routers - enable dynamic route updates (BGP) between your VPC network and non-Google network

- Regional

- Global

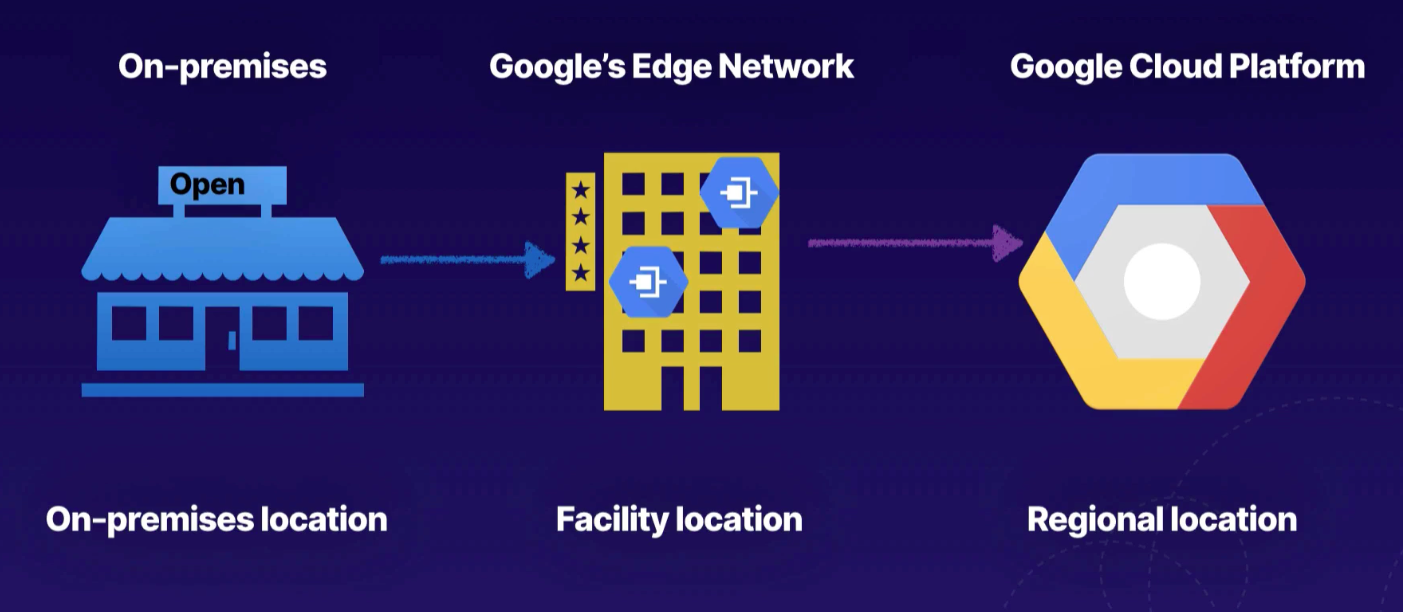

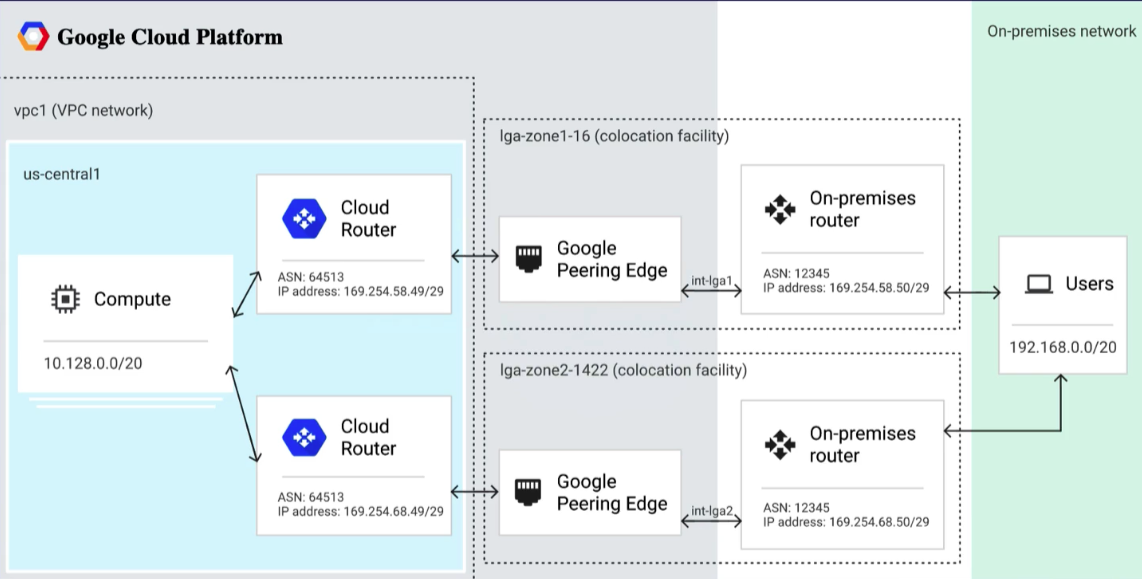

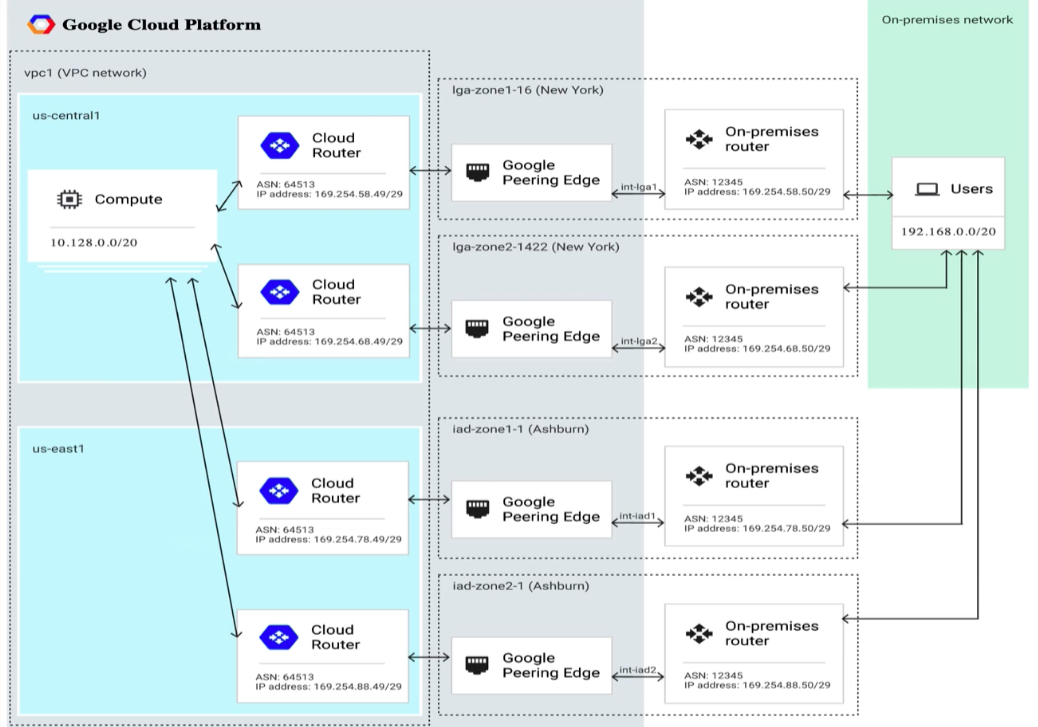

- On-premises - Colocation Facility (Edge POP) - Google Cloud Platform

- colocation facility providers establish circuit between colo and GCP establishing layer 2 connectivity

- each "metro" metropolitan area

- select colocation facility and metro where the Interconnect will live

- select location close to on-premise location to reduce latency

- each metro supports a subset of regions

- more cost effective to avoid inter-region egress costs

- each colocation facility supports specific regions

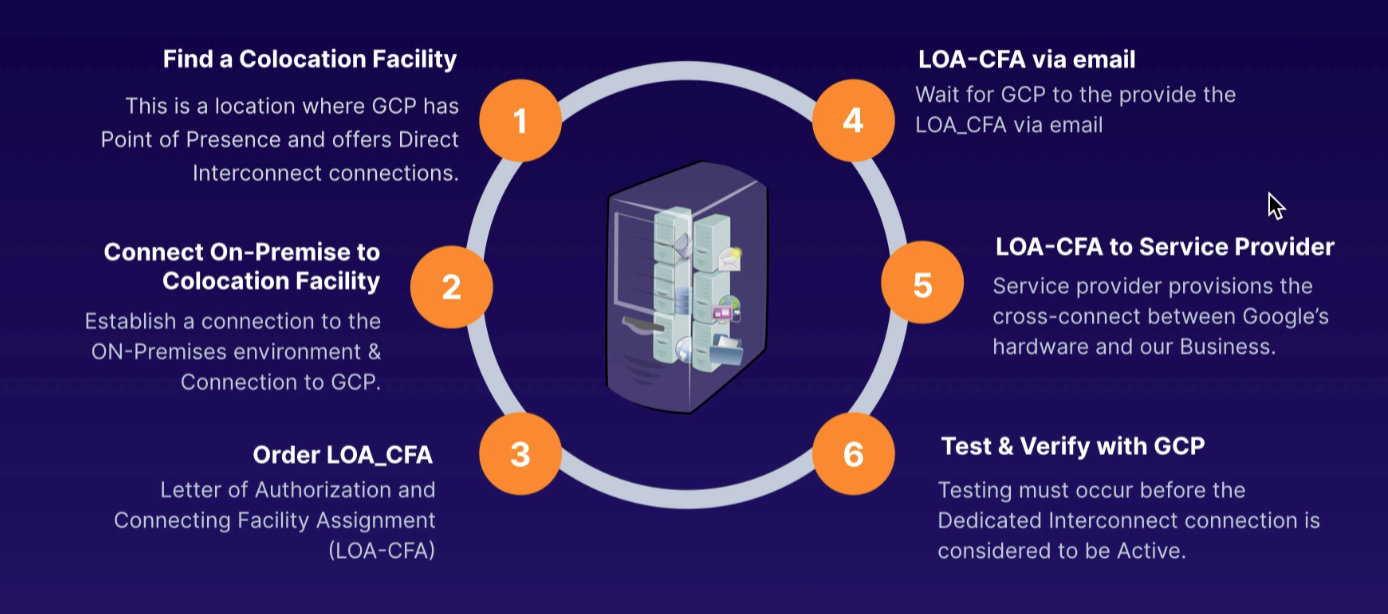

LOA-CFA

- Letter of Authorization and Connecting Facility Assignment (LOA-CFA)

- Google emails you the LOA-CFA, which you send to the facility provider so they can provision the cross connect between Google and the provider

Edge Availability Domain

- Each colocation facility has at least 2 edge availability domains

- prevents outages, as only 1 is taken down for maintenance at any one time

Network Service Provider

- Colocation facility vendor

- enables establishing shared connectivity between provider and GCP

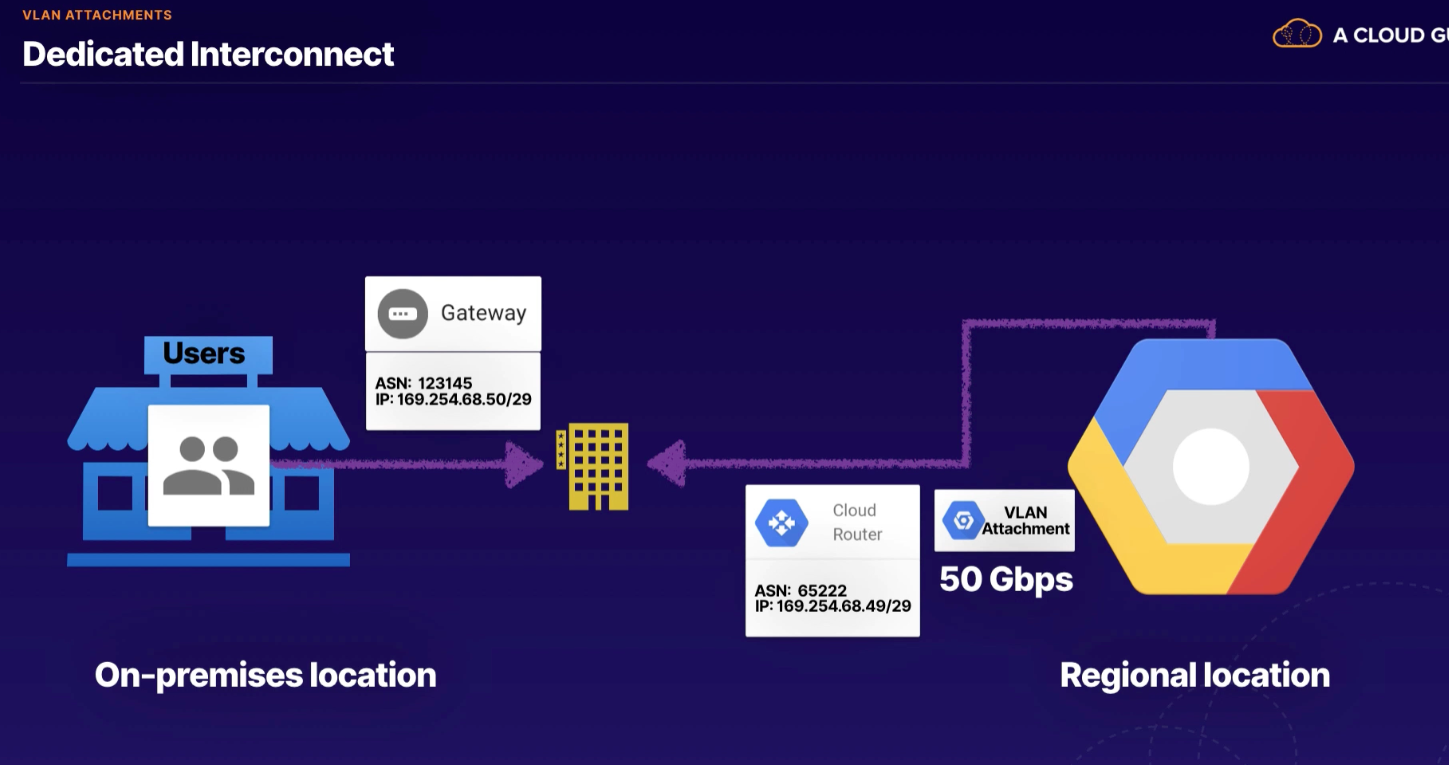

Dedicated Interconnect

- colo provider provisions circuit between our provider and Google's Edge POP

Partner Interconnect

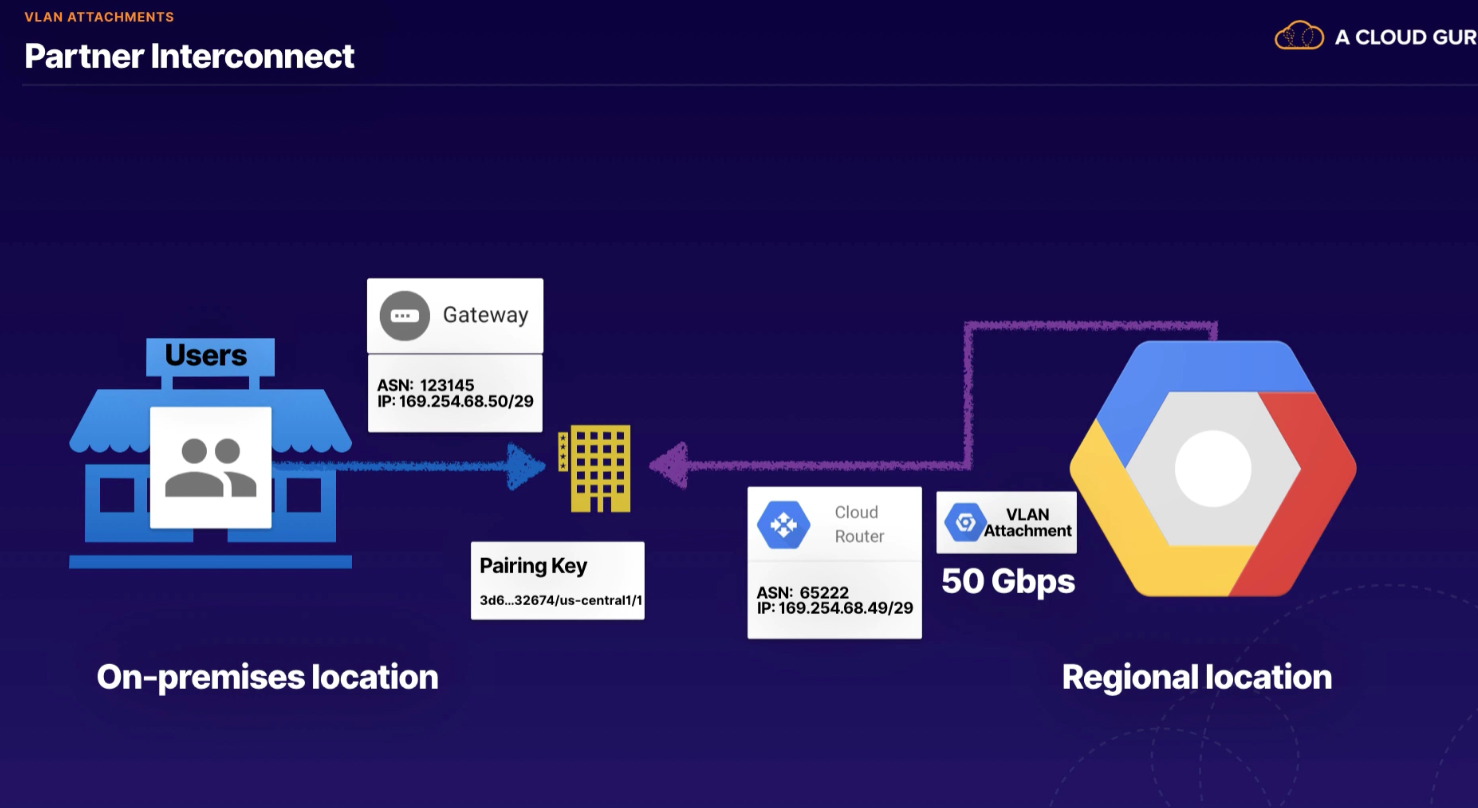

- useful if our datacenter is in a physical location that cannot reach a Google partner colocation facility

- or if our bandwidth needs don't justify a full 10 Gbps Dedicated Interconnect circuit

VPN IPSec

- 3 Gbps per tunnel, up to 8 tunnels

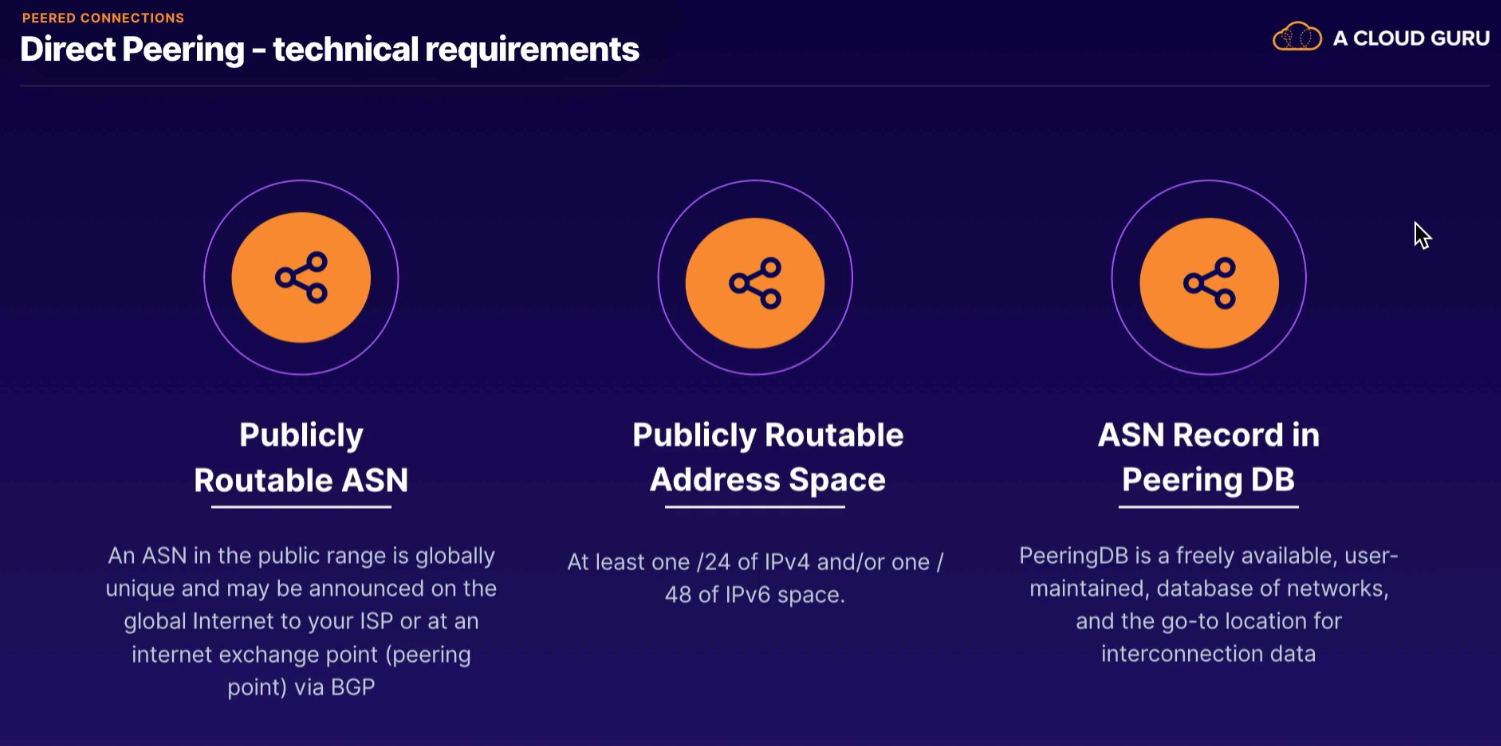

Direct Peering

- exchange routes and next hop is Google Global network; default IGW or VPN tunnel

- discount egress rates (no charge for peering)

Carrier Peering

- works same as direct peering

- uses a shared network from a GCP service provider

- Connect your on-premise network to Google Cloud network by connecting new fiber to your equipment

- LOA-CFA specify bandwidth needs for the business

- establish BGP session between on-premises router and GCP Cloud Router

Order

- name

- location

- suggest 2nd for SLA for redundancy

Redundancy

- company name

- technical contact

VLAN Attachment (can add to existing)

- Allocates a VLAN on an Interconnect connection and associates that VLAN with a specific Cloud Router

- name

- Cloud Router

- VLAN ID

- Allocate BGP IP address

- Bandwidth (max 50 Gbps)
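The attachment fields above can be modeled as a small record with basic checks. A sketch only: `VlanAttachment` is a hypothetical type, not a Google Cloud API object; the 50 Gbps cap comes from these notes, and the 1-4094 range is the generic 802.1Q limit (Google Cloud may allow a narrower range):

```python
from dataclasses import dataclass

MAX_ATTACHMENT_GBPS = 50  # per these notes

@dataclass
class VlanAttachment:
    """Hypothetical record mirroring the console fields; not an SDK type."""
    name: str
    cloud_router: str
    vlan_id: int
    bandwidth_gbps: float

    def validate(self) -> None:
        # 802.1Q reserves VLAN IDs 0 and 4095, leaving 1-4094.
        if not 1 <= self.vlan_id <= 4094:
            raise ValueError(f"VLAN ID {self.vlan_id} outside 1-4094")
        if not 0 < self.bandwidth_gbps <= MAX_ATTACHMENT_GBPS:
            raise ValueError(f"bandwidth {self.bandwidth_gbps} Gbps above {MAX_ATTACHMENT_GBPS}")

att = VlanAttachment("attach-1", "router-1", vlan_id=100, bandwidth_gbps=10)
att.validate()  # no exception: both fields are in range
```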

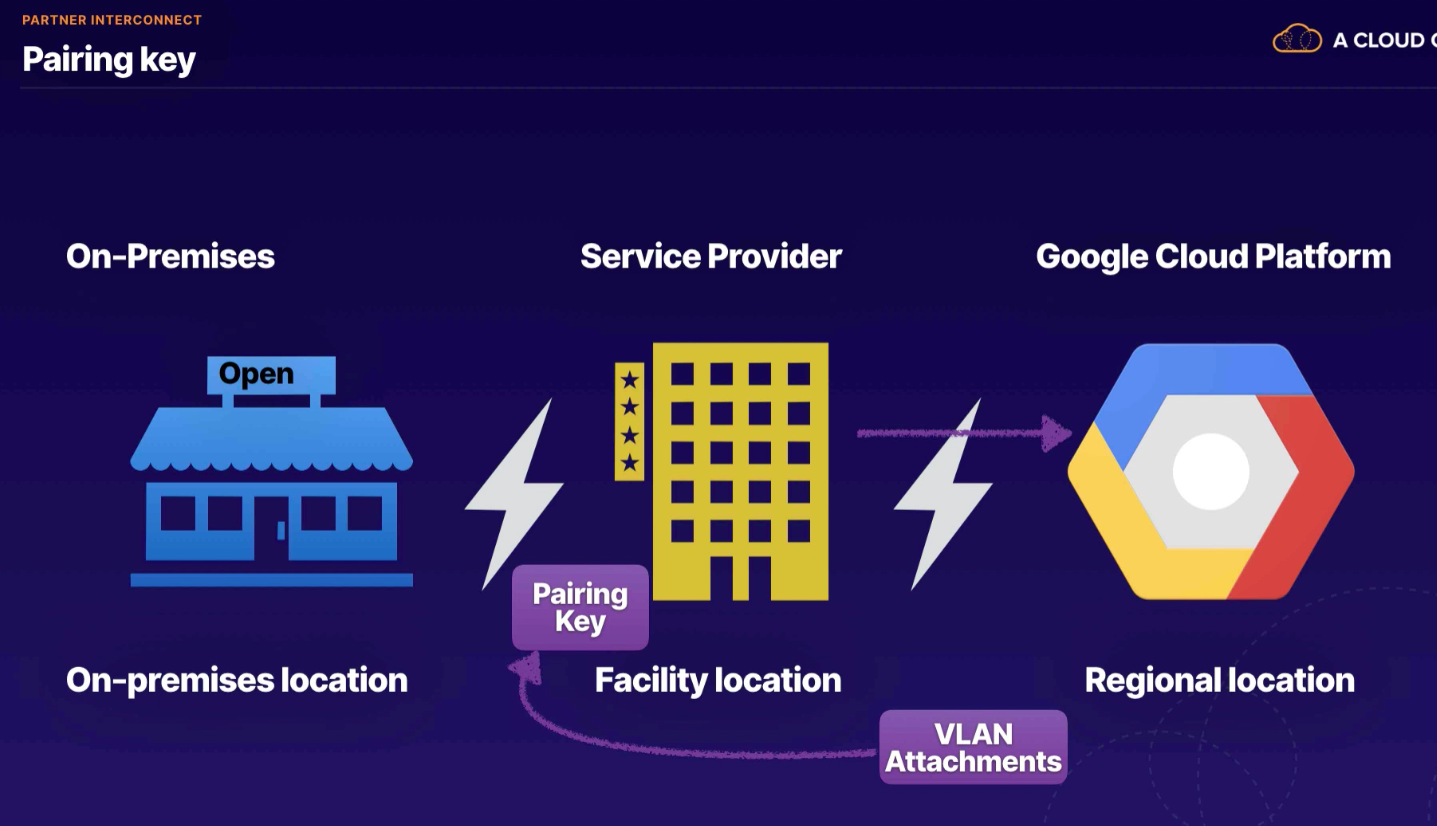

Provide connectivity between GCP and our network through a 3rd-party provider

- should still use 2 edge availability domains (zones) and 2 connections for high availability

Setup

- select colocation facility

- connect on-premises network to facility (closest to our network, and GCP region)

- some offer layer 2, some layer 3

- layer 3 (TCP/IP) connectivity is the most common

- check connection

- have provider

- find a service provider

- check VPC network

- region

- VLAN attachment name

- Cloud Router

- advertise all subnets OR

- custom routes (also can advertise all, plus additional)

- when we add a VLAN attachment, Google generates a one-time pairing key that the service provider uses to set up the connection

- provide pairing key to partner

- no LOA-CFA needed, since the provider already has a connection with Google

- layer 2 needs

/24 range and ASN

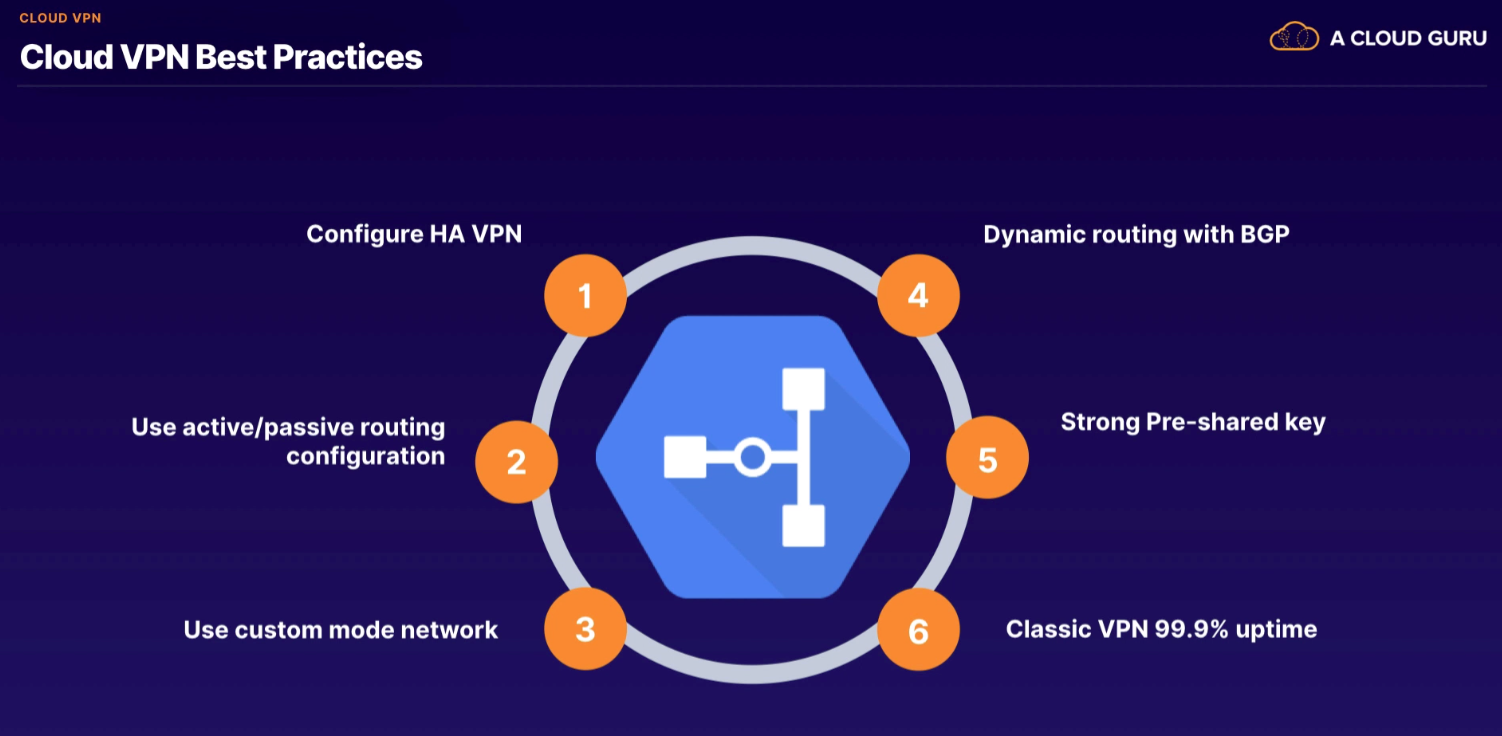

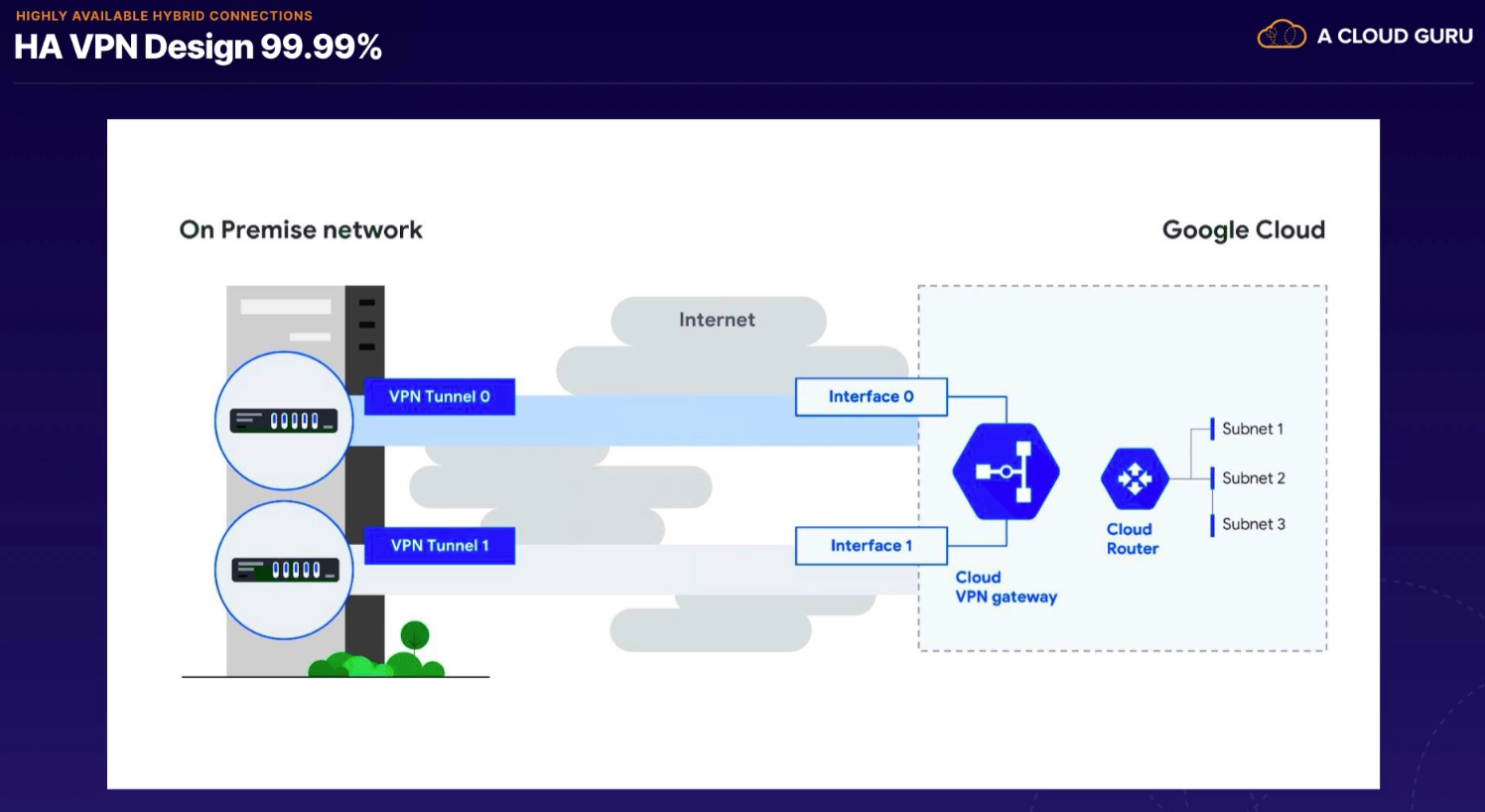

- need Cloud VPN and Cloud Router

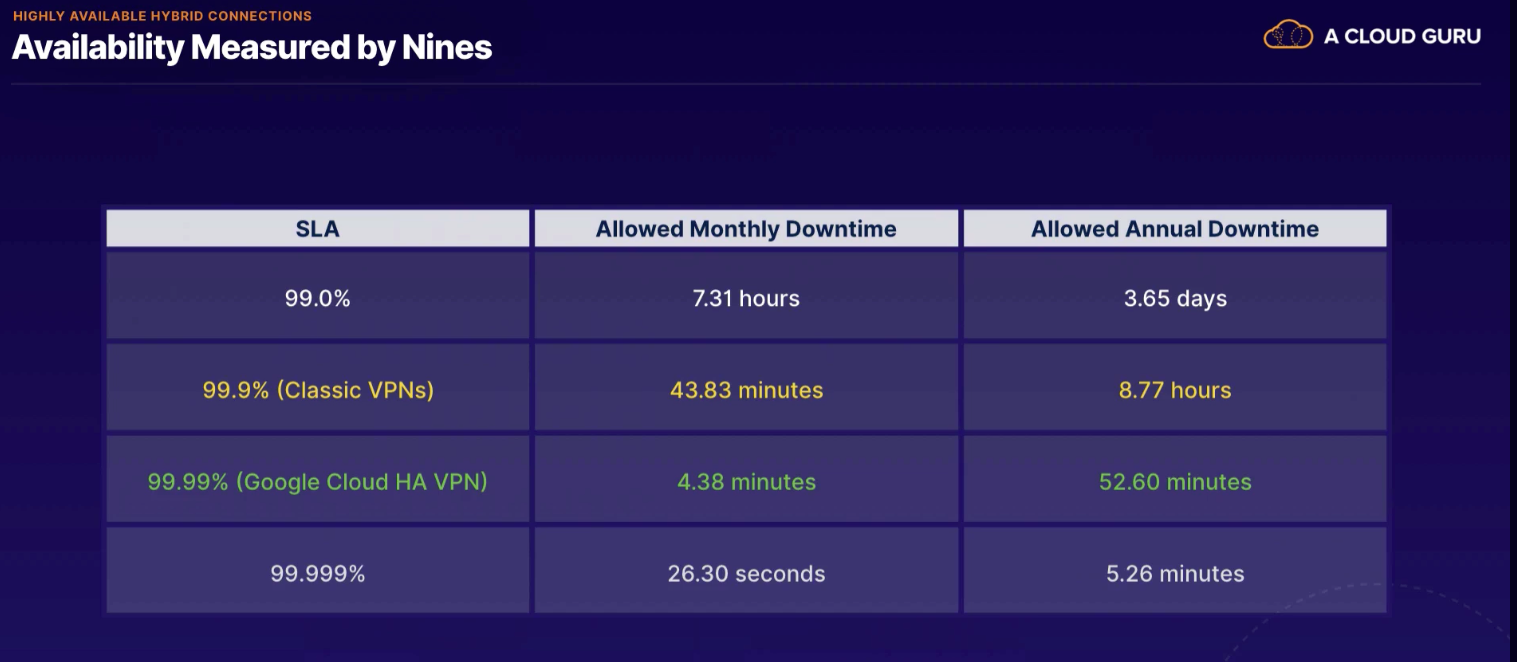

- IPSec = Internet Protocol Security

- traffic over public internet, but authenticates and encrypts traffic on both sides

- 99.99% SLA

- VPN Gateway (interfaces)

HA VPN active/passive (recommended)

- in the event of a failure, the remaining tunnel still has enough capacity

Need to know:

- difference between 99.9% and 99.99% availability

- ASN = autonomous system number

- MTU 1460 default (can adjust to avoid fragmentation, which adds latency)

- weigh cost implications

- it is against the terms of service to connect two on-premises networks to each other through Cloud VPN
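The gap between 99.9% and 99.99% is easiest to see as allowed downtime. A minimal sketch, assuming a 30-day month (real SLAs define their own measurement window); `monthly_downtime_minutes` is a hypothetical helper:

```python
# 30-day month assumed: 30 * 24 * 60 = 43,200 minutes.
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60

def monthly_downtime_minutes(availability_pct: float) -> float:
    """Minutes per month a service may be down and still meet the target."""
    return MINUTES_PER_30_DAY_MONTH * (1 - availability_pct / 100)

for sla in (99.9, 99.99):
    print(f"{sla}% -> {monthly_downtime_minutes(sla):.2f} min/month")
# 99.9% -> 43.20 min/month
# 99.99% -> 4.32 min/month
```

Each extra nine cuts the budget by a factor of ten, which is why 99.99% designs need redundant gateways and connections rather than a single path.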

Used with both dedicated and partner interconnect

- need physical connection between our network and GCP colocation facility

- after connection setup, can use cloud console to set up VLAN attachment

- binds our Cloud Router to our VPC and lets it send routes

- not redundant

- need separate cloud router in different region

- for Partner Interconnect, creating the VLAN attachment generates a pairing key (then wait for the service provider)

- layer 3

- for layer 2, need dedicated connection, ASN

Setup

- configure interconnect

- add VLAN attachment

- select project

- name

- router

- VLAN ID

- capacity (1Gb/s)

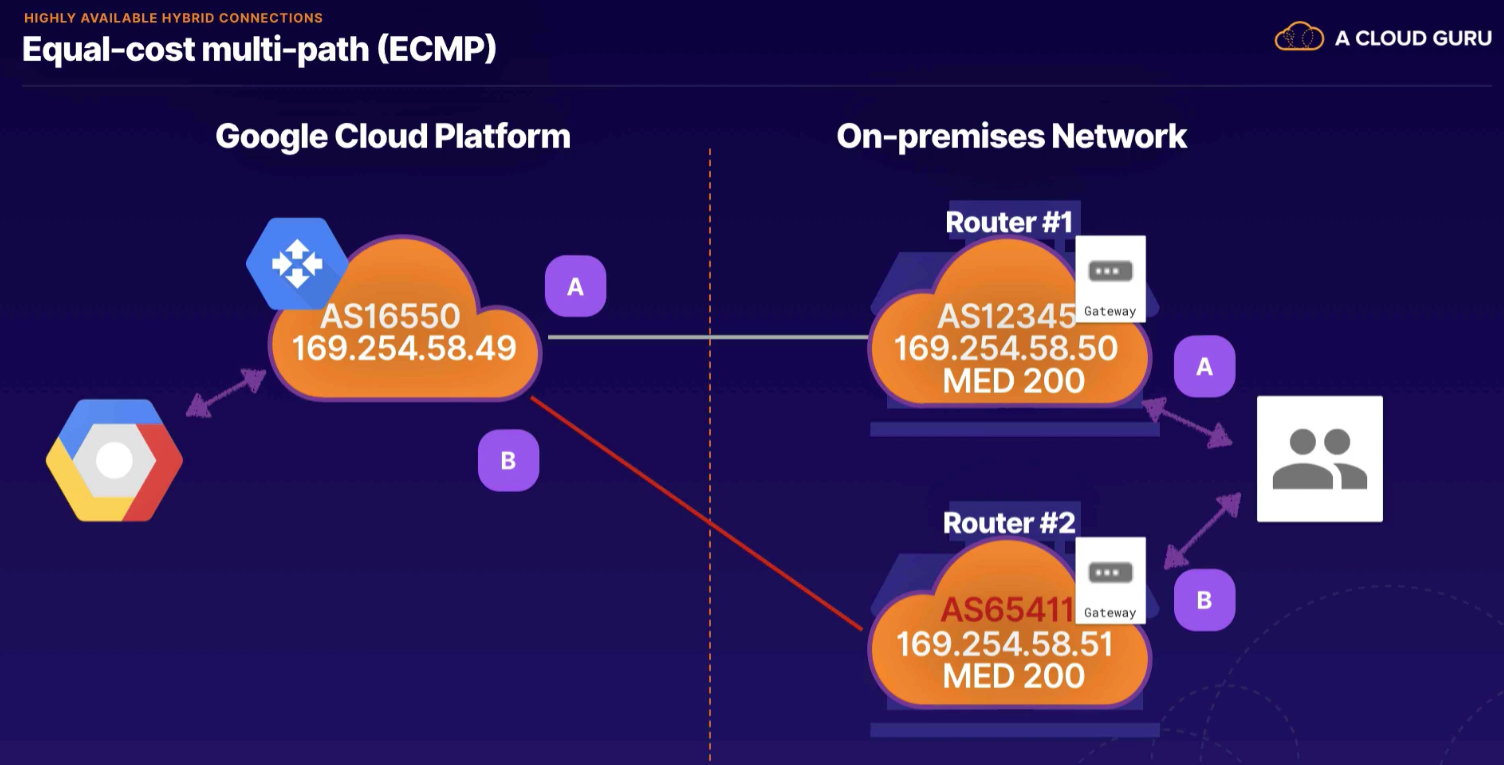

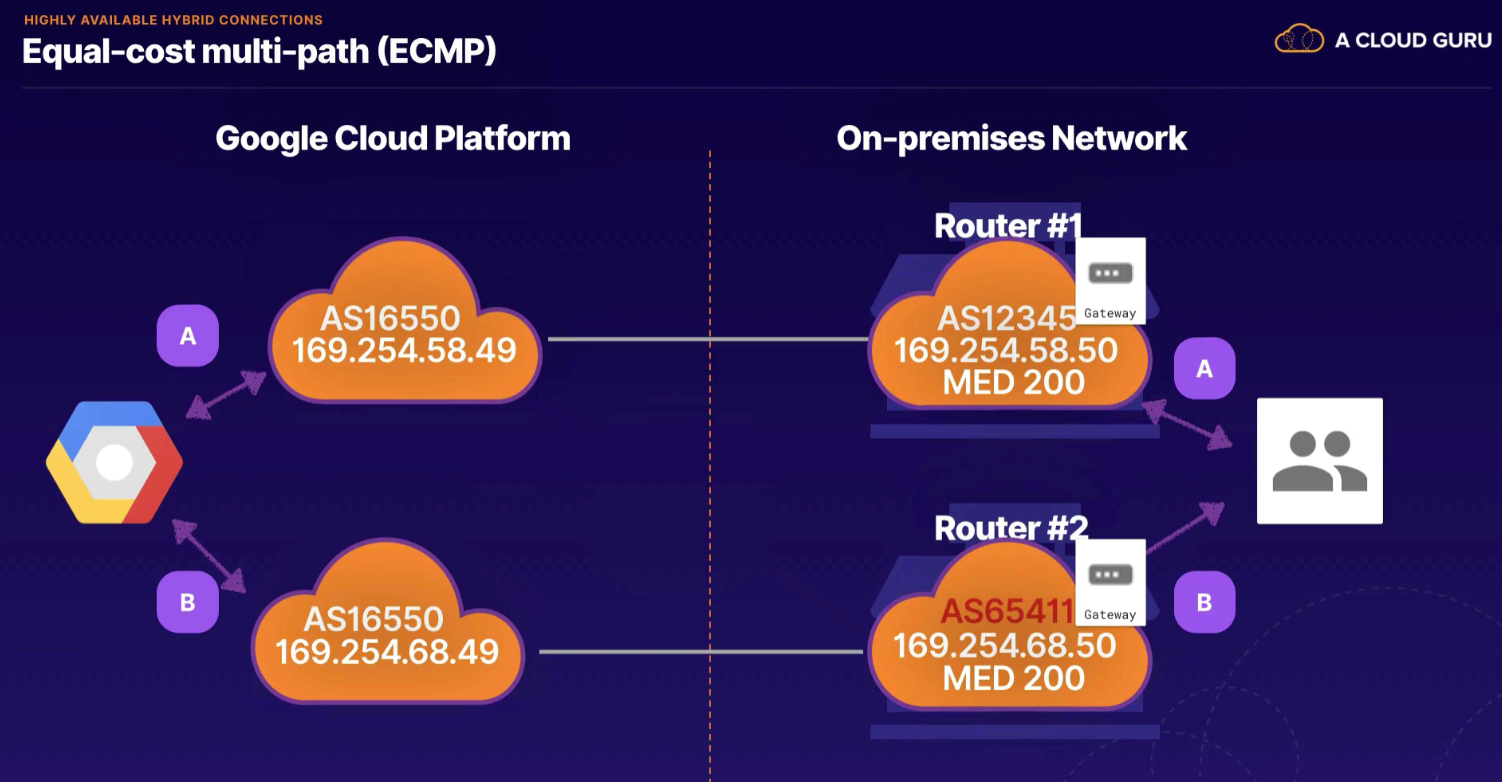

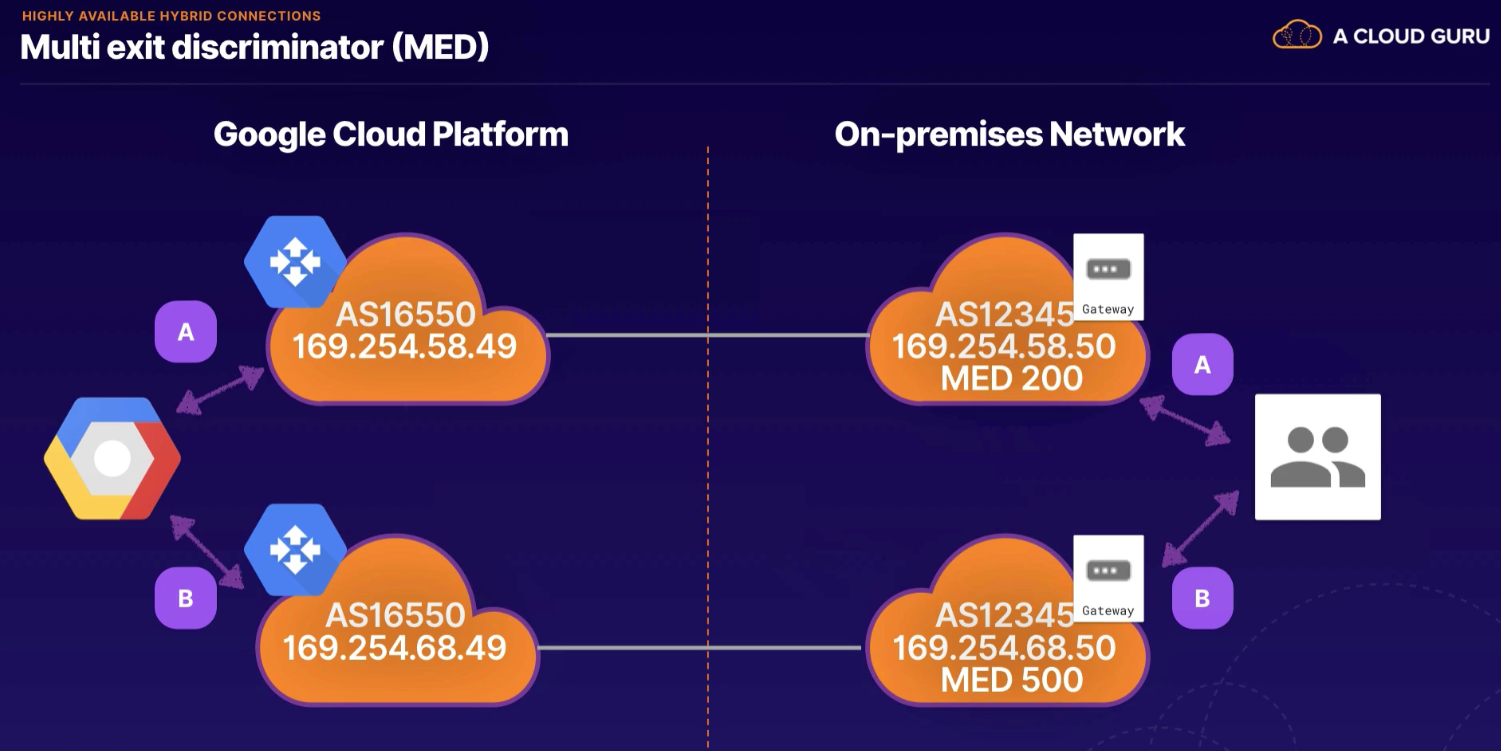

To know about HA

- edge availability domains are named per metro (zone 1, zone 2); use both in each metro for redundancy, since zone 1 may be under maintenance in two metros at the same time

- advertise MED values

- sets up equal cost multipath routing (ECMP)
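The zone rule above can be expressed as a layout check. A sketch under an assumption: that the highly available layout is at least two metros, each with connections in both `zone1` and `zone2`, so maintenance on every `zone1` at once still leaves a path. `is_redundant` and the metro names are hypothetical:

```python
from collections import defaultdict

def is_redundant(connections: list[tuple[str, str]]) -> bool:
    """connections: (metro, edge availability domain) pairs."""
    domains_by_metro = defaultdict(set)
    for metro, domain in connections:
        domains_by_metro[metro].add(domain)
    # Need 2+ metros, and every metro must cover both domains.
    return len(domains_by_metro) >= 2 and all(
        {"zone1", "zone2"} <= domains for domains in domains_by_metro.values()
    )

four = [("metro-a", "zone1"), ("metro-a", "zone2"),
        ("metro-b", "zone1"), ("metro-b", "zone2")]
print(is_redundant(four))                                        # True
print(is_redundant([("metro-a", "zone1"), ("metro-b", "zone1")]))  # False
```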

- Not a physical device. Software defined.

- Establish connection between on-premises network and GCP network.

- uses BGP routing protocol

- if using internal load balancing, recommend regional routing mode (vs global) to reduce risk of lost connectivity

- private ASN ranges: 64512 - 65534 and 42000000 - ...

Mode

- Regional (default) - Cloud Router advertises only the subnets in its own region

- Global - Cloud Router advertises subnets from all regions where the VPC network has subnets
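The two modes differ only in which subnets the router advertises. A minimal model, assuming a VPC described as a CIDR-to-region map; `advertised_subnets` and the `"REGIONAL"`/`"GLOBAL"` strings are illustrative labels, not a Google Cloud API call:

```python
def advertised_subnets(subnets: dict[str, str], router_region: str, mode: str) -> list[str]:
    """subnets maps CIDR -> region; returns the CIDRs the Cloud Router advertises."""
    if mode == "REGIONAL":
        return sorted(c for c, region in subnets.items() if region == router_region)
    if mode == "GLOBAL":
        return sorted(subnets)
    raise ValueError(f"unknown mode {mode!r}")

vpc = {"10.0.1.0/24": "us-east1", "10.0.2.0/24": "europe-west1"}
print(advertised_subnets(vpc, "us-east1", "REGIONAL"))  # ['10.0.1.0/24']
print(advertised_subnets(vpc, "us-east1", "GLOBAL"))    # ['10.0.1.0/24', '10.0.2.0/24']
```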

Direct connection between Google's network and another network to support the exchange of traffic.

- no direct connection

- global routing only, no BGP routing

- should use a VPN tunnel to add encryption across the link

- no setup or maintenance

- discounted egress rates

- need 24/7 NOC

- minimum traffic requirements (10 Gbps)

Carrier Peering Connections

- 1/3 discount on egress costs may justify extra provider costs

Availability

Deciding

- based on availability, make sure business needs are met

Architectures

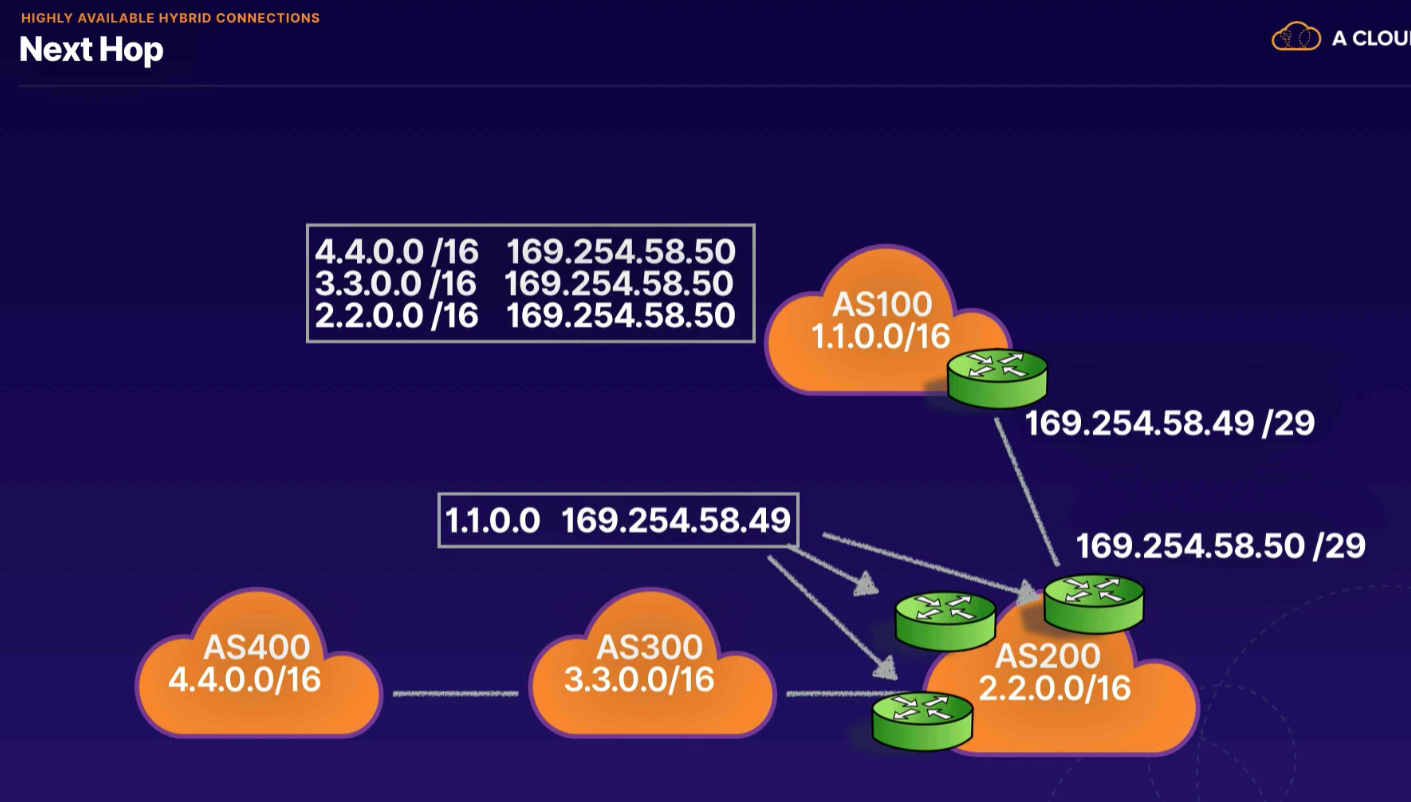

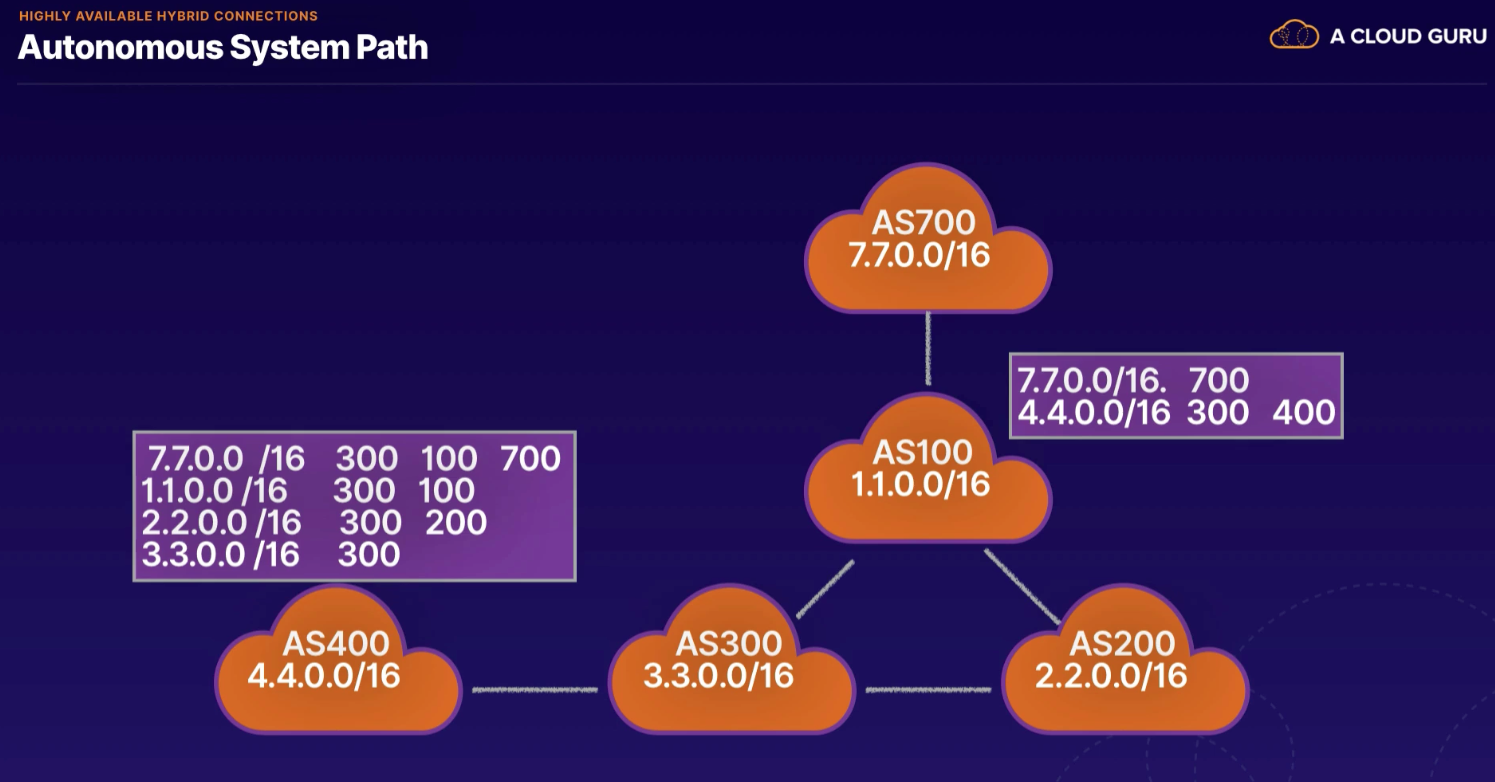

Autonomous System Numbers (ASN)

- group of routers that share network information to make connectivity possible

- routing on BGP supports only destination-based forwarding paradigm

- forwards packets based on the destination path

- routes based on destination IP address

- routing table describes the path to reach networks

- in case of route path failure, uses alternative routes
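Destination-based forwarding with failover can be sketched as a longest-prefix-match lookup that skips routes whose path is down. The route table, next-hop names, and `next_hop` helper are hypothetical; this shows the lookup idea only, not how Cloud Router programs routes:

```python
import ipaddress
from typing import Optional

# Each route: (destination prefix, next hop, is_up).
Route = tuple[str, str, bool]

def next_hop(dest: str, routes: list[Route]) -> Optional[str]:
    """Return the next hop of the longest matching prefix among routes that are up."""
    addr = ipaddress.ip_address(dest)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop, up in routes
        if up and addr in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    # Most specific prefix wins; on a tie, max() keeps the first route listed.
    return max(matches, key=lambda m: m[0].prefixlen)[1]

routes = [
    ("10.1.0.0/16", "interconnect-attachment", True),
    ("10.0.0.0/8", "vpn-tunnel", True),
    ("0.0.0.0/0", "default-internet-gateway", True),
]
print(next_hop("10.1.2.3", routes))  # interconnect-attachment
# Simulate the interconnect path failing: the less specific VPN route takes over.
down = [(p, h, h != "interconnect-attachment") for p, h, _ in routes]
print(next_hop("10.1.2.3", down))    # vpn-tunnel
print(next_hop("8.8.8.8", routes))   # default-internet-gateway
```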

Multi exit discriminator (MED)

- like a "tie breaker" to help router decide route (lower value wins)
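The tie-breaker rule, together with the earlier ECMP note, can be sketched as: keep every path with the lowest MED and spread traffic across them when several tie. This is a simplification; real BGP compares other attributes before MED. `select_paths` and the tunnel names are hypothetical:

```python
def select_paths(routes: list[tuple[str, int]]) -> list[str]:
    """routes: (next_hop, med) pairs for one prefix; returns the winning next hops."""
    lowest = min(med for _, med in routes)
    # Lower MED wins; equal lowest MEDs are all kept (ECMP).
    return [hop for hop, med in routes if med == lowest]

print(select_paths([("tunnel-a", 100), ("tunnel-b", 200)]))  # ['tunnel-a']
print(select_paths([("tunnel-a", 100), ("tunnel-b", 100)]))  # ['tunnel-a', 'tunnel-b']
```

Advertising the same MED on both paths gives active/active ECMP; a higher MED on one path makes it the backup.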

Maximum Transmission Unit (MTU)

- remember the outer packet adds encryption headers, so the inner packet needs to be about 75 bytes lower than the 1460 max so data can be routed without fragmentation
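The MTU rule is simple arithmetic. A sketch using the figures from these notes (1460-byte VPC MTU, about 75 bytes of tunnel overhead); the real overhead depends on cipher and encapsulation, and `inner_mtu` / `needs_fragmentation` are hypothetical helpers:

```python
VPC_MTU = 1460        # default from these notes
IPSEC_OVERHEAD = 75   # approximate overhead from these notes; varies by cipher

def inner_mtu(outer_mtu: int = VPC_MTU, overhead: int = IPSEC_OVERHEAD) -> int:
    """Largest inner packet that fits in the outer packet after encryption headers."""
    return outer_mtu - overhead

def needs_fragmentation(packet_bytes: int) -> bool:
    return packet_bytes > inner_mtu()

print(inner_mtu())                # 1385
print(needs_fragmentation(1400))  # True
```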

Partner Interconnect NEEDS PUBLIC ASN

- all others use private
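To tell the two apart, an ASN can be checked against the private-use ranges. A sketch assuming the RFC 6996 private ranges (64512-65534 and 4200000000-4294967294); `is_private_asn` is a hypothetical helper. As an example of a public ASN, 16550 is commonly cited as the Cloud Router ASN for Partner Interconnect (verify against current docs):

```python
# RFC 6996 private-use ranges: 16-bit and 32-bit.
PRIVATE_ASN_RANGES = [(64512, 65534), (4200000000, 4294967294)]

def is_private_asn(asn: int) -> bool:
    if not 0 <= asn <= 4294967295:
        raise ValueError(f"{asn} is not a valid 32-bit ASN")
    return any(lo <= asn <= hi for lo, hi in PRIVATE_ASN_RANGES)

print(is_private_asn(64512))  # True
print(is_private_asn(16550))  # False
```

Note that "not private" is not the same as "public": a few values (such as 0, 23456 and 65535) are reserved.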