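```cpp
#include <cstddef>
#include <iostream>
#include <map>
#include <string>

using namespace std;

// Every "brew" action is a function taking no arguments and returning an exit code.
typedef int (*BrewFunction)();
typedef std::map<std::string, BrewFunction> BrewMap;

// Registry from action name to function. It is defined before the registerer
// objects below, so within this translation unit it is initialized before they run.
BrewMap g_brew_map;

// Defines a file-local class whose constructor inserts `func` into g_brew_map,
// plus one global instance of it, so registration happens during static
// initialization, before main() runs. The macro body must not contain blank
// lines: every line except the last ends with a backslash continuation.
#define RegisterBrewFunction(func) \
namespace { \
class Registerer_##func { \
 public: \
  Registerer_##func() { \
    g_brew_map[#func] = &func; \
  } \
}; \
Registerer_##func g_registerer_##func; \
}

int train() {
  cout << "I am train!" << endl;
  return 0;
}
RegisterBrewFunction(train);

int test() {
  cout << "I am test!" << endl;
  return 0;
}
RegisterBrewFunction(test);

// Looks up a registered function by name. For an unknown name it lists the
// available names and returns NULL, so every path returns a value.
static BrewFunction GetBrewFunction(const std::string& name) {
  BrewMap::const_iterator found = g_brew_map.find(name);
  if (found != g_brew_map.end()) {
    return found->second;
  }
  cout << "Unknown action: " << name << ". Available actions:" << endl;
  for (BrewMap::const_iterator it = g_brew_map.begin(); it != g_brew_map.end(); ++it) {
    cout << "  " << it->first << endl;
  }
  return NULL;
}

int main(int argc, char** argv) {
  BrewFunction train_fn = GetBrewFunction("train");
  if (train_fn != NULL) train_fn();  // prints "I am train!"

  BrewFunction other_fn = GetBrewFunction("other");  // prints the available names
  if (other_fn != NULL) other_fn();
  return 0;
}
```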

-

numpy array vs. asarray

Both convert the input (e.g., a list, list of tuples, tuple, tuple of tuples, tuple of lists) to an ndarray. The difference is that `np.array` copies by default, while `np.asarray` does not copy if the input is already an ndarray with a matching dtype.

So for such an `a`, `np.asarray(a) is a` evaluates to True.
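A quick check of the copy behaviour (a sketch; the array contents and the float32 cast are arbitrary choices for illustration):

```python
import numpy as np

a = np.arange(4, dtype=np.float64)

same = np.asarray(a)                     # already an ndarray of matching dtype: no copy
copied = np.array(a)                     # np.array copies by default
cast = np.asarray(a, dtype=np.float32)   # dtype differs, so a new array is created

a[0] = 100.0                             # mutate the original
print(same is a, copied is a, cast is a)  # True False False
print(same[0], copied[0])                 # 100.0 0.0
```

Because `same` is literally the same object as `a`, writes through either name are visible in both; `copied` keeps the old values.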

-

zero_grad()

We need to set the gradients to zero manually in PyTorch because the `backward()` function accumulates gradients into `.grad` instead of replacing them. This is convenient for RNNs and, more generally, whenever a gradient should be summed over several `backward()` calls. In the following example, `y.backward()` is called 5 times, so the final value of `x.grad` will be 5 * cos(0) = 5.

```python
import torch

# Since PyTorch 0.4, tensors track gradients directly; torch.autograd.Variable is no longer needed.
x = torch.zeros(1, 1, requires_grad=True)
for t in range(5):
    y = x.sin()
    y.backward()  # adds cos(x) = 1 into x.grad each time
print(x.grad)  # shows 5
```

Calling `x.grad.zero_()` before `y.backward()` makes sure `x.grad` equals the current y'(x), not the sum of y'(x) over all previous iterations.

```python
x = torch.zeros(1, 1, requires_grad=True)
for t in range(5):
    if x.grad is not None:  # .grad is None until the first backward()
        x.grad.zero_()
    y = x.sin()
    y.backward()
print(x.grad)  # shows 1
```

-
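In a training loop the reset is usually done with `optimizer.zero_grad()`, and the accumulation can also be put to use: summing gradients over several micro-batches gives the same gradient as one backward pass over the whole batch. A sketch under assumed toy settings (a `torch.nn.Linear(3, 1)` model, random data, and a summed MSE loss so that the per-chunk losses add up exactly to the full-batch loss):

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(3, 1)
opt = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = torch.nn.MSELoss(reduction="sum")  # sum, so chunk losses add up to the full loss
data, target = torch.randn(8, 3), torch.randn(8, 1)

# Gradient from one backward pass over the whole batch.
opt.zero_grad()
loss_fn(model(data), target).backward()
full_grad = model.weight.grad.clone()

# Same gradient from two micro-batches: each backward() adds into .grad.
opt.zero_grad()
for xb, yb in zip(data.split(4), target.split(4)):
    loss_fn(model(xb), yb).backward()
accum_grad = model.weight.grad.clone()

print(torch.allclose(full_grad, accum_grad, atol=1e-6))  # True
opt.step()  # one update using the accumulated gradient
```

This is the usual way to emulate a large batch when it does not fit in memory: call `zero_grad()` once per update, not once per `backward()`.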

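custom dataset layer

```python
from torch.utils.data import Dataset

class MyDataset(Dataset):
    def __init__(self, samples):
        # initialization code; `samples` is a placeholder for whatever the dataset wraps
        self.samples = samples

    def __len__(self):
        # size of the dataset
        return len(self.samples)

    def __getitem__(self, idx):
        # return the idx-th item in the dataset
        return self.samples[idx]
```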
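`DataLoader` only needs `__len__` and `__getitem__` to batch (and optionally shuffle) such a map-style dataset. A sketch with a hypothetical `SquaresDataset` whose item `i` is the pair `(i, i**2)`:

```python
import torch
from torch.utils.data import DataLoader, Dataset

class SquaresDataset(Dataset):
    """Hypothetical toy dataset: item i is (i, i**2) as 1-element float tensors."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        x = torch.tensor([float(idx)])
        return x, x ** 2

loader = DataLoader(SquaresDataset(10), batch_size=4, shuffle=False)
batch_sizes = []
last_targets = None
for xb, yb in loader:  # the default collate_fn stacks items into (batch, 1) tensors
    batch_sizes.append(xb.shape[0])
    last_targets = yb
print(batch_sizes)  # [4, 4, 2]
```

With 10 items and `batch_size=4`, the last batch is smaller unless `drop_last=True` is passed to `DataLoader`.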

-

clahe

Adaptive histogram equalization (AHE) is a computer image processing technique used to improve contrast in images. It differs from ordinary histogram equalization in the respect that the adaptive method computes several histograms, each corresponding to a distinct section of the image, and uses them to redistribute the lightness values of the image. It is therefore suitable for improving the local contrast and enhancing the definitions of edges in each region of an image.

However, AHE has a tendency to overamplify noise in relatively homogeneous regions of an image. A variant of adaptive histogram equalization called contrast limited adaptive histogram equalization (CLAHE) prevents this by limiting the amplification.
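The contrast-limiting step can be sketched for a single tile in NumPy (an illustrative simplification, not a full CLAHE: real implementations also split the image into tiles and bilinearly interpolate the per-tile lookup tables, and may redistribute the clipped excess more than once; for actual use, OpenCV's `cv2.createCLAHE` and scikit-image's `skimage.exposure.equalize_adapthist` implement the full method). The function name and the 8x8 test tile are made up for illustration:

```python
import numpy as np

def clipped_equalization_lut(tile, clip_limit, n_bins=256):
    """Gray-level lookup table for one uint8 tile.

    clip_limit is a fraction of the tile's pixel count. Histogram bins above
    it are cut down and the excess is spread evenly over all bins before the
    CDF is taken. clip_limit=1.0 never clips, i.e. plain histogram equalization.
    """
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(np.float64)
    limit = clip_limit * tile.size
    excess = np.maximum(hist - limit, 0.0).sum()
    hist = np.minimum(hist, limit) + excess / n_bins  # single redistribution pass
    cdf = np.cumsum(hist) / hist.sum()
    return np.round(cdf * (n_bins - 1)).astype(np.uint8)

# A nearly homogeneous 8x8 tile: half the pixels are 100, half are 101.
tile = np.full((8, 8), 100, dtype=np.uint8)
tile[:, 4:] = 101

plain = clipped_equalization_lut(tile, clip_limit=1.0)
limited = clipped_equalization_lut(tile, clip_limit=0.01)
print(int(plain[101]) - int(plain[100]))      # 127: a 1-level difference blown up
print(int(limited[101]) - int(limited[100]))  # 4: amplification limited
```

The homogeneous tile shows the overamplification problem directly: plain equalization turns a one-level difference (which could just be noise) into a 127-level jump, while clipping the histogram keeps the slope of the mapping small.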

-

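cdf: Cumulative Distribution Function, cdf(x) = P(X ≤ x)

```matlab
im = imread('test.jpg');
[H, bins] = imhist(rgb2gray(im));   % gray-level histogram (pixel counts)
cdf = cumsum(H) / sum(H);           % divide by the pixel count so the cdf ends at 1
plot(bins, cdf)
```

-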

https://www.math.uci.edu/icamp/courses/math77c/demos/hist_eq.pdf
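Histogram equalization, the subject of the linked notes, sends each gray level k to 255 · cdf(k), where cdf is the empirical CDF of the image's gray levels. A NumPy sketch (function names and the tiny 2x4 image are illustrative):

```python
import numpy as np

def empirical_cdf(gray, n_bins=256):
    """cdf[k] = fraction of pixels with gray level <= k, for a uint8 image."""
    hist = np.bincount(gray.ravel(), minlength=n_bins)
    return np.cumsum(hist) / hist.sum()

def equalize(gray):
    """Histogram equalization: map gray level k to round(255 * cdf[k])."""
    lut = np.round(empirical_cdf(gray) * 255).astype(np.uint8)
    return lut[gray]

gray = np.array([[0, 0, 1, 1],
                 [2, 2, 3, 3]], dtype=np.uint8)
print(equalize(gray))
# [[ 64  64 128 128]
#  [191 191 255 255]]
```

Four gray levels crowded at the dark end are spread over the full 0..255 range, because each level lands at its cumulative share of the pixels.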

-

batchnorm

In Caffe's BatchNorm layer, when `use_global_stats` is false, the layer normalizes each mini-batch with that batch's own mean/variance and accumulates running estimates of these statistics. This is the desired behavior during training. When `use_global_stats` is true, the layer instead uses the pre-computed statistics (accumulated during training) to normalize its inputs, which is what you want at test time.
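The two modes can be sketched in NumPy for (N, C) inputs. This is an illustration under assumptions: the class name is hypothetical, there is no learnable scale/shift, and the running statistics use an exponential moving average with `momentum=0.1`, which is not Caffe's exact accumulation rule:

```python
import numpy as np

class BatchNormSketch:
    """Per-feature normalization of (N, C) inputs, without learnable scale/shift."""

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        self.running_mean = np.zeros(num_features)
        self.running_var = np.ones(num_features)
        self.momentum = momentum
        self.eps = eps

    def __call__(self, x, use_global_stats):
        if use_global_stats:
            # Test time: use the statistics accumulated during training.
            mean, var = self.running_mean, self.running_var
        else:
            # Training: use this batch's statistics and update the running estimates.
            mean, var = x.mean(axis=0), x.var(axis=0)
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        return (x - mean) / np.sqrt(var + self.eps)

rng = np.random.default_rng(0)
bn = BatchNormSketch(3)
batch = rng.normal(loc=5.0, scale=2.0, size=(64, 3))
train_out = bn(batch, use_global_stats=False)  # ~zero mean, ~unit variance per feature
test_out = bn(batch, use_global_stats=True)    # running stats have only moved 10% toward this batch
```

After a single training step the running statistics are still far from the batch statistics, so `test_out` differs from `train_out`; in practice they converge over many iterations.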

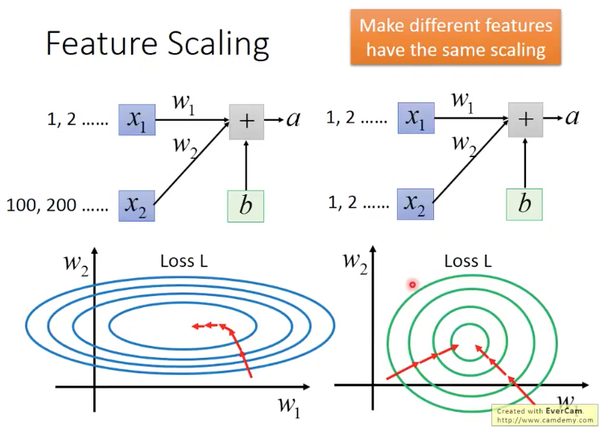

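The picture is an example of feature scaling. In the left figure, the inputs x1 and x2 are not scaled; in the right figure, they are. Note the difference between the loss landscapes for these two kinds of inputs: unscaled inputs typically give elongated loss contours, along which gradient descent zigzags and converges slowly, while scaled inputs give rounder contours, so the gradient points more directly at the minimum.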
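The effect on optimization can be measured by counting gradient-descent steps for a linear least-squares fit before and after standardizing the features. A sketch under assumptions: the data are synthetic (two features with standard deviations 1 and 10), and the step size is set to 1/L with L the largest Hessian eigenvalue:

```python
import numpy as np

def gd_iterations(X, y, tol=1e-6, max_iter=100_000):
    """Gradient descent on the mean squared error of a linear model.

    Step size is 1/L, where L is the largest eigenvalue of the Hessian X^T X / n.
    Returns the number of steps until the gradient norm drops below tol.
    """
    n = X.shape[0]
    step = 1.0 / np.linalg.eigvalsh(X.T @ X / n).max()
    w = np.zeros(X.shape[1])
    for i in range(max_iter):
        grad = X.T @ (X @ w - y) / n
        if np.linalg.norm(grad) < tol:
            return i
        w -= step * grad
    return max_iter

rng = np.random.default_rng(0)
n = 200
# Two hypothetical features on very different scales (std 1 vs. std 10).
X_raw = np.column_stack([rng.normal(0.0, 1.0, n), rng.normal(0.0, 10.0, n)])
y = X_raw @ np.array([2.0, 0.3]) + rng.normal(0.0, 0.1, n)
# Standardize each feature to zero mean and unit variance.
X_scaled = (X_raw - X_raw.mean(axis=0)) / X_raw.std(axis=0)

iters_raw = gd_iterations(X_raw, y)
iters_scaled = gd_iterations(X_scaled, y)
print("unscaled:", iters_raw)
print("scaled:  ", iters_scaled)
```

Unscaled, the Hessian's eigenvalues differ by roughly a factor of 100, so the step size must be small enough for the steep direction and progress along the shallow one is slow; after scaling, the eigenvalues are close and a few steps suffice.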

-

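Momentum

Momentum is used to dampen fluctuations in the weight updates over consecutive iterations: each update is a decaying sum of past gradients, so gradient components that flip sign from step to step partly cancel, while components that point in a consistent direction build up.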
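A sketch of the classical (heavy-ball) update v ← μ·v − η·∇f(w), w ← w + v, on a hypothetical ill-conditioned quadratic f(w) = 0.5 · (w1² + 50 · w2²); the learning rate, μ = 0.9, and step count are illustrative choices:

```python
import numpy as np

# Hypothetical ill-conditioned quadratic: f(w) = 0.5 * (w1^2 + 50 * w2^2).
curvature = np.array([1.0, 50.0])

def loss(w):
    return 0.5 * np.sum(curvature * w ** 2)

def grad(w):
    return curvature * w

def run(momentum, lr=0.01, steps=100):
    """Heavy-ball momentum; momentum=0 reduces to plain gradient descent."""
    w = np.array([1.0, 1.0])
    v = np.zeros_like(w)
    for _ in range(steps):
        v = momentum * v - lr * grad(w)  # decaying sum of past gradients
        w = w + v
    return loss(w)

plain_loss = run(momentum=0.0)
momentum_loss = run(momentum=0.9)
print("plain GD:    ", plain_loss)
print("momentum 0.9:", momentum_loss)
```

With the same learning rate, momentum ends at a much lower loss here, mainly because the velocity keeps growing along the shallow w1 direction, where every gradient points the same way.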

-

Cross-Entropy Loss
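For a classifier that outputs raw scores (logits) z and a true class y, the softmax cross-entropy loss is −log(exp(z_y) / Σ_j exp(z_j)) = log Σ_j exp(z_j) − z_y. A minimal NumPy sketch that averages over a batch and uses the log-sum-exp trick for numerical stability (function and argument names are illustrative):

```python
import numpy as np

def cross_entropy(logits, targets):
    """Mean softmax cross-entropy over a batch.

    logits: (N, C) array of unnormalized scores; targets: (N,) integer class ids.
    """
    # Subtract each row's max before exponentiating to avoid overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()

uniform = cross_entropy(np.array([[0.0, 0.0]]), np.array([0]))     # log(2), about 0.6931
confident = cross_entropy(np.array([[1000.0, 0.0]]), np.array([0]))  # ~0, no overflow
print(uniform, confident)
```

Computing `np.exp(1000.0)` directly would overflow to `inf`; shifting by the row maximum leaves the softmax unchanged while keeping every exponent at most 0.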

-

git subtree

```shell
## Make sure every project has added zenjs as a remote
git remote add zenjs http://github.com/youzan/zenjs.git
## Add zenjs to each project
git subtree add --prefix=components/zenjs zenjs master
## How each project pulls updates to the zenjs code
git subtree pull --prefix=components/zenjs zenjs master
## How each project pushes its zenjs changes back
git subtree push --prefix=components/zenjs zenjs hotfix/zenjs_xxxx
```

-

git merge a remote branch from a different repo

Clone the remote repo locally first (e.g., as `bar`, next to the current repo).

```shell
# Add the bar repository as a remote and fetch it:
git remote add bar ../bar
git remote update
git checkout -b baz
# Merge branch somebranch from the bar repository into the current branch:
git merge bar/somebranch
# If the two repositories share no history, Git 2.9+ refuses this merge unless you run:
#   git merge --allow-unrelated-histories bar/somebranch
```